Importance Of User Experience, Authoritative Content And Page Speed Improvements For A Rapid Organic Traffic Growth: Unibaby Project

2. Meta Tag optimization/organization

3. Internal links and anchor texts

4. Nofollow and link sculpting for better PageRank distribution

5. Structured data and content marketing strategy

6. Social media activity, user retention, and Google Search Relevance

7. Conclusions and takeaways

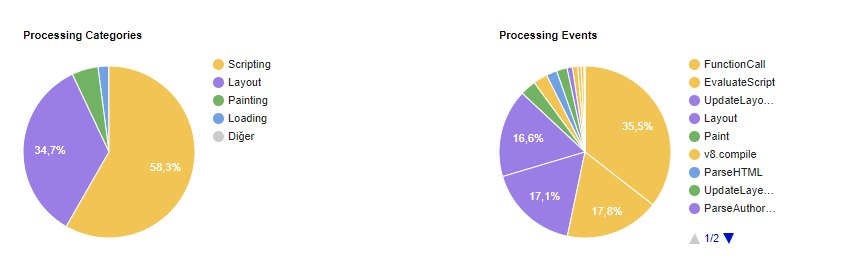

In this article, page speed, page layout, content types, structured data, user experience, internal linking, PageRank distribution, social media activities, and user retention are examined with the same attitude.

Below you will find the definitions of the Resource Prioritization Hints and the differences between them:

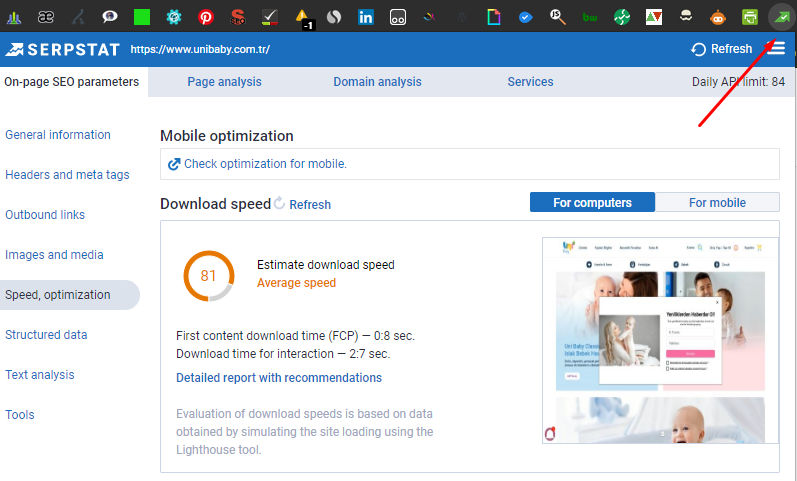

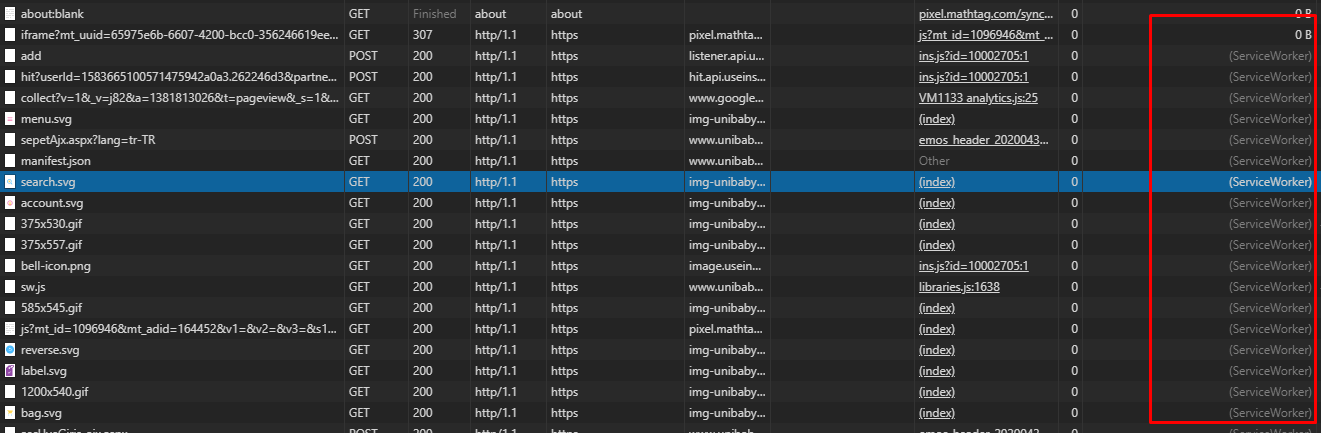

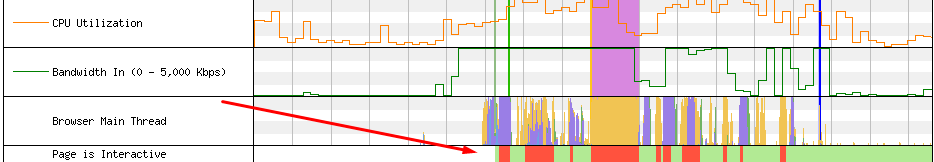

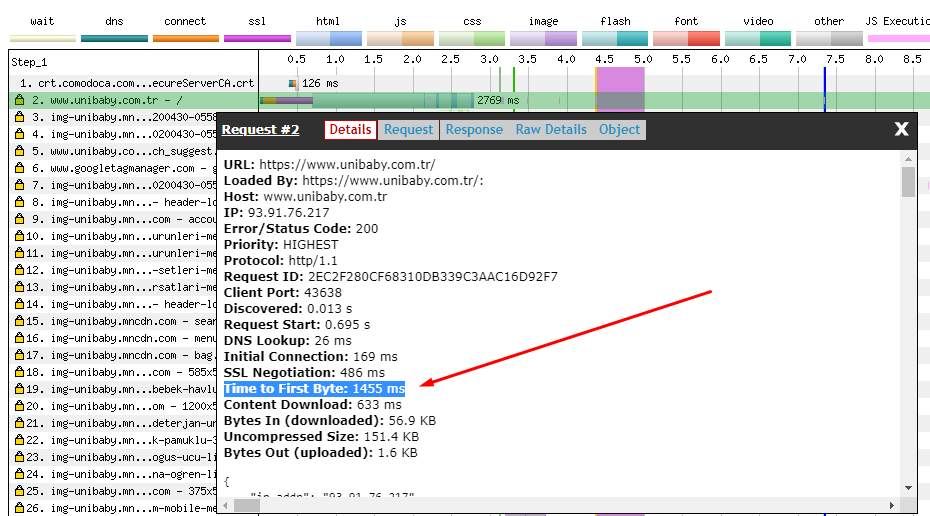

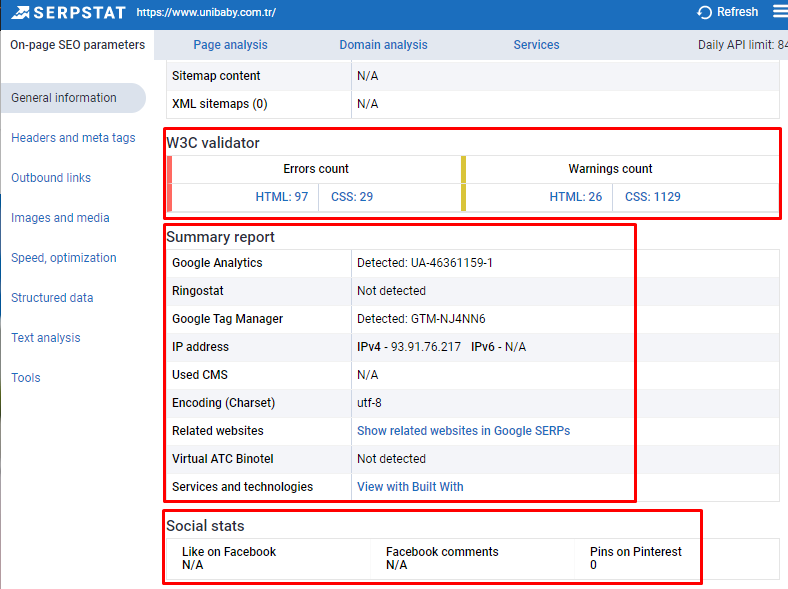

We performed both in a short period. However, because of new deployments by the developer team and newly added third-party trackers, these improvements stayed active for only one month.

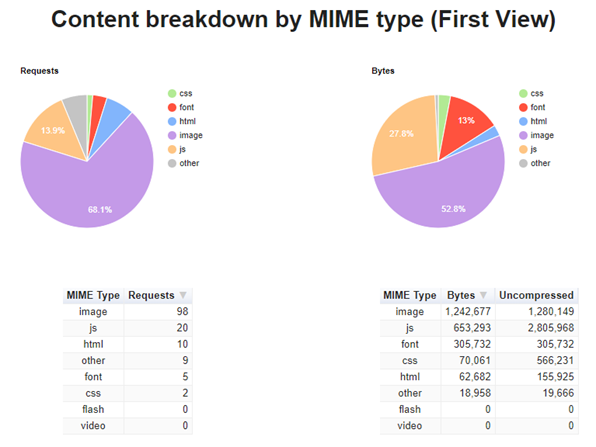

It can be done in many ways: you may delete spaces, comments, unused variables and JavaScript functions, unused CSS variables and properties, and unnecessary DOM Elements.

This list could be longer. For instance, you may also focus on cleaning up unused code, or you can decrease the request count with bundles. On this project, we mainly concentrated on compressing rather than minifying because of the time limit.

We compressed all of the HTML Documents and the JS and CSS Resources in this part of the project. Still, during new deployments and publishing processes, some old documents or uncompressed resources keep going live. This should remind us of the opening sentences of this case study.
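To make the difference between minifying and compressing concrete, here is a minimal Python sketch that uses only the standard library. The CSS sample and the `naive_minify` helper are hypothetical illustrations, not the tooling used on this project; a real pipeline would normally rely on a dedicated minifier and on server-level gzip or Brotli.

```python
import gzip
import re


def naive_minify(css: str) -> str:
    """Rough CSS minifier for illustration only: strips comments and whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace runs
    css = re.sub(r"\s*([{};:,])\s*", r"\1", css)          # drop spaces around punctuation
    return css.strip()


def gzip_size(text: str) -> int:
    """Size in bytes of the gzip-compressed UTF-8 encoding of `text`."""
    return len(gzip.compress(text.encode("utf-8")))


# Hypothetical stylesheet: one rule repeated to mimic a larger file.
SAMPLE_CSS = """
/* product card */
.product-card {
    margin: 0 auto;
    padding: 16px;
    color: #333333;
}
""" * 50

minified = naive_minify(SAMPLE_CSS)

print("original bytes:      ", len(SAMPLE_CSS.encode("utf-8")))
print("minified bytes:      ", len(minified.encode("utf-8")))
print("gzip(original) bytes:", gzip_size(SAMPLE_CSS))
print("gzip(minified) bytes:", gzip_size(minified))
```

Minification changes the file itself, while compression only changes how it travels over the network, so the two can be combined when time allows.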

A healthy and robust relationship between SEOs and Developers and the education of the customer are two crucial points for a successful project.

So if you don't create a proper Meta Tag Strategy and a CTR test platform, Google will probably rewrite your meta tags, and you may not reach your target audience.

Because of this situation, Google recommends not using JavaScript for Meta Tags, internal links, or the main content of the web page.

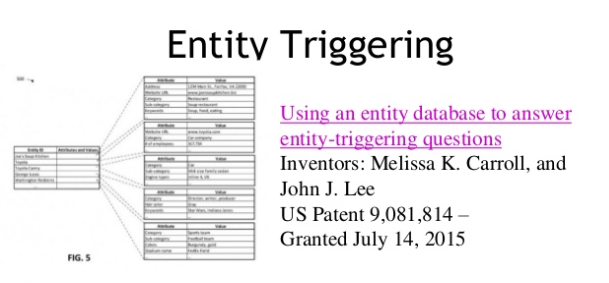

We have paid attention to Entities and their relationships in our articles.

I have experienced similar problems, like the ones below, on some projects and fixed them with this method.

One example is the HTML Lang Attribute. Googlers have said that they don't use it as a ranking or evaluation signal. Most people buy an English theme with an English HTML Lang Attribute but use it for content in another language. This confuses Google. But if you have a semantic and stable Web Entity, the Search Engine can understand you easily and run different, cheaper algorithms for your Web Entity.
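A mismatch between the declared language and the actual content language is easy to check automatically. The following is a minimal sketch with Python's standard `html.parser`; the function names `declared_lang` and `lang_matches` are hypothetical, and the expected language is assumed to be known by the auditor.

```python
from html.parser import HTMLParser
from typing import Optional


class LangExtractor(HTMLParser):
    """Records the lang attribute of the first <html> tag it sees."""

    def __init__(self) -> None:
        super().__init__()
        self.lang: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag == "html" and self.lang is None:
            self.lang = dict(attrs).get("lang")


def declared_lang(html: str) -> Optional[str]:
    """Return the value of <html lang="...">, or None if it is not declared."""
    parser = LangExtractor()
    parser.feed(html)
    return parser.lang


def lang_matches(html: str, expected: str) -> bool:
    """Compare only the primary language subtag, so 'en-US' matches 'en'."""
    lang = declared_lang(html)
    return lang is not None and lang.lower().split("-")[0] == expected.lower()


# A Turkish page shipped with an English theme's lang attribute.
page = '<html lang="en-US"><body><h1>Bebek bakımı</h1></body></html>'
print(declared_lang(page), lang_matches(page, "tr"))
```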

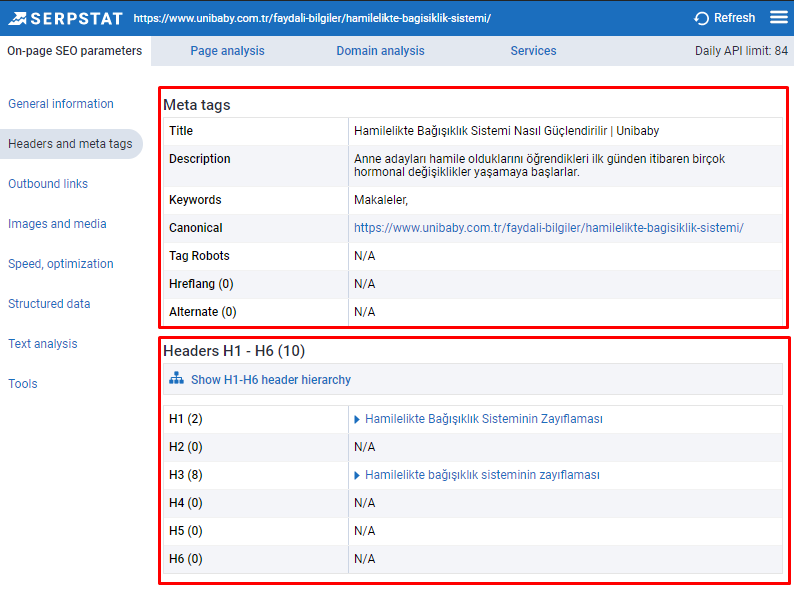

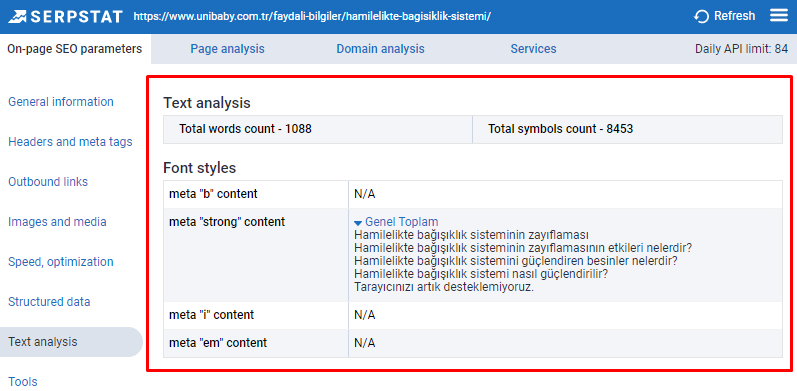

The same goes for Heading Tags. If you use them correctly in Semantic HTML to give hints to Googlebot, you make it easier for Googlebot to crawl, render, and evaluate your content.

In addition, Google sometimes rewrites a web page's title according to its Heading 1 tag. This means Google thinks that, in some cases, a web page's H1 tag represents its content or function better, because most publishers change their meta titles for more clicks and a higher CTR without thinking about the actual content on their web page.

So, if Google can use Heading 1 as the Meta Title in its experiments and meta tag rewrite systems without telling you, we should still care about Heading Tags. Furthermore, Google may try new things with Heading Tags in the future without telling you, just as it stopped using the Link Rel Prev and Next Tags without telling us for years.

If your CSS and JS resources can't be fetched, downloaded, or rendered, or if Googlebot comes to your site without rendering your JS Resources, your HTML document can tell the Search Engine more about your content.

Google may use Heading Tags in its Search Algorithms and increase their value for Search Rankings without telling you. Do you remember that they stopped using Pagination Tags without telling us for years?

Google's explanation can decrease publishers' attention to Heading Tags, and this may be exactly what Google wants. This way, only people who care about a proper Heading Hierarchy will use heading tags in their Semantic HTML.

Imagine that Google made a statement about the correlation between Social Media Shares and Rankings: we would see a massive explosion in social shares just because of the Search Engine's statement. This is precisely the opposite of what Google wants. Google wants natural internet interaction without any manipulative intent.

My last thought on this topic is that Google is not the only Search Engine. Also, Google's explanation doesn't say that they give no weight to heading tags; it says that they can understand the web better than in past years.

We have used at least one H1 Tag, which defines the web page's purpose, with a proper font size and position on the web page.

We have used H2 tags for every section on the web page, each defining that section's purpose. We have also used H3 Tags for intermediate and minor parts between H2 Tags.

After thorough brainstorming, we finally set up a semantic, hierarchical, clear, and easy-to-understand Heading Structure on our web pages.
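A heading structure like the one described above can be audited with a few lines of code. This is a minimal sketch using Python's standard `html.parser`; the rules it checks (at least one H1, the first heading is an H1, no skipped levels) are one reasonable reading of "semantic and hierarchical", not an official Google requirement, and `audit_headings` is a hypothetical helper name.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Collects heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def audit_headings(html: str) -> list:
    """Return a list of human-readable issues; an empty list means no issue found."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    issues = []
    if 1 not in levels:
        issues.append("missing h1")
    if levels and levels[0] != 1:
        issues.append("first heading is not h1")
    # A heading may go at most one level deeper than the previous heading.
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            issues.append(f"skipped level: h{prev} -> h{cur}")
    return issues


good = "<h1>Baby care</h1><h2>Feeding</h2><h3>Night feeding</h3><h2>Sleep</h2>"
bad = "<h2>Feeding</h2><h4>Night feeding</h4>"
print(audit_headings(good))
print(audit_headings(bad))
```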

Google works on the web as it finds it. It does not expect the web to be what it wants.

However, at this point, the same mindset applies to Heading Tags. If you use them correctly, the Search Engine will give you an advantage. If you use them incorrectly, you will not be able to take advantage of them. If you use them manipulatively, you will be harmed.

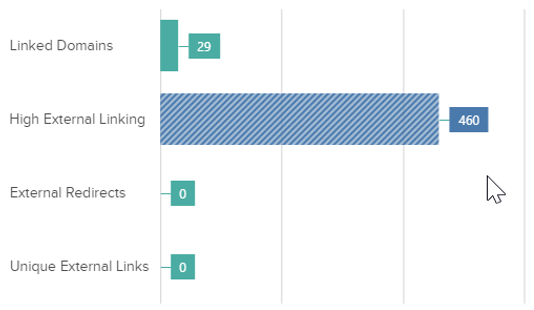

Some of the web pages don't have any anchor text or internal contextual links. eCommerce pages and blog pages were not linking to each other often enough for more revenue and easier Intelligent Navigation. Some pages have an extremely high number of internal and external links along with spammy anchor texts.

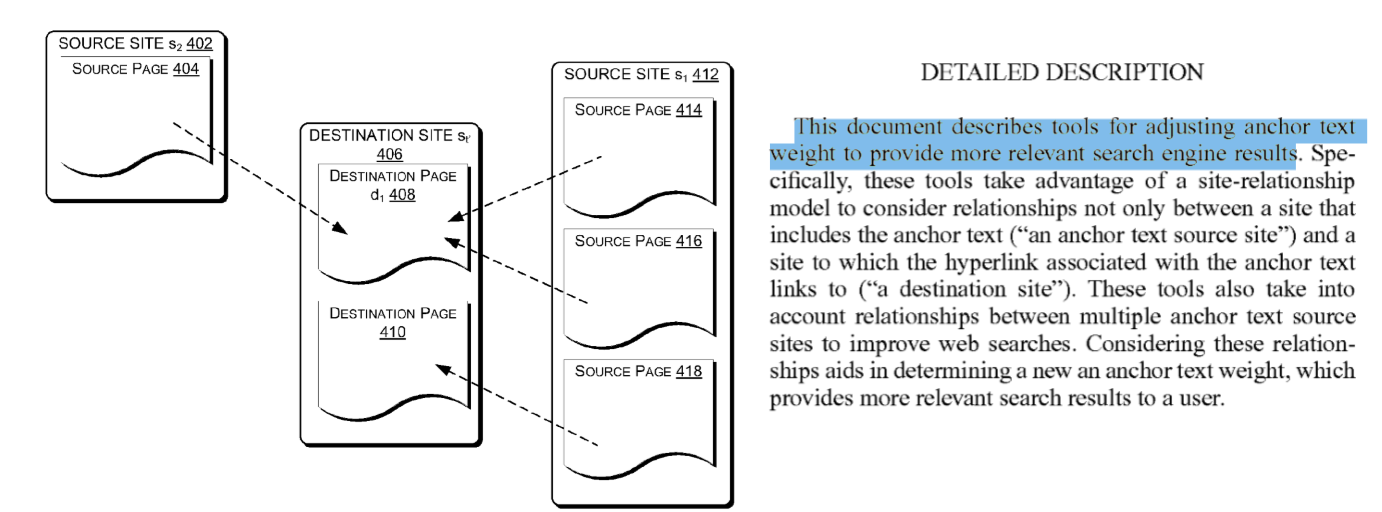

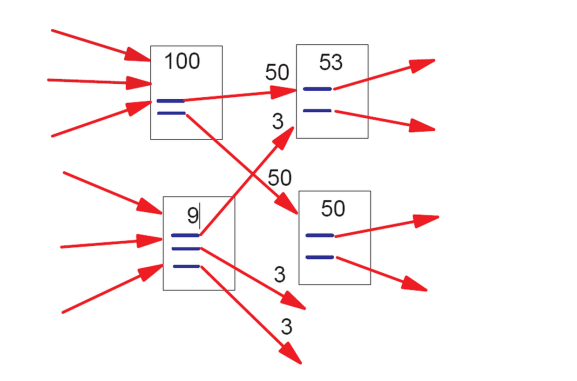

Related topics or products and their blog equivalents were not linking to each other. The most used anchor texts were brand keywords and ordinary CTA words instead of product and generic queries. Internal Links carry different PageRank and Importance values according to their position, size, color, click probability, and dwell time. Unibaby.com.tr had not paid attention to its internal links and their interaction with users.

We have cleaned up the anchor texts on the web pages. We have used more relevant and contextual anchor texts between more relevant web pages. We have changed our internal link profile in favor of our important web pages to show consistency between user/click flow and internal link structure, so that Search Engine Crawlers can understand which part of the site is important. We have also changed the position of the internal links in the content.
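An anchor text audit of this kind can start from a simple count of the anchor texts used on internal links. The sketch below uses only the Python standard library; the `GENERIC_ANCHORS` list, the example URLs, and the function name `internal_anchor_report` are hypothetical, and a real audit would run over a full crawl rather than a single HTML string.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical list of "ordinary CTA words" to flag.
GENERIC_ANCHORS = {"click here", "read more", "buy now"}


class AnchorCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href="..."> element."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # normalize whitespace
            self.links.append((self._href, text))
            self._href = None


def internal_anchor_report(html: str, page_url: str):
    """Count anchor texts of internal links and list the generic or empty ones."""
    collector = AnchorCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    counts = Counter()
    for href, text in collector.links:
        # Resolve relative URLs, then keep only links that stay on the same host.
        if urlparse(urljoin(page_url, href)).netloc == host:
            counts[text.lower()] += 1
    flagged = {t: n for t, n in counts.items() if t == "" or t in GENERIC_ANCHORS}
    return counts, flagged


sample = (
    '<a href="/baby-wipes">baby wipes</a>'
    '<a href="/blog/newborn-care">Read more</a>'
    '<a href="https://example.org/">partner site</a>'
    '<a href="/campaign"><img src="banner.png"></a>'
)
counts, flagged = internal_anchor_report(sample, "https://www.example.com/")
print(counts)
print(flagged)
```

Links with an empty anchor text (for example, image-only links) and generic CTA anchors are the first candidates for more descriptive, query-oriented wording.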

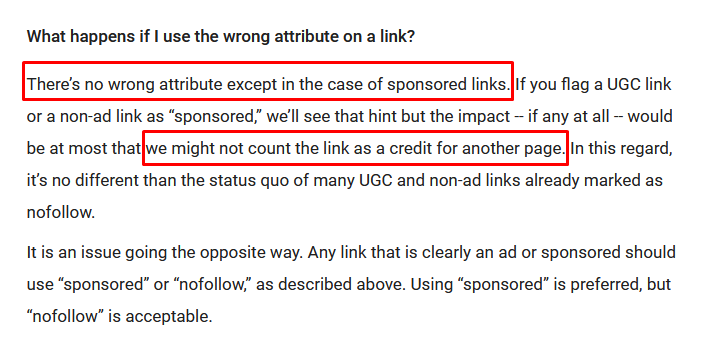

Most SEOs make three mistakes with Link Sculpting.

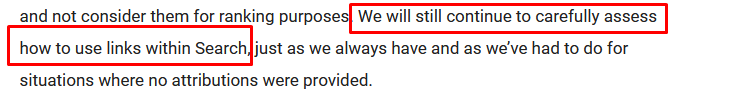

Also, if you have any doubts about Nofollow being treated as a hint, you should use "Sponsored" instead of the Nofollow or UGC Tags. You can see the reason for this suggestion below:

We have used global health organizations and research institutions as information sources instead of ordinary eCommerce competitors.

Google can perceive a piece of content's sentiment structure, the expertise level of its language, its readability and reliability, and the Entity's authority on a topic. Unibaby.com.tr's informative and eCommerce content was not comprehensive and did not offer complete guides; even the eCommerce aggregators listing its products gave more information about them.

- Performed a Content GAP Analysis with our competitors.

- Extracted all of the user queries which have more than 1000 Impressions but zero clicks.

- Extracted top landing pages of top competitors and their queries and compared them to ours.

- Researched the concerns and information needs of expectant mothers and fathers before and after their baby is born, along with new fathers' and mothers' experiences.

- Examined the layout of competitors along with their content structure.

- Created extremely detailed content briefs for writers for every possible informative, navigational, and commercial query.

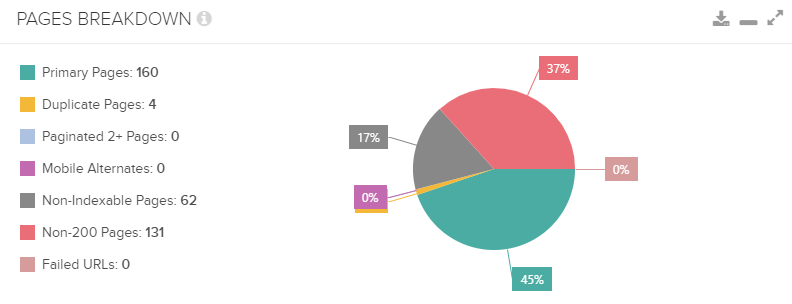
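The extraction of queries with more than 1000 impressions but zero clicks can be sketched as a simple filter over a performance export. The column names `query`, `clicks`, and `impressions` and the sample rows are assumptions for illustration; an actual Google Search Console export may use different headers.

```python
import csv
import io


def zero_click_queries(csv_text: str, min_impressions: int = 1000):
    """Return (query, impressions) pairs with zero clicks and more than
    `min_impressions` impressions, sorted by impressions in descending order."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [
        (row["query"], int(row["impressions"]))
        for row in reader
        if int(row["clicks"]) == 0 and int(row["impressions"]) > min_impressions
    ]
    return sorted(rows, key=lambda item: item[1], reverse=True)


# Hypothetical export rows.
EXPORT = """query,clicks,impressions
diaper rash,0,1500
newborn care,35,9000
baby wipes,0,4200
wet wipes price,0,300
"""

for query, impressions in zero_click_queries(EXPORT):
    print(query, impressions)
```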

- Deleted some of the underperforming content.

- Created a Data Studio Dashboard for determining Keyword Cannibalization Problems.

- Merged thin and cannibalized content.

- Expanded product show cards with more information and reorganized their meta tags around popular and relevant user queries.

- Used Google's Standard Natural Language Processor to see whether Google can understand our new content better or not.

- Used only present-tense sentences with Scientific Facts in the new content.

- Extracted Entities, TF-IDF Words, and Phrases from the content and compared them to competitors' to see which is more relevant, concise, and useful for users.

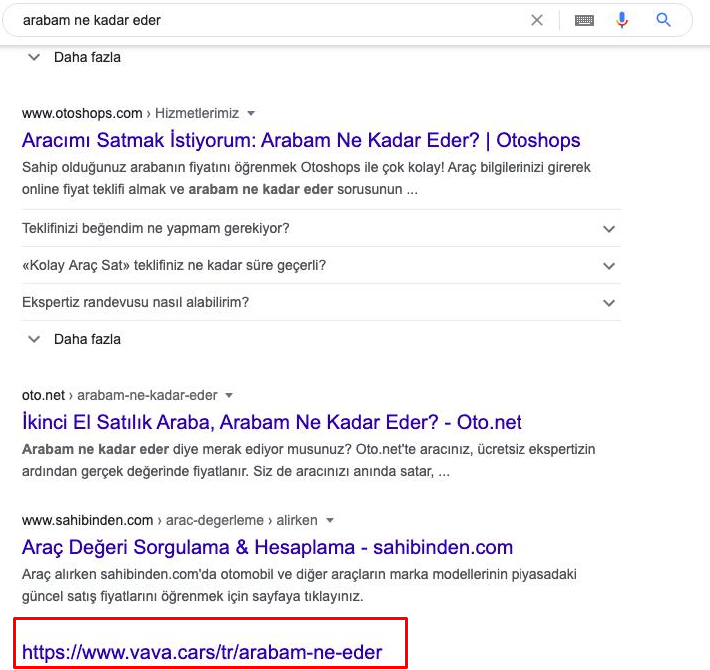

- Used FAQ Structured Data to give users what they need without even clicking, to create Brand Awareness, and to occupy more SERP real estate.

Perfect Content should be as short as possible but as long as needed.
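For the FAQ Structured Data step in the list above, the markup is typically a JSON-LD block of schema.org type `FAQPage`. Here is a minimal sketch that builds one in Python; the question and answer text are placeholders, and `faq_jsonld` is a hypothetical helper, not the generator used on this project.

```python
import json


def faq_jsonld(pairs) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


# Placeholder content for illustration.
faq = faq_jsonld([
    ("How often should I change a diaper?", "Placeholder answer text."),
    ("Are wet wipes safe for newborns?", "Placeholder answer text."),
])
print(f'<script type="application/ld+json">\n{faq}\n</script>')
```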

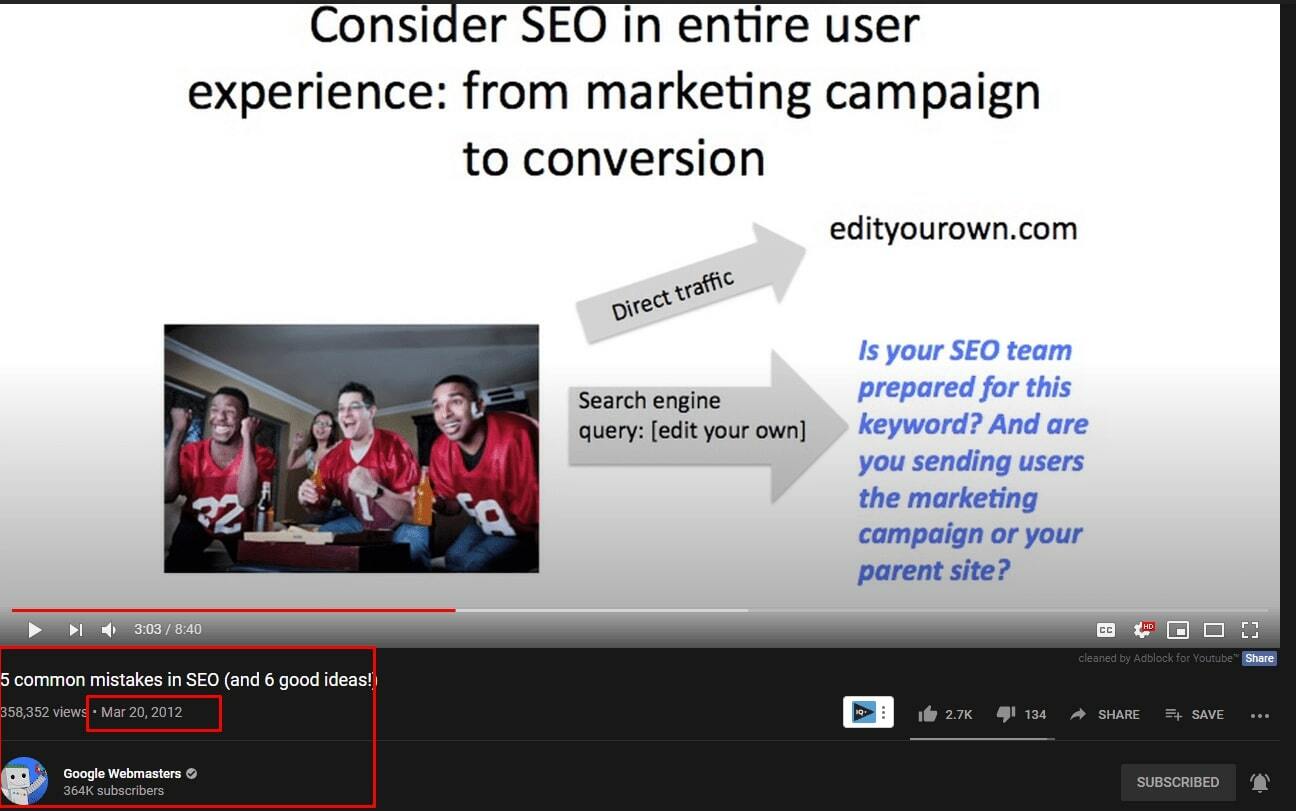

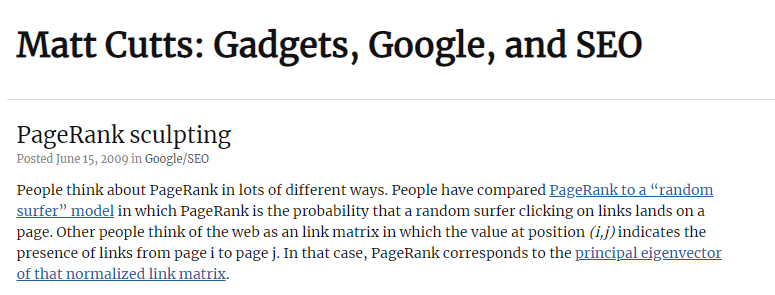

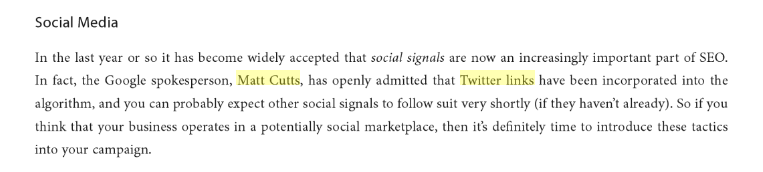

Furthermore, below you will find proof that Google used to be more open about its algorithm.

P.S.: In 2014, they stopped publishing this information; they said "World get bored…" instead of "We want to hide our algorithm".

Regarding Social Media, you will find below a changelog from Google's own official blog. Like Matt Cutts' explanation of Twitter Links and Author Reputation, it says that they are getting better at indexing profiles and creating relations between them.

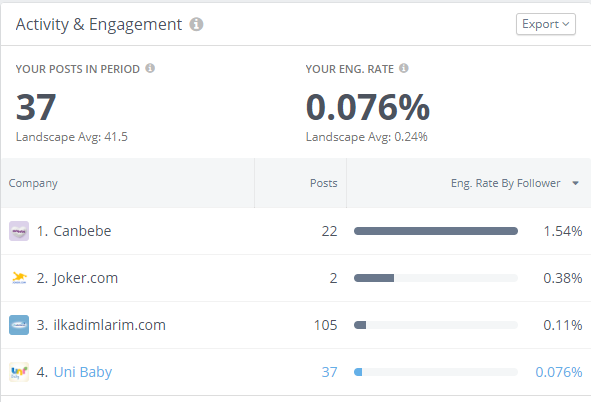

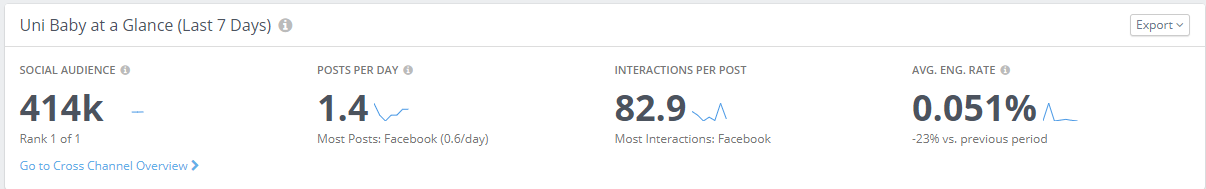

- I have used RivalIQ, Twitter Analytics, YouTube Analytics, and Google Analytics to examine social media traffic to the website and its behavior.

- I have researched the hashtags, engagement rates, and user engagement time.

- I have researched the YouTube hashtags, the most frequent words in comments, video titles, descriptions, and their links, along with info cards.

- We have created links that connect social media profiles to the domain and prepared Organization structured data that includes them.

- We have researched the social media content that receives organic traffic via Google and its features.

- We have started using all of the social media accounts in parallel, more actively and more semantically, to create better topical relevance and authority for certain types of main queries.

- We have used Brand Name, Category Name, and Sub Topic together in the social media posts and hashtags, as in the URLs of the Web Entity, and linked them to each other.

- We have increased our related index count in Google with social media accounts.

- We have also recommended opening Medium, Reddit, and Trustpilot accounts.
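The organization structured data mentioned in the list above usually connects a domain to its social profiles through the schema.org `sameAs` property. The following is a minimal sketch; the brand name, URLs, and the `organization_jsonld` helper are placeholders for illustration only.

```python
import json


def organization_jsonld(name: str, url: str, profiles) -> str:
    """Build schema.org Organization JSON-LD that lists social profiles in sameAs."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # dict.fromkeys removes duplicate profile URLs while keeping their order.
        "sameAs": list(dict.fromkeys(profiles)),
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


# Placeholder brand and profile URLs.
org = organization_jsonld(
    "Example Brand",
    "https://www.example.com/",
    [
        "https://twitter.com/examplebrand",
        "https://www.youtube.com/examplebrand",
        "https://twitter.com/examplebrand",
    ],
)
print(org)
```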

Our list could be longer for this SEO case study, but we can't cover everything in just one article with SEO theories and practices. Also, I can't cover more topics in this article for the sake of readers and editorial standards.

We've worked with page layouts, the speed of providing information to the user, how Semantic HTML and Structured Data can be used together, how Site-Hierarchy can send signals to the Search Engine, and much more.

So, I need to ask one last question and try to answer it properly.

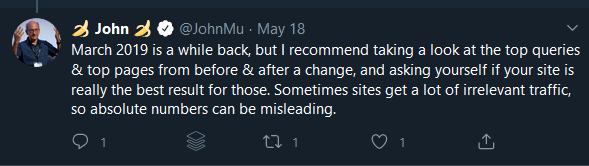

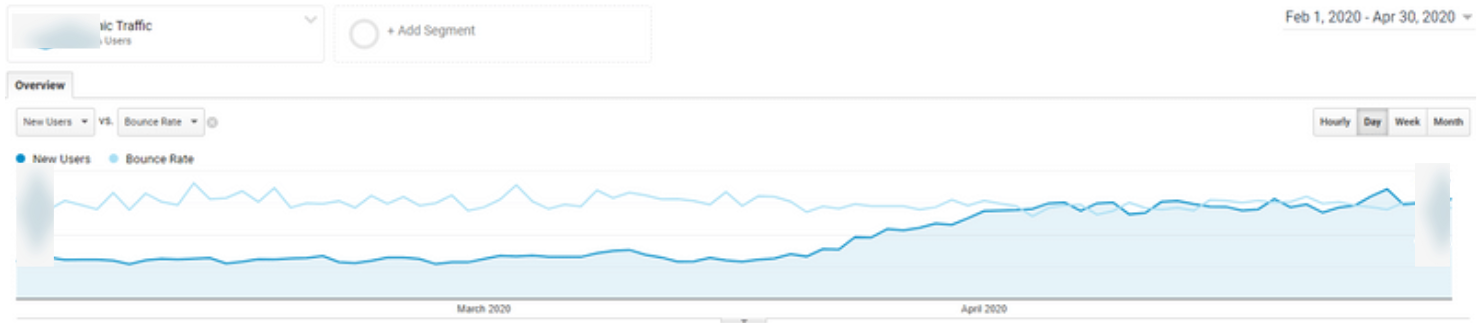

Can a Web Entity grow more than 190% in two months and lose in a Google Core Algorithm Update at the same time?

We will see the answer, along with its reasons, in the last article of this SEO Case Study.
