
What's the purpose of website log analysis

- shows how much crawling budget is lost and at which point;

- helps to identify and correctly configure 404, 500 errors and others;

- allows you to find pages that are rarely crawled or ignored by search engines;

- many other opportunities.
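The checks listed above can be sketched in a few lines of Python. This is only a minimal illustration, assuming the log has already been reduced to (URL, status code) pairs for search robot requests. The function name `summarize_crawl` and the sample data are hypothetical and not taken from a real log.

```python
from collections import Counter

def summarize_crawl(hits, known_urls):
    """Summarize robot hits.

    hits: iterable of (url, status) pairs taken from robot requests.
    known_urls: every URL that should be crawled (for example, from a sitemap).
    """
    hits = list(hits)
    status_counts = Counter(status for _, status in hits)
    crawl_counts = Counter(url for url, _ in hits)
    # Requests that end in a 4xx or 5xx response waste crawl budget.
    error_urls = sorted({url for url, status in hits if status >= 400})
    # Pages the robot never requested during the period.
    ignored = sorted(set(known_urls) - set(crawl_counts))
    return status_counts, crawl_counts, error_urls, ignored

# Hypothetical sample data.
sample_hits = [("/", 200), ("/blog/", 200), ("/", 200), ("/old-page/", 404), ("/api/", 500)]
sample_urls = ["/", "/blog/", "/old-page/", "/api/", "/pricing/"]

status_counts, crawl_counts, error_urls, ignored = summarize_crawl(sample_hits, sample_urls)
print(status_counts)
print(error_urls)
print(ignored)
```

Comparing the crawled URLs with a full URL list is what reveals pages that search engines ignore. The status counts show where the crawling budget is spent on errors.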

What is a website log file?

Why analyze the log?

How to get log files of a website

- Using your hosting provider's control panel. A file manager is included with several hosting platforms. Look for terms like "file management," "files," "file manager," and so on.

- FTP. You'll need to:

- Get the FTP address, login, and password

- Access the server through FTP
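The FTP route can also be scripted. Below is a minimal sketch using Python's standard `ftplib` module. The host, credentials, and the remote path `logs/access.log` are placeholders; the actual location of the log file depends on your hosting provider.

```python
from ftplib import FTP

def download_log(ftp, remote_path, local_path):
    """Download one file over an already connected FTP session."""
    with open(local_path, "wb") as local_file:
        # RETR streams the remote file in binary chunks to the callback.
        ftp.retrbinary("RETR " + remote_path, local_file.write)
    return local_path

def fetch_access_log(host, user, password, remote_path, local_path):
    """Connect, log in, download the log, and close the session."""
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        return download_log(ftp, remote_path, local_path)

# Example call (all values are placeholders):
# fetch_access_log("ftp.example.com", "login", "password",
#                  "logs/access.log", "access.log")
```

Keeping the download step separate from the connection step makes it easy to reuse one session for several log files.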

The most common mistakes

- Lack of a centralized log system. Even though you'll be receiving log data from a variety of sources, it's critical to centralize your logs to make maintenance easier.

- Analyzing logs only after a security breach. By monitoring logs on a regular basis, you can act proactively and potentially fix a security concern before it grows.

How a web server log file works

Suppose a visitor requests a URL such as https://your_site_address.com/example.html. The domain name server converts the server name to an IP address. The browser then sends an HTTP GET request for the page or file to the web server over the appropriate protocol, the server returns the HTML, and the browser interprets it to render the visible page on the screen. Each of these requests is recorded in the web server's log file.

To put it simply, the process looks like this: the visitor navigates to a page, and the browser passes the request to the server that hosts the website. In response, the server returns the requested page and then records the request in the log file.

To analyze how search engines crawl a website, export the log data and keep only the requests made by a robot, for instance, Googlebot. The most convenient way to do this is to filter by user agent and IP range.
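Filtering robot requests can be sketched as follows. This is a minimal example, assuming each request is available as an (IP, user agent) pair. The network `203.0.113.0/24` is a placeholder from the documentation address range, not a real Googlebot range; replace it with the ranges published by the search engine you are checking. The name `is_bot_request` is hypothetical.

```python
import ipaddress

# Placeholder network (documentation range), NOT a real crawler range.
ASSUMED_BOT_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]

def is_bot_request(ip, user_agent, token="Googlebot", networks=ASSUMED_BOT_NETWORKS):
    """Return True when the user agent names the robot and the IP is in a trusted range."""
    if token.lower() not in user_agent.lower():
        return False
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)

# Hypothetical sample requests.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
requests = [
    ("203.0.113.7", GOOGLEBOT_UA),                                # robot, trusted range
    ("198.51.100.9", GOOGLEBOT_UA),                               # claims to be the robot, wrong range
    ("203.0.113.8", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"),  # ordinary browser
]

bot_requests = [request for request in requests if is_bot_request(*request)]
print(bot_requests)
```

Checking the IP range as well as the user agent matters because any client can send a user agent string that imitates a search robot.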

The log file itself is raw information, a solid block of text. But with proper processing and analysis, it becomes a rich source of information.

Log file content and structure

- IP address of the request;

- date and time;

- geography;

- GET/POST method;

- requested URL;

- HTTP status code;

- browser (user agent).

See an example record that includes the data above:

111.11.111.111 - - [12/Oct/2018:01:02:03 -0100] "GET /resources/whitepapers/retail-whitepaper/ HTTP/1.1" 200 "-" "Opera/1.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
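A record like this can be split into named fields with a regular expression. The sketch below assumes the record uses standard double quotes and no extra spaces, and it treats the bytes field as optional because the sample record does not include one. The pattern and the name `parse_line` are illustrative; your server's log format may differ.

```python
import re
from datetime import datetime

LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ '                      # client IP, identity, user
    r'\[(?P<time>[^\]]+)\] '                     # timestamp in brackets
    r'"(?P<method>[A-Z]+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3})'                         # HTTP status code
    r'(?: (?P<bytes>\d+|-))?'                    # optional bytes field
    r' "(?P<referrer>[^"]*)" '
    r'"(?P<user_agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry

sample = (
    '111.11.111.111 - - [12/Oct/2018:01:02:03 -0100] '
    '"GET /resources/whitepapers/retail-whitepaper/ HTTP/1.1" 200 "-" '
    '"Opera/1.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)

entry = parse_line(sample)
print(entry["ip"], entry["method"], entry["url"], entry["status"])
```

Once each line is a dictionary, sorting and filtering by status code, URL, or user agent becomes straightforward.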

Additional attributes that are sometimes available include the following:

- host name;

- request/client IP address;

- loaded bytes;

- time spent.

WordPress log file export

Next, in the wp-config.php file, find the line: "That's all, stop editing! Happy blogging." Before it, add a new line of code:

define( 'WP_DEBUG', true );

Now enable error recording in the log file. To do this, add a new line right below the previous one:

define( 'WP_DEBUG_LOG', true );

Errors will now be written to the debug.log file in the wp-content directory. Copy its contents and transfer them to Excel for easier sorting. A month of data is usually used for analysis.
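Instead of copying the log by hand, you can convert it to a CSV file that Excel opens directly. The sketch below assumes each entry starts with a bracketed timestamp, as in "[12-Oct-2018 01:02:03 UTC] PHP Notice: ...". The sample lines and the name `log_to_csv` are hypothetical, so check the pattern against your own file first.

```python
import csv
import io
import re

# Assumed line shape: "[timestamp] message".
LINE_PATTERN = re.compile(r"\[(?P<timestamp>[^\]]+)\]\s*(?P<message>.*)")

def log_to_csv(lines, out_file):
    """Write (timestamp, message) rows to out_file and return the row count."""
    writer = csv.writer(out_file)
    writer.writerow(["timestamp", "message"])
    rows = 0
    for line in lines:
        match = LINE_PATTERN.match(line.strip())
        if match:  # lines without a leading timestamp are skipped
            writer.writerow([match["timestamp"], match["message"]])
            rows += 1
    return rows

# Hypothetical sample lines.
sample = [
    "[12-Oct-2018 01:02:03 UTC] PHP Notice: hypothetical message one",
    "[12-Oct-2018 01:05:00 UTC] PHP Warning: hypothetical message two",
]
buffer = io.StringIO()
row_count = log_to_csv(sample, buffer)
print(buffer.getvalue())

# With real files:
# with open("debug.log") as src, open("debug.csv", "w", newline="") as dst:
#     log_to_csv(src, dst)
```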

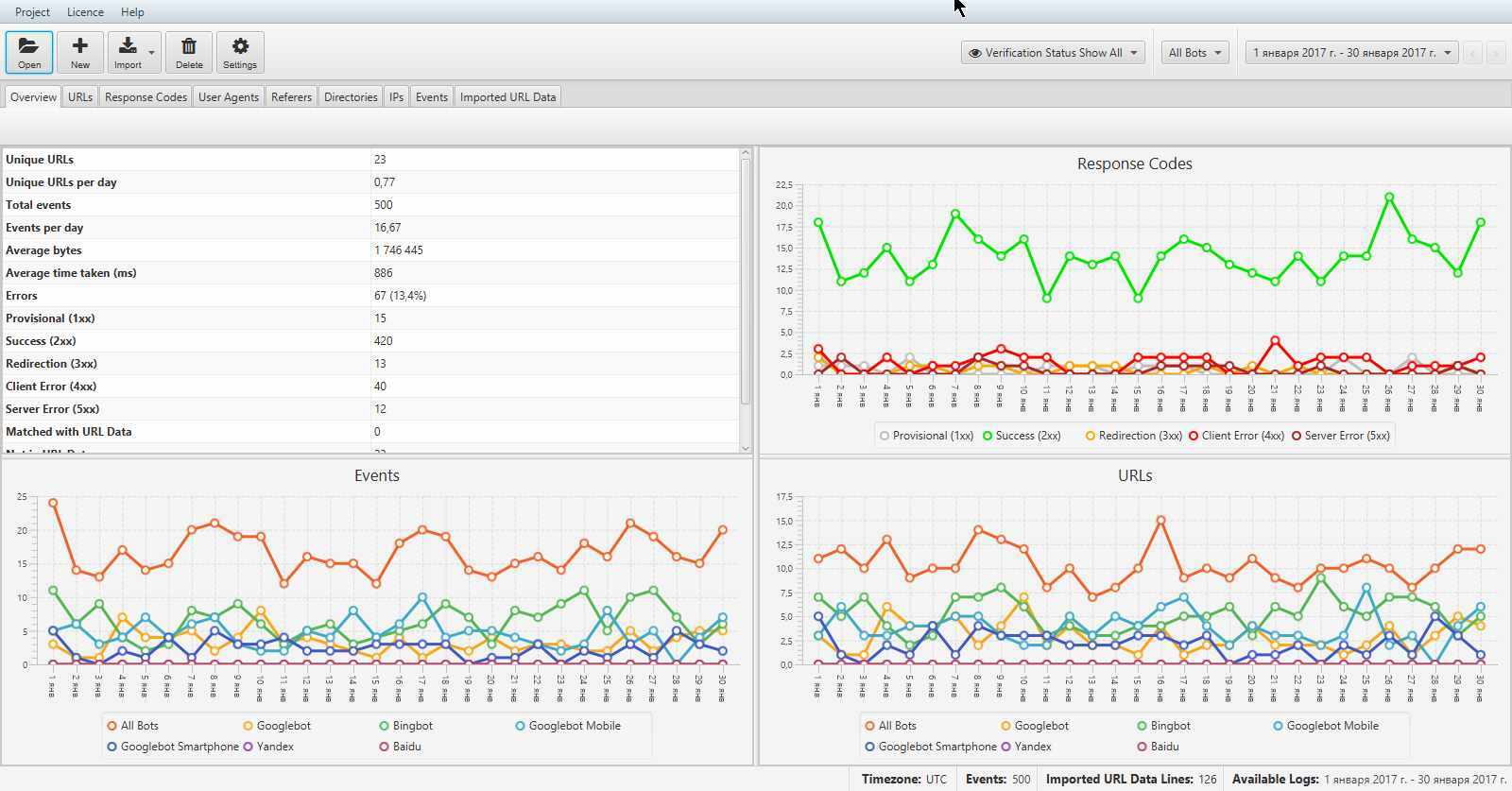

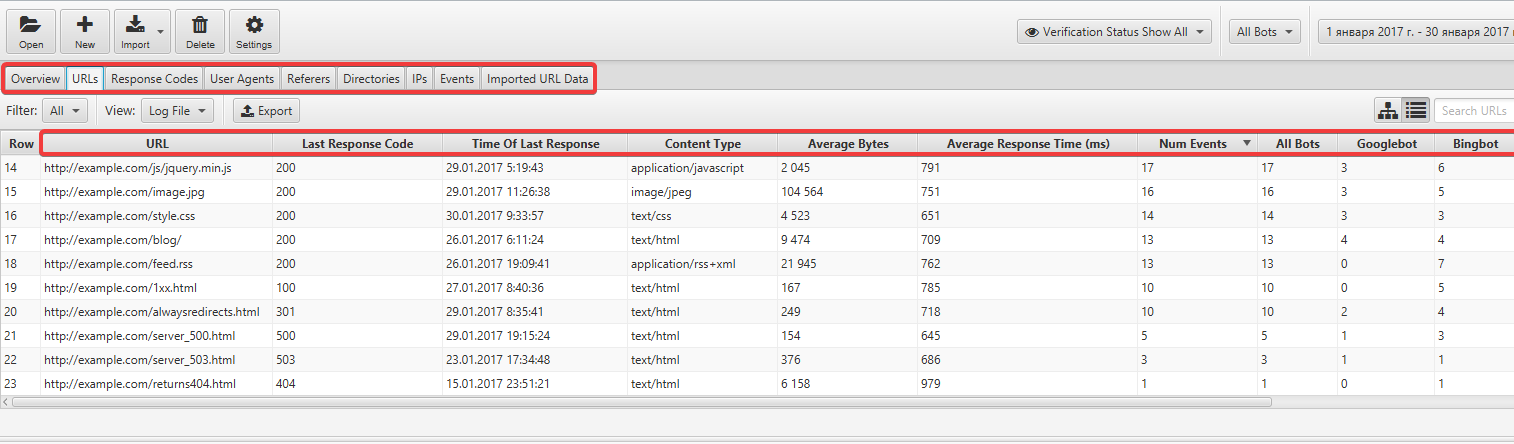

Screaming Frog Log File Analyzer

The tool has a free version that limits the event log to one thousand lines. For a small project, this amount will be enough.

Download and install the software on your computer, then upload the log files or make a list of all the URLs that are present on the website. The file export is described above in this article. Open the tool and create a new project using the New button on the top panel.

Here is a brief review of other analyzers.

GoAccess is designed for quick data analysis. Its main purpose is to let you view and analyze server logs in real time directly in the terminal, without using a browser.

Splunk lets you process up to 500 MB of data per day for free. This is a great way to collect, store, search, compare and analyze website logs.

Logmatic.io is a log analysis tool designed specifically to improve software performance. The focus is on program data, which includes logs. Currently, only a paid version is available.

Logstash is a free, open-source tool for managing events and logs. It can be used to collect, store, and analyze logs.

FAQ. Common questions about analyzing website logs

How do I find website logs?

Hosting log files are usually stored in the /var/log directory, where site_name.access_log is the site access log.

What do Web server logs show?

A web server log commonly includes request date and time, client IP address, requested page, bytes served, HTTP code, referrer, and user agent.

Conclusion

In most cases, the log file contains the following data:

- IP address of the request;

- date and time;

- geography;

- GET/POST method;

- requested URL;

- HTTP status code;

- browser (user agent).

To analyze a log file, sort and filter it manually in an Excel spreadsheet, or install Screaming Frog Log File Analyzer or a similar tool. There are also a number of tools that are installed directly on the web server. This option is suitable if you run your own servers.

