How A Web Entity Can Grow Traffic And Lose A Google Core Algorithm Update At The Same Time

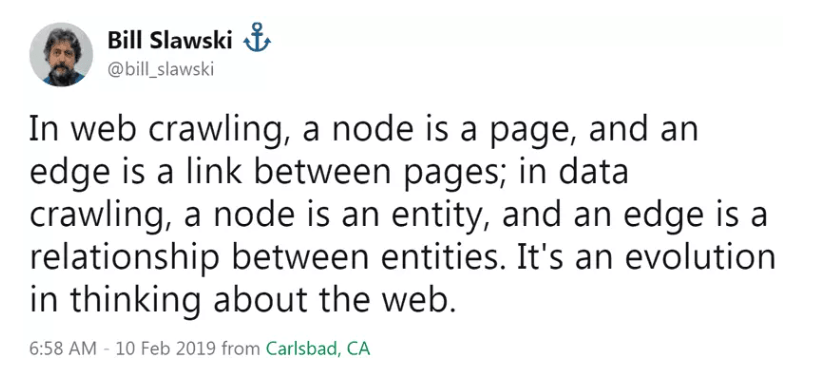

No one knows the exact number of Google Ranking Factors. But if Google has millions of baby algorithms, I am sure the number is far above 200. If, as SEOs, we want to win every Google Core Algorithm Update, we need to think about our Web Entity's situation in a more logical, analytical, and open-minded way.

When we ask all of these questions, we can understand why we couldn't win the Core Algorithm Update.

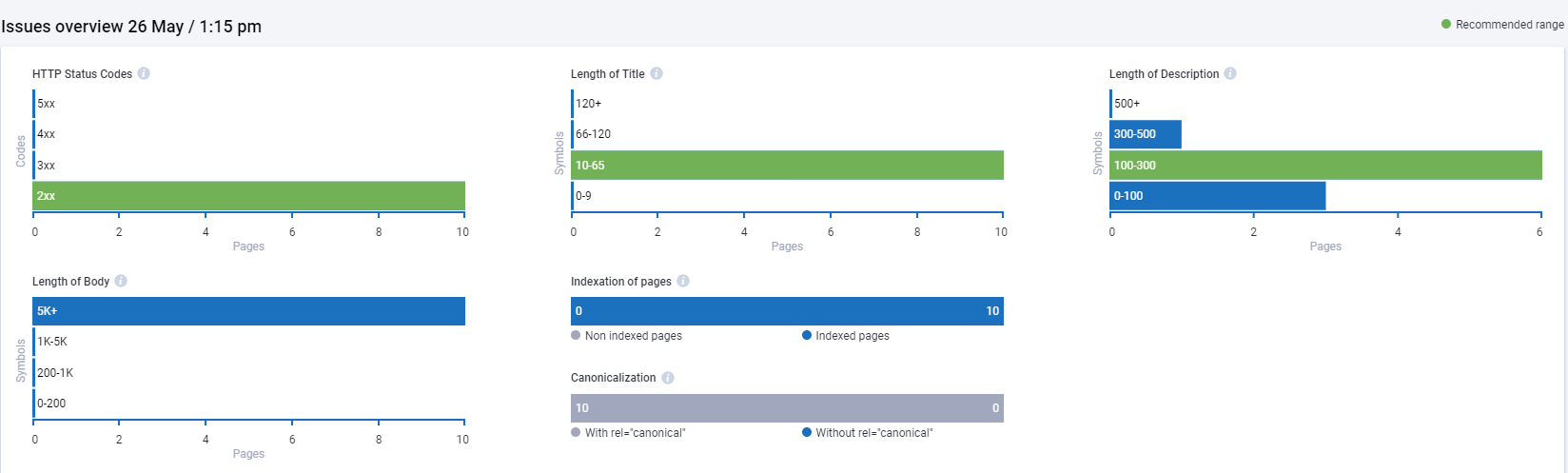

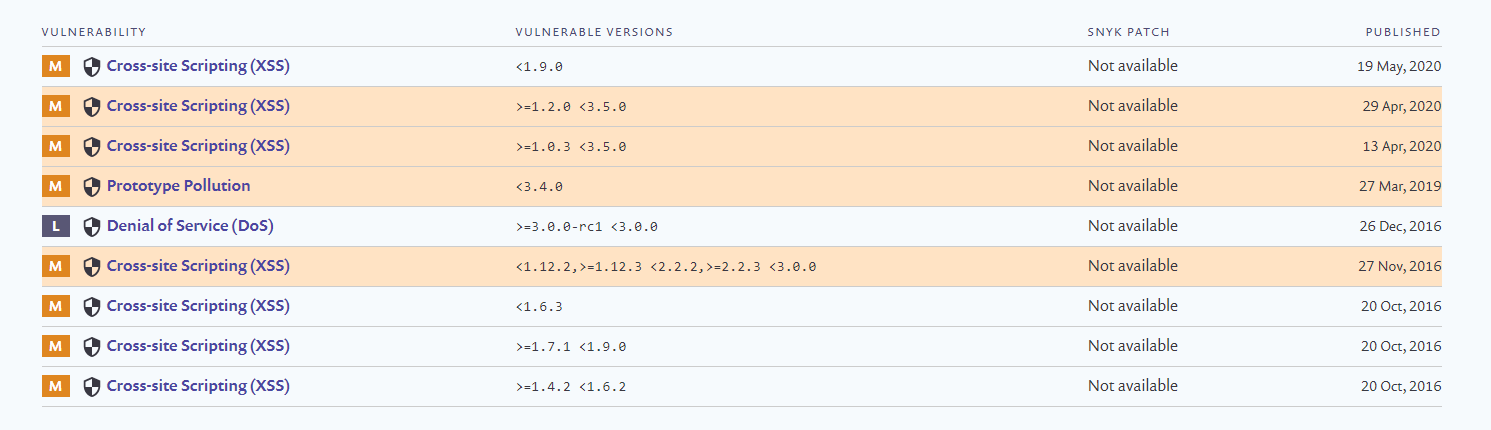

To help you imagine this situation, here is a list of the tasks we left undone for Unibaby.com.tr.

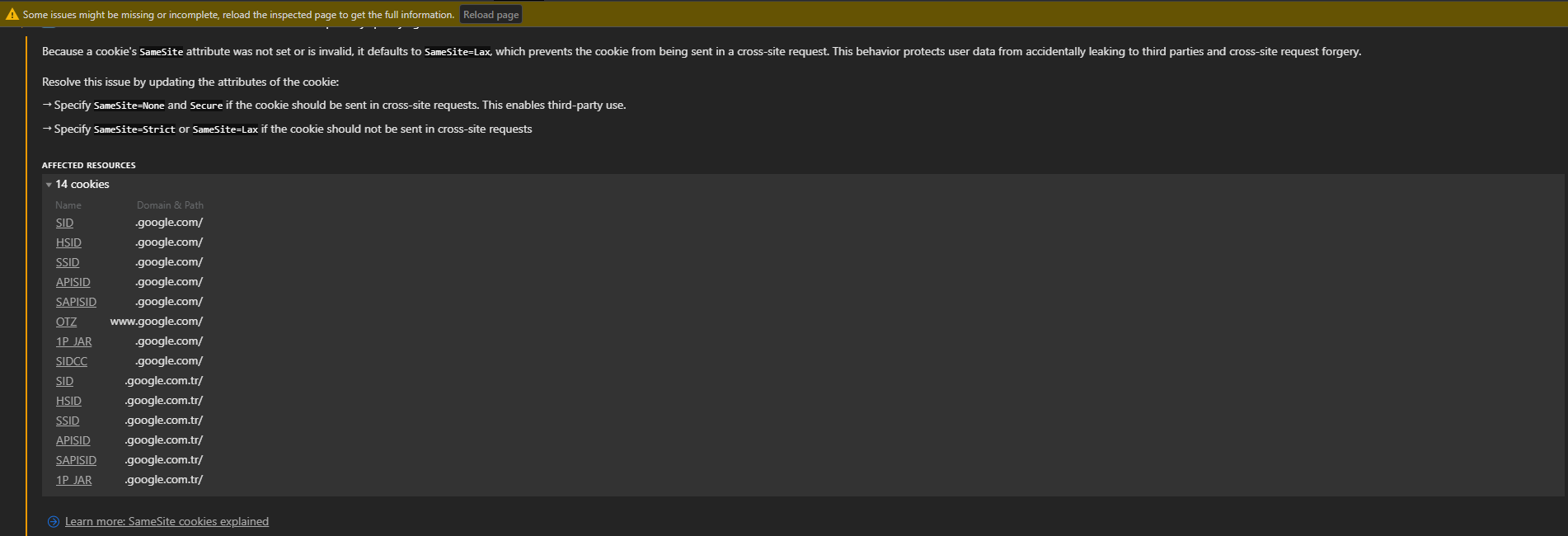

Furthermore, we have not optimized the above-the-fold section for a better user experience on every browser and device. You may find a Google patent and a Matt Cutts explanation from 2004 related to the above-the-fold section.

Ezgi Ergene,

Digital Marketing Specialist,

Eczacıbaşı Consumer Products

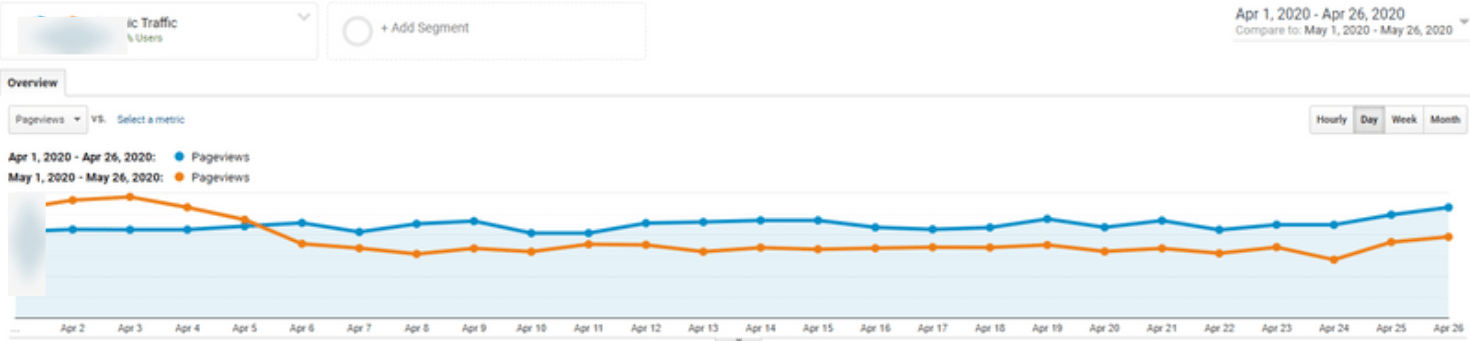

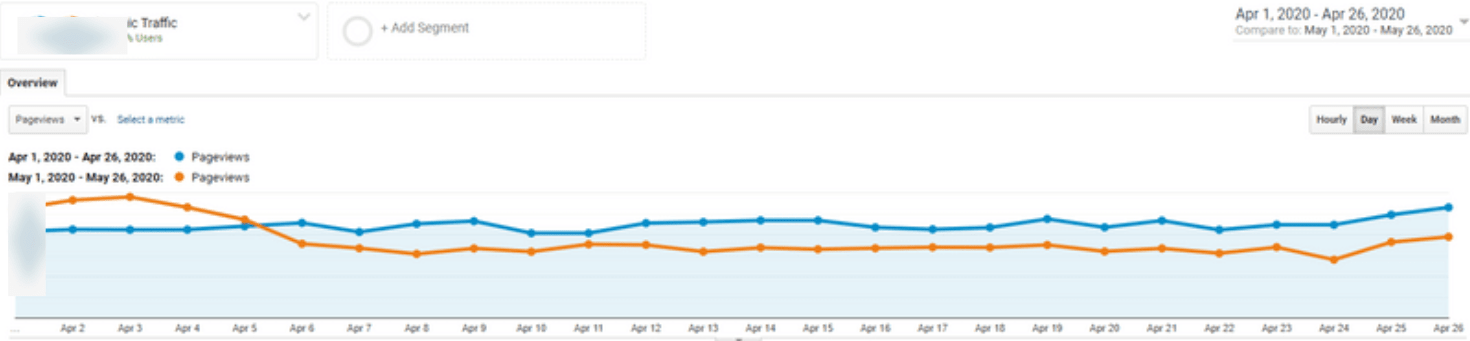

So, let me answer the magic question. After the Core Algorithm Update, did we lose traffic?

Yes, we lost around 10% of our monthly organic traffic after 180% organic traffic growth, and our growth stopped. Interestingly, most of our competitors also lost the Core Algorithm Update.
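This is how a site can grow traffic and lose a Core Algorithm Update at the same time. The following is a minimal worked sketch, assuming both percentages refer to monthly organic traffic, the 180% growth is measured against the pre-growth baseline $T_0$, and the 10% loss is measured against the pre-update peak $T_1$ (these symbols are illustrative, not figures from our reports):

```latex
\begin{aligned}
T_1 &= T_0\,(1 + 1.80) = 2.8\,T_0 && \text{(after 180\% growth)}\\
T_2 &= T_1\,(1 - 0.10) = 0.9 \times 2.8\,T_0 = 2.52\,T_0 && \text{(after a 10\% loss)}
\end{aligned}
```

Under these assumptions, traffic after the update is still about 152% above the original baseline, even though the update itself cost about 10% of peak traffic.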

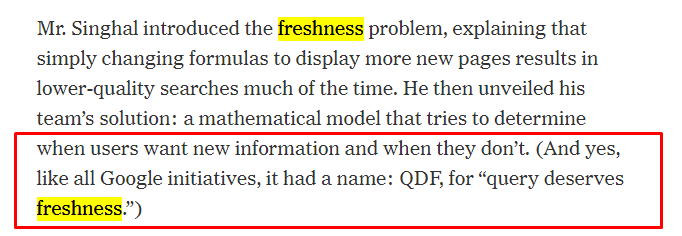

In this situation, Google changes its preferences in terms of Site Profiles: it starts to prefer websites with a different identity or from a different sector over others. Sometimes it also changes its preferences for page layout, content structure, or navigation structure.

Finally, if you are an SEO who knows the importance of thinking analytically, you should ask yourself two questions about Core Updates every day, as I mentioned in my previous SEO Case Studies:

- When will the next Google Core Algorithm Update be?

- What will the next Google Core Algorithm Update be about?

Stay safe.
