How To Use Serpstat API To Analyze A Niche And Hypothesize In SEO: WebX.page Experience

The approach described in this article was one of our first developments that let us look at a new niche as a whole, because it provides a fairly broad range of data. It was also fast and affordable, and it leaves room for enhancement. Let's go through the steps taken and see how it all works.

We'll use the Serpstat API to pull data from the getSummaryData report, since it returns about 30 active parameters (51 in total, but some are no longer active and will be removed in upcoming updates) and costs very few limits (1 per analyzed URL). Note that very large websites (Google, Wikipedia, YouTube, etc.) are not supported and return errors (we'll talk about it later).

If you want to learn more about my solution, take the following steps:

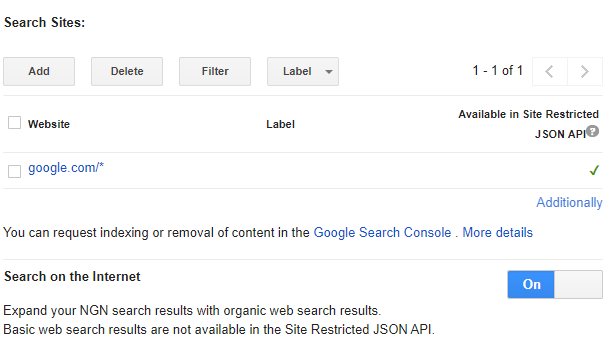

The basic settings in the console are pretty simple. There are only two main points you should pay attention to:

Here are the highlights for you if you're going to write your own solution:

At the time of writing, the getSummaryData report returns 51 parameters via the API, plus some general information: how many limits are left, how many pages the current results contain, what the sorting order is, and so on. This general information is of little importance for us.

Query example:

Endpoint: https://api.serpstat.com/v4/?token=YOUR_TOKEN_HERE

POST body:

    {
      "id": {{webx.page}},  /* id can be any set of letters and numbers; I usually use the domain of the working project */
      "method": "SerpstatBacklinksProcedure.getSummary",  /* the method used */
      "params": {
        "query": {{domain}}  /* the analyzed domain received in CSE before */
      }
    }
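If you're writing your own solution in R, the same request could be sketched roughly as follows. This is only an illustration: it assumes the jsonlite and httr packages are installed, the helper names `build_summary_body` and `fetch_summary` are hypothetical, and the token is a placeholder.

```r
library(jsonlite)

# Build the request body from the query example above.
# `request_id` can be any string; `domain` is the domain to analyze.
build_summary_body <- function(domain, request_id = "webx.page") {
  toJSON(
    list(
      id = request_id,
      method = "SerpstatBacklinksProcedure.getSummary",
      params = list(query = domain)
    ),
    auto_unbox = TRUE  # send scalars as "x", not ["x"]
  )
}

# Hypothetical helper: sends the request (needs a valid token and network access).
fetch_summary <- function(domain, token) {
  response <- httr::POST(
    url = "https://api.serpstat.com/v4/",
    query = list(token = token),
    body = as.character(build_summary_body(domain)),
    httr::content_type_json()
  )
  httr::stop_for_status(response)  # fail loudly on HTTP errors
  fromJSON(httr::content(response, as = "text", encoding = "UTF-8"))
}

# Building the body needs no network access:
body <- build_summary_body("example.com")
print(body)
```

Keeping the body builder separate from the network call makes it easy to inspect what will be sent before spending any limits.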

Here's an example of the response received (outdated fields removed, only the useful ones kept):

    {
      "id": "webx.page",
      "result": {
        "data": {
          "referringDomains": 320,
          "referringSubDomains": 30,
          "referringLinks": 153445,
          "totalIndexed": 1591,
          "externalDomains": 321,
          "noFollowLinks": 21219,
          "doFollowLinks": 219879,
          "referringIps": 71,
          "referringSubnets": 57,
          "outlinksTotal": 50330,
          "outlinksUnique": 525,
          "typeText": 240651,
          "typeImg": 447,
          "typeRedirect": 0,
          "typeAlt": 0,
          "referringDomainsDynamics": 1,
          "referringSubDomainsDynamics": 0,
          "referringLinksDynamics": 210,
          "totalIndexedDynamics": 0,
          "externalDomainsDynamics": 0,
          "noFollowLinksDynamics": 2,
          "doFollowLinksDynamics": 1009,
          "referringIpsDynamics": 2,
          "referringSubnetsDynamics": 1,
          "typeTextDynamics": 1011,
          "typeImgDynamics": 0,
          "typeRedirectDynamics": 0,
          "typeAltDynamics": 0,
          "threats": 0,
          "threatsDynamics": 0,
          "mainPageLinks": 393,
          "mainPageLinksDynamics": 0,
          "domainRank": 26.006519999999998
        }
      }
    }

For starters, the 1500 queries available in the cheapest pricing plan are quite enough: they give you detailed information on 75 keywords (20 search results for each).
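A small sketch of how such a response could be turned into one table row in R, assuming the jsonlite package. The JSON string is a shortened copy of the response above, and the variable names are illustrative. The last lines simply redo the limit arithmetic.

```r
library(jsonlite)

# Shortened copy of the response shown above (only a few fields kept).
response_text <- '{"id":"webx.page","result":{"data":{
  "referringDomains":320,"referringLinks":153445,
  "totalIndexed":1591,"domainRank":26.006519999999998}}}'

parsed <- fromJSON(response_text)

# Each field of result$data becomes a column; one analyzed URL gives one row.
summary_row <- as.data.frame(parsed$result$data)
summary_row$request_id <- parsed$id
print(summary_row)

# Limit budget: 1 limit per analyzed URL, 20 search results per keyword.
queries_in_plan <- 1500
results_per_keyword <- 20
keywords_covered <- queries_in_plan / results_per_keyword
print(keywords_covered)
```

Rows built this way can be stacked with `rbind()` before being written to a database table.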

There are pre-built solutions (Power BI, Tableau, etc.), but they are mostly paid and not fully customizable. Besides, it's much more interesting to do everything yourself while keeping the time spent to a minimum.

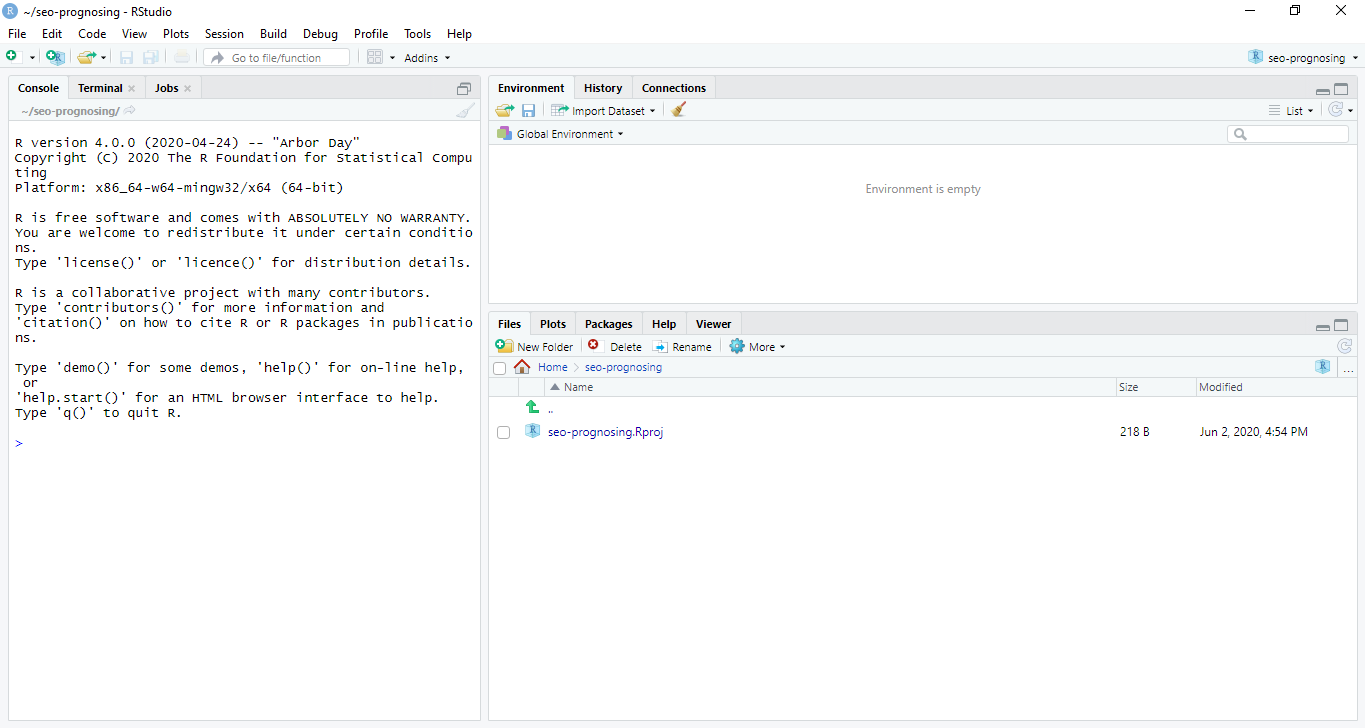

The RStudio interface may seem a bit bulky at first glance. That feeling should vanish right after you build your first graph on your own :)

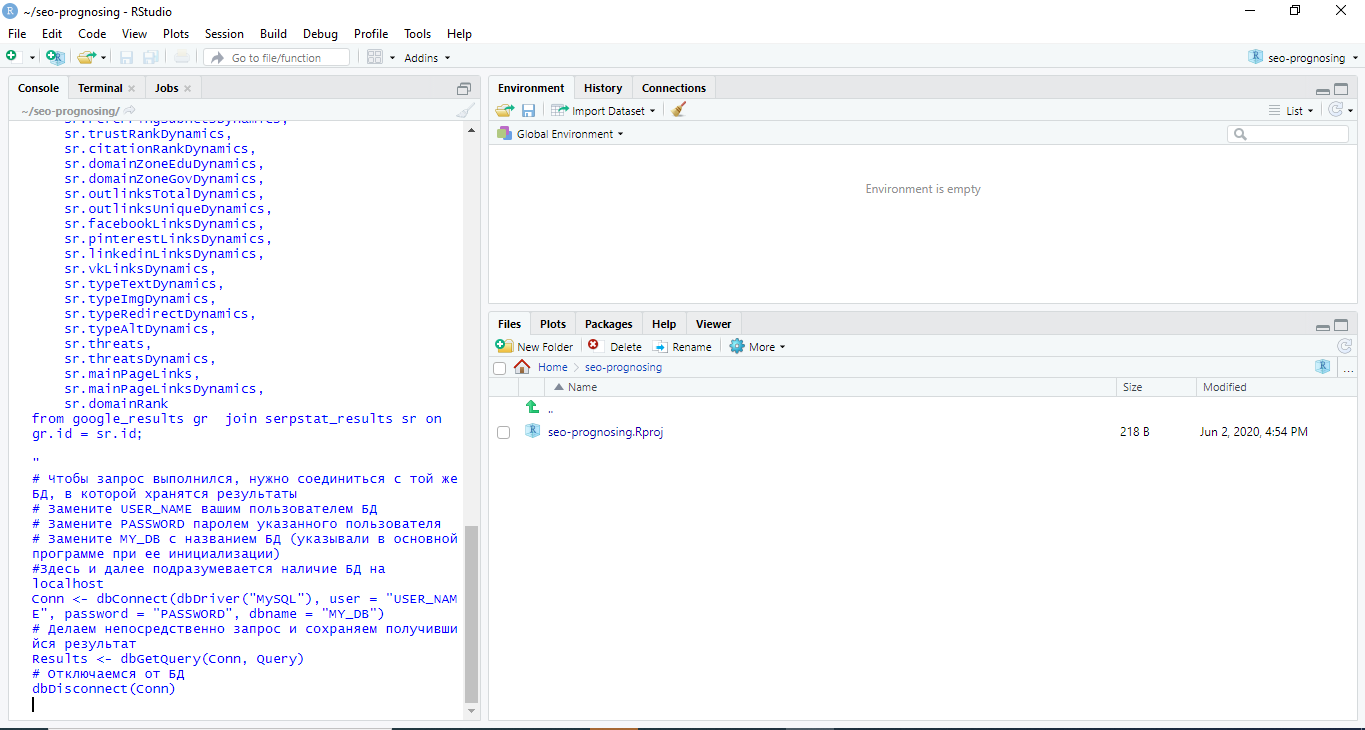

To avoid dealing with a third database, let's perform a join (join on id, since linking on other fields may cause duplicate-related errors). To work with SQL databases from R, you need to install the additional RMySQL package. Let's call the combined dataset 'Results'.

The first script will look like this:

    install.packages('RMySQL')
    library(RMySQL)

    # write a query
    # gr - google_results, sr - serpstat_results
    # note that to avoid duplicating the columns, you should clearly
    # specify which columns you are using from serpstat_results
    Query <- "
    select
      gr.*,
      sr.referringDomains,
      sr.referringSubDomains,
      sr.referringLinks,
      sr.totalIndexed,
      sr.externalDomains,
      sr.noFollowLinks,
      sr.doFollowLinks,
      sr.referringIps,
      sr.referringSubnets,
      sr.trustRank,
      sr.citationRank,
      sr.domainZoneEdu,
      sr.domainZoneGov,
      sr.outlinksTotal,
      sr.outlinksUnique,
      sr.facebookLinks,
      sr.pinterestLinks,
      sr.linkedinLinks,
      sr.vkLinks,
      sr.typeText,
      sr.typeImg,
      sr.typeRedirect,
      sr.typeAlt,
      sr.referringDomainsDynamics,
      sr.referringSubDomainsDynamics,
      sr.referringLinksDynamics,
      sr.totalIndexedDynamics,
      sr.externalDomainsDynamics,
      sr.noFollowLinksDynamics,
      sr.doFollowLinksDynamics,
      sr.referringIpsDynamics,
      sr.referringSubnetsDynamics,
      sr.trustRankDynamics,
      sr.citationRankDynamics,
      sr.domainZoneEduDynamics,
      sr.domainZoneGovDynamics,
      sr.outlinksTotalDynamics,
      sr.outlinksUniqueDynamics,
      sr.facebookLinksDynamics,
      sr.pinterestLinksDynamics,
      sr.linkedinLinksDynamics,
      sr.vkLinksDynamics,
      sr.typeTextDynamics,
      sr.typeImgDynamics,
      sr.typeRedirectDynamics,
      sr.typeAltDynamics,
      sr.threats,
      sr.threatsDynamics,
      sr.mainPageLinks,
      sr.mainPageLinksDynamics,
      sr.domainRank
    from google_results gr join serpstat_results sr on gr.id = sr.id
    ;
    "

The big advantage of R in this case is that you have both the source object ('Results', which contains the data imported from the database) and a new one (df, a data frame with the same results), and you can use either of them as needed. Now nothing prevents you from running the analysis.
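For reference, here is a minimal sketch of how the 'Results' and df objects could be created from the query string. It assumes the DBI and RMySQL packages and a reachable MySQL server. The credentials are placeholders and the helper name `load_results` is hypothetical, so treat it as an illustration rather than the exact code used here.

```r
# Run a query on an open DBI connection and return the rows as a data frame.
# (Hypothetical helper; DBI::dbGetQuery does the actual work.)
load_results <- function(con, query) {
  DBI::dbGetQuery(con, query)
}

# Usage with placeholder credentials, left commented out so the sketch
# can be sourced without a database:
# con <- DBI::dbConnect(RMySQL::MySQL(), user = "USER", password = "PASSWORD",
#                       dbname = "DB_NAME", host = "localhost")
# Results <- load_results(con, Query)
# df <- Results              # working copy; 'Results' stays untouched
# DBI::dbDisconnect(con)
```

Working on the df copy means you can always go back to 'Results' without querying the database again.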

I'm also not going to describe the basic syntax (it can easily be googled or found in the official documentation) or the mathematical models applied. If you have gaps in basic math, it's worth filling them or taking a relevant course. Or do as I did and ask a data scientist to explain the parts you don't understand :)

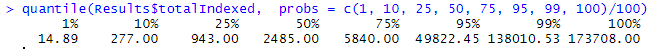

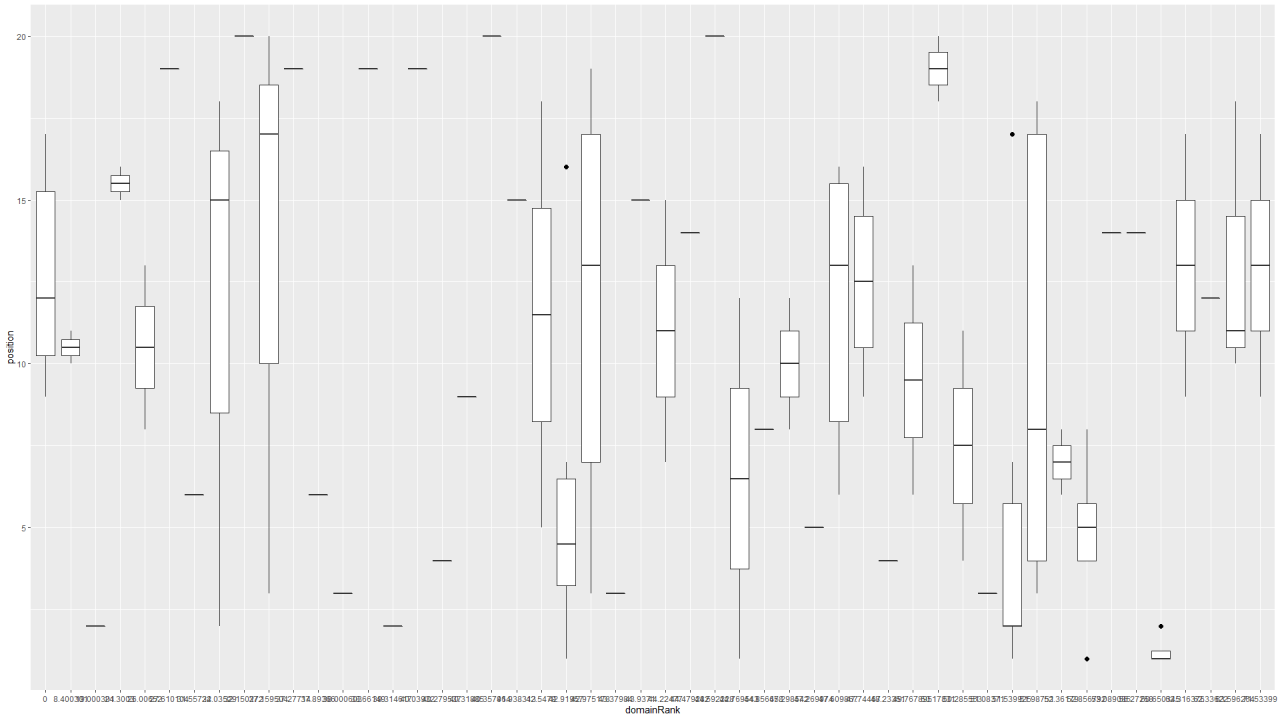

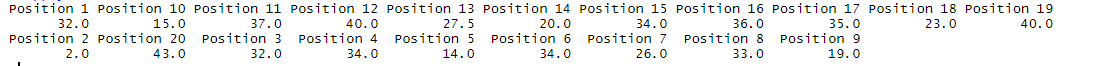

For instance, you can build a graph of SERP positions for the selected keywords and look at the number of incoming links to the domains, accumulated for each position (remember the Serpstat restrictions mentioned earlier? This is where the data may be slightly inaccurate).

    library(plotly)
    pg <- plot_ly(df, x = ~position, y = ~referringLinks, type = 'bar')
    pg
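If you want the per-position totals as numbers rather than only on a chart, a base R sketch like the one below could be used. The data frame is made up for illustration, and only the column names follow the ones used in this article.

```r
# Toy data standing in for the joined table (values are made up).
toy_df <- data.frame(
  position = c(1, 1, 2, 2, 3),
  referringLinks = c(100, 50, 30, 20, 10)
)

# Sum the referring links of all domains found at each position.
links_by_position <- aggregate(referringLinks ~ position, data = toy_df, FUN = sum)
print(links_by_position)
```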

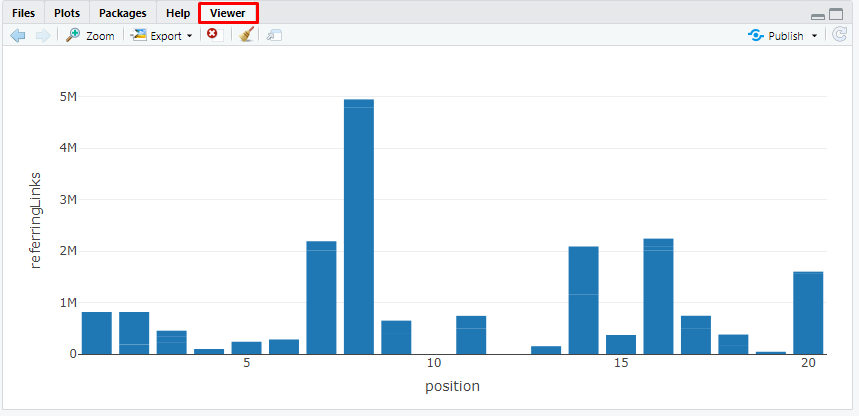

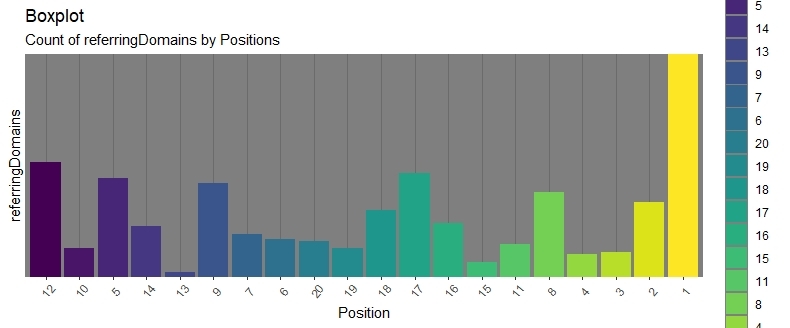

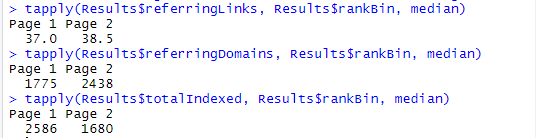

For instance, tapply lets you view the median number of backlinks, unique referring domains, and indexed pages for the domains listed on the first and second pages of the SERP:
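A minimal sketch of such a tapply call on made-up data. It assumes 10 results per SERP page, so positions 1-10 count as the first page and 11-20 as the second. The `page` column is a helper introduced only for this example.

```r
# Toy data standing in for the joined table (values are made up).
toy_df <- data.frame(
  position = c(1, 5, 10, 11, 15, 20),
  referringLinks = c(900, 500, 100, 80, 60, 10),
  referringDomains = c(90, 50, 10, 8, 6, 1),
  totalIndexed = c(3000, 2000, 1000, 800, 600, 400)
)

# Assumption: positions 1-10 are the first results page, 11-20 the second.
toy_df$page <- ifelse(toy_df$position <= 10, "page 1", "page 2")

# Median of each metric within each page group.
median_links   <- tapply(toy_df$referringLinks,   toy_df$page, median)
median_domains <- tapply(toy_df$referringDomains, toy_df$page, median)
median_indexed <- tapply(toy_df$totalIndexed,     toy_df$page, median)
print(rbind(median_links, median_domains, median_indexed))
```

The median is less sensitive than the mean to a single domain with an unusually large backlink profile, which is common in SERP data.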

But links are not the only important thing. Serpstat has an interesting parameter — domainRank (we might never learn how it is calculated), which can be used to hypothesize in different ways.

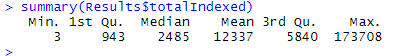

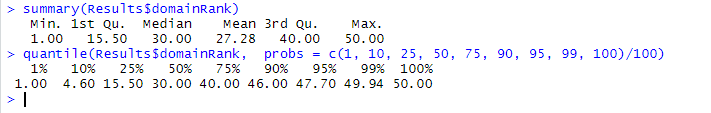

First, let's check if the data have been received correctly and we can deal with them:

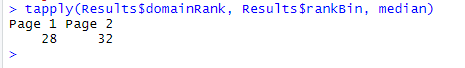
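A quick sanity check of this kind could be sketched in base R as follows. The data is made up, and the missing value only illustrates the assumption that a domain the API could not analyze ends up stored as NA.

```r
# Toy data; the NA stands for a domain with no domainRank (assumption).
toy_df <- data.frame(
  position = c(1, 2, 3),
  domainRank = c(26.0, NA, 12.5)
)

str(toy_df)                   # column names and types
summary(toy_df$domainRank)    # range, quartiles, and NA count

# Count the rows that have no domainRank.
missing_rank <- sum(is.na(toy_df$domainRank))
print(missing_rank)
```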

And let's check the basic information with tapply:

It means that there are many active competitors beyond the top 10, and they're increasing their rate to be ready to get into it.

Let's check the same thing but in terms of positions, not the whole pages. It'll help to make sure the calculations are right.
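Both views can be sketched with tapply on made-up data: first grouped by page, then by individual position. The `page` column and the 10-results-per-page split are assumptions made for this example.

```r
# Toy data standing in for the joined table (values are made up).
toy_df <- data.frame(
  position = c(1, 1, 2, 11, 11, 12),
  domainRank = c(40, 30, 20, 25, 15, 10)
)

# Assumption: positions 1-10 are the first results page, 11-20 the second.
toy_df$page <- ifelse(toy_df$position <= 10, "page 1", "page 2")

# Median domainRank per page, then per individual position.
rank_by_page <- tapply(toy_df$domainRank, toy_df$page, median)
rank_by_position <- tapply(toy_df$domainRank, toy_df$position, median)
print(rank_by_page)
print(rank_by_position)
```

If the per-position medians point in the same direction as the per-page ones, the page-level picture is less likely to be an artifact of the grouping.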

After providing access to the data by a particular link, you can make more accurate predictions (e.g., calculate the median of backlinks to get to the first position) that will have a good impact on work. Or you can study the rest of the indicators to detect some other patterns.
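As an illustration of the prediction mentioned above, the median number of backlinks among domains in the first position could be computed like this (made-up data, column names as in this article):

```r
# Toy data standing in for the joined table (values are made up).
toy_df <- data.frame(
  position = c(1, 1, 1, 2, 2),
  referringLinks = c(1000, 400, 700, 300, 100)
)

# Keep only the domains ranked first, then take the median of their backlinks.
first_place_links <- toy_df$referringLinks[toy_df$position == 1]
median_first_place <- median(first_place_links)
print(median_first_place)
```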

What's important is that you get to use powerful SEO tools with minimal time and resources spent (you only pay for a Serpstat API plan). This also serves as a base onto which other, more extensive data can be layered gradually.

It's worth remembering that search engine ranking algorithms change all the time, both in general and in separate niches (just remember the medicine update), so you should be able to keep up with the changes. Analyzing data from different sources lets you do that in time, and even in advance.

May all of you have high positions, no sanctions, and good user behavior on your websites! :)
