
AI 101: Technology's Trending Glossary

Are you curious about artificial intelligence? Keeping up with the latest terminology and concepts can be overwhelming as the field continues to evolve and expand. With numerous articles and publications discussing AI, it's easy to get lost in the jargon and miss the vital context. That's why the Serpstat team has created an AI glossary to help you expand your knowledge and understand the latest terms and concepts in one go. We hope you find our glossary informative and enjoyable to read!

Definition

What it means

Adversarial attack

An adversarial attack attempts to deceive a machine-learning model into making a mistake. This can be done by creating a modified version of an input designed to fool the model into making a wrong prediction.

Adversarial attacks can be very difficult to defend against and can undermine the reliability of machine learning models in various applications. It is important to be aware of the risks they pose and to take steps to defend against them.
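To make the idea of a "modified input" concrete, here is a minimal sketch of one well-known attack style, the fast gradient sign method, applied to a tiny logistic-regression classifier. The weights, the input, and the `epsilon` step size are all made-up values chosen for illustration, not taken from any real model.

```python
import math

def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def fgsm_perturb(weights, x, true_label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    # For logistic regression the gradient of the log-loss with respect to x
    # is (p - y) * w, so its sign is -sign(w) when y = 1 and sign(w) when y = 0.
    direction = -1.0 if true_label == 1 else 1.0
    sign = lambda v: (v > 0) - (v < 0)
    return [xi + epsilon * direction * sign(w) for w, xi in zip(weights, x)]

weights, bias = [2.0, -1.0, 0.5], 0.0   # hypothetical trained model
x = [0.4, 0.2, 0.1]                      # input the model assigns to class 1
x_adv = fgsm_perturb(weights, x, true_label=1, epsilon=0.3)

print(round(predict(weights, bias, x), 3))      # confidence before the attack
print(round(predict(weights, bias, x_adv), 3))  # confidence after the attack
```

A small, targeted change to every feature is enough to push this toy model's prediction across the 0.5 decision boundary.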

AI planning

A subfield of AI that creates action sequences for intelligent agents, robots, and unmanned vehicles.

AI planning involves developing algorithms and techniques that generate optimal plans for a given problem or scenario. This typically involves analyzing and processing large amounts of data and identifying the best course of action in an unpredictable environment. Applications of AI planning include robotic control, logistics optimization, and resource allocation in various industries.

Artificial General Intelligence (AGI)

The development of intelligent machines that exhibit general cognitive abilities and can comprehend the world around them from the perspective of a human.

AGI would allow machines to understand, reason, and act in the same way that humans do. Achieving this form of AI requires advances in technologies such as natural language processing, image recognition, voice control, decision-making, and problem-solving.

Artificial immune system (AIS)

It is a category of rule-based machine learning systems that draws inspiration from the processes and principles of the vertebrate immune system.

These algorithms are designed to imitate the learning and memory capabilities of the immune system and are commonly used for problem-solving purposes.

Artificial Neural Networks (ANN)

Any computing system that draws inspiration from biological neural networks.

ANN or connectionist systems aim to simulate how the brain processes information and learns from experience by using interconnected nodes or "neurons" to analyze and classify data. These systems are commonly used in applications such as image and speech recognition, natural language processing, and predictive analytics.


Bag-of-words model

A model used in NLP (natural language processing) and IR (information retrieval) that represents text as a collection of words with no regard for grammar or word order.

The bag-of-words model is a common technique for extracting text features for classification, clustering, and other NLP tasks. It involves creating a histogram of word occurrences in a given document. Although the model is simple, it can be effective for tasks like sentiment analysis, topic modeling, and spam filtering.
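The word histogram described above can be sketched in a few lines. This is a minimal illustration using only the standard library; the `bag_of_words` helper and the sample sentences are hypothetical.

```python
import re
from collections import Counter

def bag_of_words(text):
    """Count word occurrences, ignoring case, punctuation, and word order."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words)

doc_a = "The cat sat on the mat"
doc_b = "On the mat the cat sat"

print(bag_of_words(doc_a))
# Word order is discarded, so both sentences yield the same bag.
print(bag_of_words(doc_a) == bag_of_words(doc_b))
```

Because the two sentences contain the same words, their bags are identical, which is exactly the loss of grammar and order the definition mentions.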

BERT

(Bidirectional Encoder Representations from Transformers)

BERT is an NLP model with a particular architecture (a Transformer encoder) and a specific training procedure: it is pre-trained on large amounts of unlabeled text and then fine-tuned on task-specific datasets.

BERT is a powerful language model that can understand the context and meaning of words in sentences, paragraphs, and documents. This technology has become widely adopted in the NLP community and has achieved state-of-the-art performance on various benchmarks.


Capsule neural network, CapsNet

It is a type of artificial neural network (ANN) designed to model hierarchical relationships in a more biologically inspired way. This approach is based on "capsules", which are groups of neurons collectively representing a specific feature or object in an image or other input data.

In a CapsNet, each capsule represents a particular input aspect, such as a specific object or feature. The approach is based on "dynamic routing," which allows information to be passed between capsules in a way that more closely resembles biological neural organization. This technique has shown promise in various image and video recognition tasks and has been the subject of ongoing research in the machine-learning community.

Cognitive Computing

A type of AI that mimics human cognitive processes such as perception, reasoning, and problem-solving.

Cognitive computing is a subset of artificial intelligence that focuses on creating machines that think and reason like humans. Cognitive computing aims to create more natural and intuitive interactions between humans and machines, such as through natural language processing and conversational interfaces. Applications of cognitive computing include healthcare, finance, and customer service.

Computer Vision

An interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos.

Computer vision is a field of study that focuses on creating algorithms and systems that can analyze, interpret, and understand digital images and videos. This involves applying computer science, artificial intelligence, and cognitive psychology techniques to create machines that can "see" and understand the visual world. Computer vision applications include object recognition, facial recognition, and autonomous driving.

Conversational AI

A technology used to create chatbots and virtual assistants for various use cases. These systems can be integrated into messaging platforms, social media, SMS, and websites to provide users with a natural language interface for interacting with software and services.

Conversational AI systems can be designed to understand and respond to various user inputs, such as text, voice, and gestures. They are typically built using machine learning algorithms and can be trained on large datasets of human language to improve their performance over time. Conversational AI platforms often provide developer APIs allowing third parties to extend and customize the system for their needs.

Convolutional Neural Network (CNN)

A class of deep neural networks mostly used for analyzing visual imagery.

A convolutional neural network is a model commonly used for image recognition and processing. CNNs can learn to recognize features within images through convolution and pooling. The architecture typically consists of multiple layers of neurons trained to identify and classify objects within an image.
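The two operations named above, convolution and pooling, can be shown on a tiny image without any framework. This is only a sketch: the 5x5 image and the hand-written edge kernel are invented for illustration, whereas a real CNN learns its kernels during training. As in most deep learning libraries, the "convolution" here does not flip the kernel.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    output = []
    for r in range(len(image) - k_rows + 1):
        row = []
        for c in range(len(image[0]) - k_cols + 1):
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(k_rows) for j in range(k_cols)))
        output.append(row)
    return output

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]

# A dark-to-bright vertical edge between the second and third columns.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]   # responds to left-to-right brightening

features = convolve2d(image, edge_kernel)
pooled = max_pool(features)
print(features[0])
print(pooled)
```

The feature map lights up only where the edge sits, and pooling shrinks the map while keeping that strong response.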


Data augmentation

Data augmentation is a technique used in machine learning to increase a dataset's size artificially.

Data augmentation can improve the performance of machine learning models by reducing overfitting and providing more training data for the model to learn from. It can be a powerful tool for improving the performance of ML models. However, it is important to use it carefully to avoid introducing bias into the model.
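As a rough sketch of how a dataset grows under augmentation, the snippet below adds a mirrored copy and a slightly noisy copy of each image. The helper names, the tiny 2x3 "image", and the noise scale are assumptions made for this example.

```python
import random

def horizontal_flip(image):
    """Mirror each row of a 2-D image (a list of pixel rows)."""
    return [row[::-1] for row in image]

def add_noise(image, scale, rng):
    """Add small Gaussian noise to every pixel."""
    return [[pixel + rng.gauss(0, scale) for pixel in row] for row in image]

def augment(dataset, rng):
    """Return the original examples plus a flipped and a noisy copy of each."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((horizontal_flip(image), label))
        augmented.append((add_noise(image, 0.05, rng), label))
    return augmented

rng = random.Random(0)
dataset = [([[0.1, 0.5, 0.9], [0.2, 0.6, 1.0]], "cat")]
augmented = augmented_dataset = augment(dataset, rng)
print(len(dataset), "->", len(augmented))
```

The caution about bias applies here too: a transformation must keep the label valid. Mirroring a photo of a cat is safe, but mirroring a handwritten character can turn it into a different one.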

Data Drift

A change in the distribution of input data over time. It is also known as covariate shift.

Data drift can result from changes in seasonality, shifting consumer preferences, the addition of new products, and similar factors. It can have a significant impact on machine learning model performance: accuracy, precision, recall, and overall effectiveness may decrease as live data moves away from the data the model was trained on. Left unaddressed, drift can cause a model to fail.
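One simple way to check whether a feature's distribution has changed is to compare a training sample with a recent sample. The sketch below uses the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical distribution functions); the synthetic data and the variable names are assumptions for illustration, and real monitoring would also need a threshold suited to the sample size.

```python
import random
from bisect import bisect_right

def ks_statistic(sample_a, sample_b):
    """Largest gap between the two empirical cumulative distributions."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for value in a + b:
        cdf_a = bisect_right(a, value) / len(a)
        cdf_b = bisect_right(b, value) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

rng = random.Random(0)
training = [rng.gauss(50, 10) for _ in range(300)]      # data seen at training time
live_stable = [rng.gauss(50, 10) for _ in range(300)]   # same distribution
live_drifted = [rng.gauss(65, 10) for _ in range(300)]  # mean has shifted

print(round(ks_statistic(training, live_stable), 3))
print(round(ks_statistic(training, live_drifted), 3))
```

A statistic near 0 suggests the two samples look alike, while a value closer to 1 signals that the live data has moved away from the training data.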

Data Extraction

The process of collecting or retrieving disparate types of data from various sources, many of which may be poorly organized or completely unstructured.

A data extraction tool retrieves information from multiple sources, such as relational databases, SaaS applications, legacy systems, web pages, and unstructured data files (such as PDFs or text) to analyze, manipulate, or store information. This can include web scraping, text mining, and other methods to extract and transform data into a usable format for analysis.

Data Mining

Discovering patterns in large data sets using methods from machine learning, statistics, and database systems.

Data mining aims to discover useful information from large amounts of data that would be difficult or impossible to identify through manual analysis. It can help businesses and organizations make better decisions by uncovering patterns and trends that would go unnoticed.

Data Science

An interdisciplinary field that uses scientific methods, algorithms, and systems to extract knowledge and insights from structured and unstructured data.

Data science aims to uncover hidden patterns, correlations, and trends in large datasets and to use this information to make data-driven decisions. This involves collecting, cleaning, processing, and analyzing data using various techniques, including statistical analysis, machine learning, data mining, and data visualization.

Data Set

A collection of data. Most commonly, a data set corresponds to the contents of a single database table or statistical data matrix, where each column represents a variable and each row corresponds to a member of the data set.

Data sets are used in many fields, including statistics, machine learning, and computer science. They are typically used for analysis, modeling, and prediction and may be obtained from various sources, such as surveys, experiments, observations, or simulations.

Data Warehouse

A system for reporting and data analysis that stores current and historical data from disparate sources in one central repository. Also known as an enterprise data warehouse (EDW).

The data is typically historical and spans long periods, allowing for trend analysis and identification of patterns and relationships. Data warehouses are used in various industries and applications, including business intelligence, financial analysis, healthcare, and retail. They are often critical to a company's data architecture, providing a single source of truth for important business metrics and KPIs.

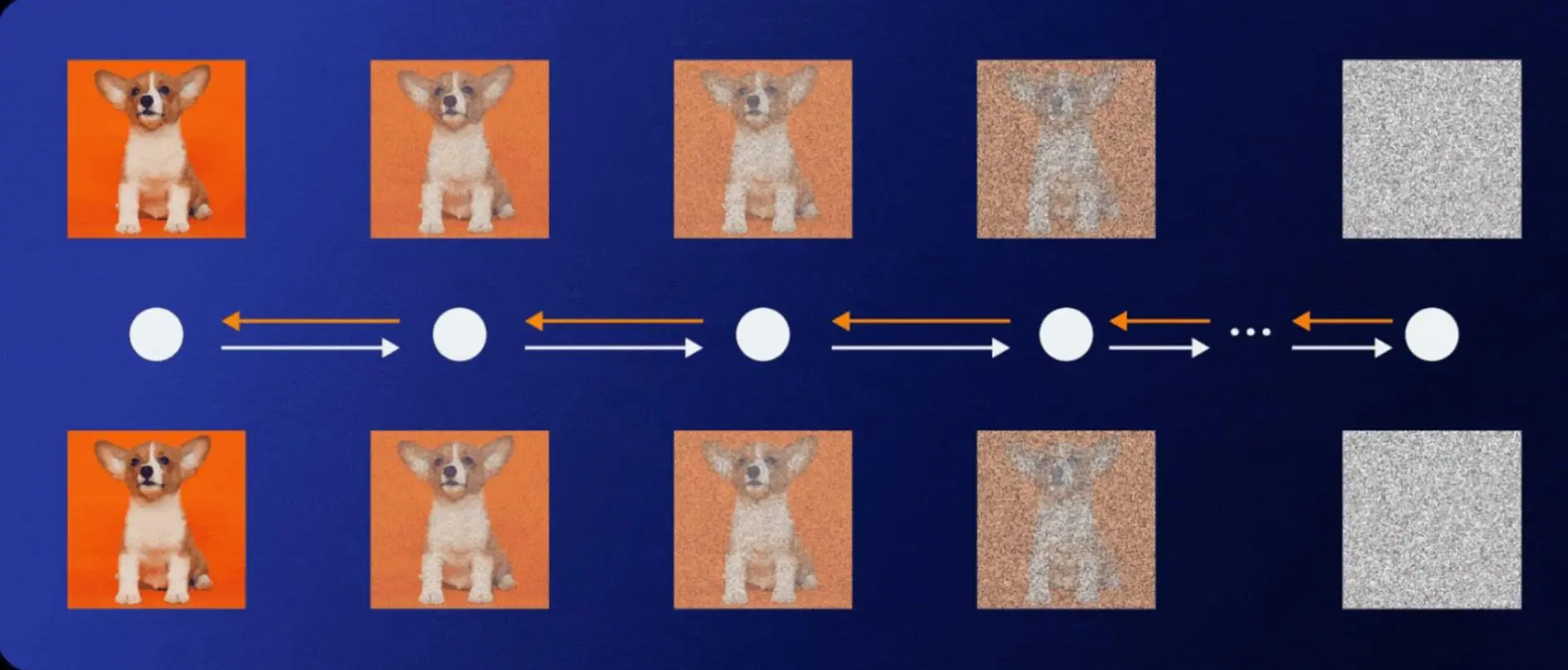

Diffusion Models

Generative models that gradually destroy training data by adding Gaussian noise, then learn to recover the data by reversing the noising process. They are used to generate data similar to the data they were trained on.

This can be useful for tasks such as image and text generation, where we want to generate new data similar to the data we have seen before. Diffusion models have gained popularity in recent years, particularly for their ability to create high-quality images and other data types.
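The noising half of the process can be sketched directly; the learned reverse step needs a trained neural network and is left out here. This example assumes a linear noise schedule with 1,000 steps and betas from 0.0001 to 0.02, values commonly seen in diffusion setups but chosen here only for illustration.

```python
import math
import random

STEPS = 1000
# Assumed linear schedule: the noise added per step grows from 1e-4 to 0.02.
betas = [1e-4 + (0.02 - 1e-4) * t / (STEPS - 1) for t in range(STEPS)]

# alpha_bars[t] is the fraction of the original signal variance left at step t.
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)

def noisy_sample(x0, t, rng):
    """Jump straight to step t: scale the data down and mix in Gaussian noise."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise * rng.gauss(0, 1) for v in x0]

rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]                    # stand-in for pixel values
print(noisy_sample(x0, 10, rng))         # early step: still close to x0
print(noisy_sample(x0, STEPS - 1, rng))  # final step: almost pure noise
```

By the last step almost none of the original signal remains, which is why generation can start from random noise and run the learned process in reverse.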

Source: https://medium.com/augmented-startups/how-does-dall-e-2-work-e6d492a2667f


Embedding

A technique in machine learning that maps high-dimensional data into a lower-dimensional space while preserving the underlying structure of the data.

Embedding transforms data, such as text or images, into a vector representation that can be used as input for machine learning models. The resulting vectors have a lower dimensionality than the original data and can capture meaningful relationships between the input data.
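To see how vectors can "capture meaningful relationships", consider the toy example below. The three-dimensional vectors are invented by hand for illustration and do not come from a real embedding model, which would typically produce hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical embeddings, made up for this example.
embeddings = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.9, 0.7, 0.2],
    "banana": [0.1, 0.0, 0.9],
}

print(round(cosine_similarity(embeddings["king"], embeddings["queen"]), 3))
print(round(cosine_similarity(embeddings["king"], embeddings["banana"]), 3))
```

Related words end up with vectors pointing in similar directions, so their cosine similarity is high, while unrelated words score much lower.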

Emotion AI

It is a field of AI that aims to understand, respond to, and simulate human emotions.

This technology can analyze facial expressions, vocal tones, and other physiological signals to infer human emotions and respond accordingly. Applications of emotion AI range from improving mental health care to enhancing customer experience.

ETL (Extract, Transform, Load)

A process in data management that involves extracting, transforming, and loading data from one or more sources into a target system.

Data is first extracted from the various sources, then transformed (cleaned, converted, and reshaped) to fit the target schema, and finally loaded into a destination system such as a data warehouse.
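The three stages can be sketched end to end with the standard library. Everything specific here is an assumption made for the example: the inline CSV stands in for a source system, the `products` table is a hypothetical target schema, and an in-memory SQLite database plays the role of the destination.

```python
import csv
import io
import sqlite3

RAW_CSV = "name,price_usd\n widget ,4.50\nGadget,12\n,3.00\n"

def extract(raw):
    """Extract: read rows from the source (an inline CSV string here)."""
    return list(csv.DictReader(io.StringIO(raw)))

def transform(rows):
    """Transform: tidy names, drop rows without one, convert dollars to cents."""
    cleaned = []
    for row in rows:
        name = row["name"].strip().lower()
        if not name:
            continue
        cleaned.append((name, round(float(row["price_usd"]) * 100)))
    return cleaned

def load(records, connection):
    """Load: write the cleaned records into the target table."""
    connection.execute("CREATE TABLE products (name TEXT, price_cents INTEGER)")
    connection.executemany("INSERT INTO products VALUES (?, ?)", records)
    connection.commit()

connection = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), connection)
loaded = connection.execute(
    "SELECT name, price_cents FROM products ORDER BY name").fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easy to swap the source or destination without touching the cleaning rules.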


Few-shot learning (FSL)

A type of machine learning that can learn from a small number of examples.

FSL uses prior knowledge to generalize to new tasks; this knowledge can come from previous experience, training data, or other sources. This makes FSL well-suited for tasks where data is scarce or expensive to collect. In finance, for example, FSL can be used to predict stock prices from a few historical data points.

Fine-tuning (machine learning)

A technique in transfer learning where a pre-trained model is further trained on a new task or dataset. Fine-tuning can be done on either the entire pre-trained model or a subset of its layers.

Fine-tuning is a machine-learning technique that involves taking a pre-trained model and adapting it to a new task or dataset by further training the model. In one common setup, most of the pre-trained weights are frozen and only the final or newly added layers are trained on the new task; in full fine-tuning, all of the weights are updated. Fine-tuning can be a useful approach for tasks where the amount of training data is limited or for improving the performance of a pre-trained model on a specific task.

Friendly artificial intelligence (FAI)

FAI research focuses on developing and ensuring constraints on an AGI that will guide it toward beneficial outcomes.

Friendly AI, or FAI, is a concept in AI research that involves designing AGI systems to behave in a way that aligns with human values and goals. FAI research aims to create AGI that will positively impact humanity while avoiding the potential risks and negative consequences of uncontrolled or poorly controlled AI systems. FAI research is closely related to machine ethics and other fields concerned with AI's ethical development and regulation.


Game theory

Game theory is a mathematical framework used to analyze situations where multiple decision-makers interact with each other.

Game theory is commonly used in economics, political science, and other fields to understand strategic decision-making and predict the outcomes of interactions between individuals or groups.
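A classic example is the prisoner's dilemma. The sketch below checks every pair of choices and reports those where neither player can do better by changing only their own choice (a Nash equilibrium). The payoff numbers are the usual textbook-style values, picked here for illustration.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")

# PAYOFFS[(a, b)] = (payoff to player A, payoff to player B), in years lost.
PAYOFFS = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"):    (-3, 0),
    ("defect", "cooperate"):    (0, -3),
    ("defect", "defect"):       (-2, -2),
}

def is_nash(a, b):
    """True if neither player gains by switching their own action alone."""
    a_is_best = all(PAYOFFS[(a, b)][0] >= PAYOFFS[(alt, b)][0] for alt in ACTIONS)
    b_is_best = all(PAYOFFS[(a, b)][1] >= PAYOFFS[(a, alt)][1] for alt in ACTIONS)
    return a_is_best and b_is_best

equilibria = [pair for pair in product(ACTIONS, repeat=2) if is_nash(*pair)]
print(equilibria)
```

The only stable outcome is mutual defection, even though both players would be better off cooperating, which is the kind of strategic tension game theory is used to analyze.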

Generative adversarial network (GAN)

A class of machine learning systems consisting of two neural networks that compete in a zero-sum game framework. One network generates data samples, while the other evaluates the generated samples and provides feedback to improve the generator.

The generator produces synthetic data samples, while the discriminator evaluates the samples and provides feedback to the generator to improve its performance. GANs have been used for various applications, including image generation, text generation, and data augmentation. GANs are effective at generating realistic samples that are difficult to distinguish from real data.


Hallucination

In artificial intelligence, hallucination is a confident response by an AI that does not seem to be justified by its training data.

In other words, the AI model generates outputs that do not align with the training data it was fed. This can occur in various AI applications, including natural language processing, computer vision, and speech recognition. Hallucinations can be a problem for AI models, as they can lead to incorrect predictions or decisions. Researchers are working on developing techniques to reduce the occurrence of hallucinations and improve the accuracy and reliability of AI models.


Intelligent agent (IA)

An intelligent agent is an autonomous entity that can accomplish goals by using sensors to observe its surroundings and tools (called actuators) to interact with them. It's called intelligent because it can use knowledge or learn from experience to better reach its goals.

Intelligent agents are used in a variety of applications, including robotics, automation, and decision support systems.


Large language model (LLM)

A type of artificial intelligence model that is capable of generating human-like language by analyzing vast amounts of text data.

LLMs can generate coherent sentences, paragraphs, and even full articles based on a prompt or input. They are commonly used in natural language processing, text generation, and other language-related applications. LLMs such as GPT-3 can perform language translation, summarization, and even coding tasks.

LangOps (Language Operations)

LangOps, short for Language Operations, refers to the workflows and practices that support the training, creation, testing, production deployment, and ongoing curation of language models and natural language solutions.

LangOps involves a variety of tasks such as data preparation, model training and tuning, deployment, and ongoing maintenance and optimization. It is critical in developing and implementing natural language processing applications such as chatbots, voice assistants, and machine translation systems.

Lemma

The base form of a word that represents all its inflected forms.

A lemma is the base form of a word. For example, the lemma of the word "running" is "run." Lemmatization is the process of reducing a word to its lemma. Lemmatization is commonly used in NLP to simplify text and improve accuracy. It is advantageous in tasks such as text classification and sentiment analysis.
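As a toy illustration, lemmatization can be pictured as a dictionary lookup. The tiny `LEMMAS` table below is hypothetical; real lemmatizers rely on large vocabularies and part-of-speech information rather than a hand-written list.

```python
# Hypothetical lookup table mapping inflected forms to their lemma.
LEMMAS = {
    "running": "run",
    "ran": "run",
    "runs": "run",
    "mice": "mouse",
    "better": "good",
}

def lemmatize(word):
    """Return the lemma if the word is known, else the lowercased word."""
    return LEMMAS.get(word.lower(), word.lower())

sentence = "Mice ran while the cat runs"
print([lemmatize(word) for word in sentence.split()])
```

Irregular forms such as "ran" and "mice" are why lemmatization needs vocabulary knowledge and cannot simply chop off word endings.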


Machine learning (ML)

The branch of artificial intelligence that focuses on creating computer systems that can automatically learn and improve from experience.

ML enables systems to learn and improve from experience without being explicitly programmed: algorithms are trained on large datasets and can make predictions or take actions based on patterns and relationships discovered in the data.

Markov chain

A mathematical model used to represent a system that transitions from one state to another based on a set of probabilities.

The probability of transitioning to the next state depends only on the current state, not on any previous states. Markov chains are often used in ML and NLP applications.
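A two-state weather model is a common way to picture this. The sketch below is a minimal example; the states and transition probabilities are made up for illustration.

```python
import random

# TRANSITIONS[current][next] = probability of moving from current to next.
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}

def next_state(current, rng):
    """Pick the next state using only the current state's probabilities."""
    states = list(TRANSITIONS[current])
    weights = [TRANSITIONS[current][state] for state in states]
    return rng.choices(states, weights=weights)[0]

def simulate(start, steps, rng):
    """Return the start state followed by `steps` sampled transitions."""
    path = [start]
    for _ in range(steps):
        path.append(next_state(path[-1], rng))
    return path

rng = random.Random(0)
path = simulate("sunny", 10, rng)
print(path)
```

Note that `next_state` never looks at the earlier history, only at the current state, which is the defining property of a Markov chain.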

Metadata

Data that provides information about other data.

Metadata can include details such as the author of a document, the date it was created, the file size, or keywords that describe its content. It is used to help organize and classify data and to make it easier to search and retrieve.


Natural language processing (NLP)

A field of computer science and artificial intelligence that focuses on interactions between computers and humans through natural language.

NLP enables machines to interact with humans in their own language. It involves tasks such as text classification, sentiment analysis, and language translation.

Neural networks

A type of ML algorithm that builds on the structure of how the human brain works, consisting of layers of interconnected nodes that receive input signals, process these signals and provide output.

Neural networks are a subset of ML algorithms consisting of interconnected nodes (neurons) that process information in parallel, with each node performing a simple computation. Neural networks can be used for various tasks, from facial recognition and image classification to natural language understanding and pattern recognition.


Neural machine translation (NMT)

It is an approach to machine translation that uses an artificial neural network to predict the likelihood of a sequence of words, typically modeling entire sentences in a single integrated model. NMT is a way to translate text from one language to another using a computer.

NMT can produce more fluent translations than traditional statistical machine translation methods.

Neurocybernetics

Also known as brain-computer interface (BCI) technology, neurocybernetics establishes a direct communication pathway between an enhanced or wired brain and an external device.

In short, neurocybernetics aims to create a direct link between the human brain and computers or other devices for various purposes. BCIs are used to research, map, assist, augment, or repair human cognitive or sensory-motor functions. They differ from neuromodulation in that they allow for bidirectional information flow.


Predictive analytics

Predictive analytics is a technique that analyzes current and historical data to predict future events.

These techniques use data mining, statistical algorithms, and machine learning to identify patterns and relationships in data and then apply these insights to make predictions.


Reinforcement learning (RL)

RL is a type of machine learning that enables an agent to learn how to behave in an environment by trial and error. The agent receives rewards or punishments for its actions and learns to take actions that maximize its rewards.

RL is often used when it is difficult or impossible to pre-program an agent with all the necessary knowledge. For example, RL can be used to train an agent to play chess, where the number of possible positions is far too large to enumerate by hand. RL is a powerful tool that can be used to solve various problems. However, it can be difficult to design and train RL agents, and they can be susceptible to overfitting.
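Trial-and-error learning from rewards can be sketched with a multi-armed bandit, a simplified RL setting with actions and rewards but no changing state. The reward probabilities, the `epsilon` exploration rate, and the function name below are assumptions made for this example.

```python
import random

def run_bandit(reward_probs, steps, epsilon, rng):
    """Epsilon-greedy agent: mostly exploit the best estimate, sometimes explore."""
    n_arms = len(reward_probs)
    estimates = [0.0] * n_arms   # running average reward per action
    counts = [0] * n_arms        # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                          # explore
        else:
            arm = max(range(n_arms), key=lambda i: estimates[i])  # exploit
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

rng = random.Random(0)
estimates, counts = run_bandit([0.2, 0.5, 0.8], steps=5000, epsilon=0.1, rng=rng)
print([round(e, 2) for e in estimates])
print(counts)
```

The agent is never told which action is best. It discovers this from the rewards it receives and ends up choosing the highest-paying action most of the time.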

Reinforcement Learning from Human Feedback (RLHF)

RLHF is an approach in artificial intelligence where an AI agent learns from feedback provided by humans, such as preferences or rankings, to improve its decision-making process.

It is useful when it may be difficult to specify exact rules for decision-making or where human intuition and preferences are important factors. It has applications in recommendation systems, personalized advertising, and game-playing AI.

Recurrent Neural Networks (RNN)

RNN is a type of neural network that can process sequential data by using information from previous steps as context.

This allows information to persist over time and inform the network's predictions. RNNs are commonly used in NLP and speech recognition, where understanding the context of a sentence or phrase is important for accurate results. They have also been used in other fields, such as image captioning, stock prediction, and music composition.
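How earlier inputs persist can be seen in a recurrent cell reduced to a single number. This is a bare-bones sketch: the weights are arbitrary values picked for illustration, whereas a real RNN uses weight matrices learned during training.

```python
import math

def rnn_forward(inputs, w_x, w_h, bias):
    """Scalar recurrent cell: each hidden state mixes the new input with the old state."""
    hidden = 0.0
    states = []
    for x in inputs:
        hidden = math.tanh(w_x * x + w_h * hidden + bias)
        states.append(hidden)
    return states

# Arbitrary illustrative weights.
with_signal = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, bias=0.0)
without_signal = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, bias=0.0)

print([round(h, 3) for h in with_signal])
print(without_signal)
```

Although the last two inputs are identical in both sequences, the final hidden states differ, because the first input is still echoing through the state.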

Responsible AI

Responsible AI refers to the practice of developing and deploying AI systems in an ethical and socially responsible manner.

This includes ensuring transparency, fairness, accountability, and privacy in designing, developing, and using AI technologies.


Supervised learning

A type of machine learning where an algorithm learns from labeled training data to make predictions or decisions based on new and unseen data.

In supervised learning, each training example consists of an input and a corresponding output, or target, that the model is trying to predict. Supervised learning aims to find a function that accurately maps inputs to outputs, enabling the model to make accurate predictions on new data.
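Finding "a function that maps inputs to outputs" can be illustrated with the simplest case, fitting a straight line to labeled examples by least squares. The data points and helper names here are invented for the example.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Labeled training data: inputs xs with known target outputs ys.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
prediction = slope * 5.0 + intercept   # predict for an unseen input
print(slope, intercept, prediction)
```

The model learns the relationship from the labeled pairs and then applies it to an input it has never seen.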


Technological Singularity

A hypothetical future when technological progress will have accelerated to such a degree that it will cause an irreversible change in human civilization. It is often associated with the creation of superintelligence.

Some experts have raised concerns about its potential impact on society and humanity. However, singularity is still a highly debated topic, and there is no clear consensus on whether or when it will occur.

Tokens

The individual words or units of meaning that are used to construct sentences in natural language.

Tokens are the basic units of text in natural language processing, representing individual words or other units (such as subwords or punctuation marks) used to build sentences and other linguistic structures. Tokenization is the process of breaking text into tokens, an essential step in many natural language processing tasks.
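A very simple tokenizer can be written with one regular expression that separates words from punctuation. This is only a sketch; the `tokenize` helper is hypothetical, and many modern language models use subword tokenizers that split rare words into smaller pieces.

```python
import re

def tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("Hello, world! AI is fun.")
print(tokens)
```

Each word and each punctuation mark becomes its own token, while whitespace is discarded.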

Transhumanism

A philosophical movement that advocates for using advanced technologies to transform the human condition and enhance human intellect and physiology.

This can include various technologies, such as genetic engineering, brain-computer interfaces, and AI. The ultimate goal of transhumanism is to create a post-human species that is more intelligent, healthier, and longer-lived than humans.


Unsupervised Learning

A type of machine learning in which an algorithm learns to find patterns in data without being explicitly trained on labeled examples.

Unsupervised learning is helpful for discovering previously unknown patterns or structures in data. It is often used in data clustering, dimensionality reduction, and anomaly detection.
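Clustering is easy to picture with k-means on a handful of numbers. The sketch below groups one-dimensional values into two clusters without any labels; the data and the starting centers are assumptions chosen for illustration.

```python
def kmeans_1d(values, centers, iterations=10):
    """Alternate between assigning points to the nearest center and re-centering."""
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for value in values:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters

values = [1.0, 1.2, 0.8, 9.0, 9.5, 8.5]
centers, clusters = kmeans_1d(values, centers=[0.0, 5.0])
print([round(c, 2) for c in centers])
print(clusters)
```

No one told the algorithm that there is a "small" group and a "large" group; it found that structure in the data on its own.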


Weak AI, Narrow AI

It refers to artificial intelligence that is designed and trained to perform a single task or a narrow range of tasks.

Weak AI is currently the most common form of artificial intelligence, and it is used in a wide range of applications, such as voice assistants, image recognition, and recommendation systems.
