
5 Cutting-Edge Technologies to Explore Using Neural Networks

Serpstat’s Founder, Oleg Salamaha, Explains and Gives Advice

AI has become impossible to ignore. For this article, we interviewed the founder of Serpstat, who has been training AI models for a long time. Here you will find his insights into what neural networks can do, along with definitions for readers who are new to the technology.

We will also briefly discuss popular types of neural networks. Finally, we will look at current trends in NLP (natural language processing) to give you an idea of what may be developed in the future.

Curious about how machine learning and neural networks work? Keep reading: this article breaks it down in plain language.

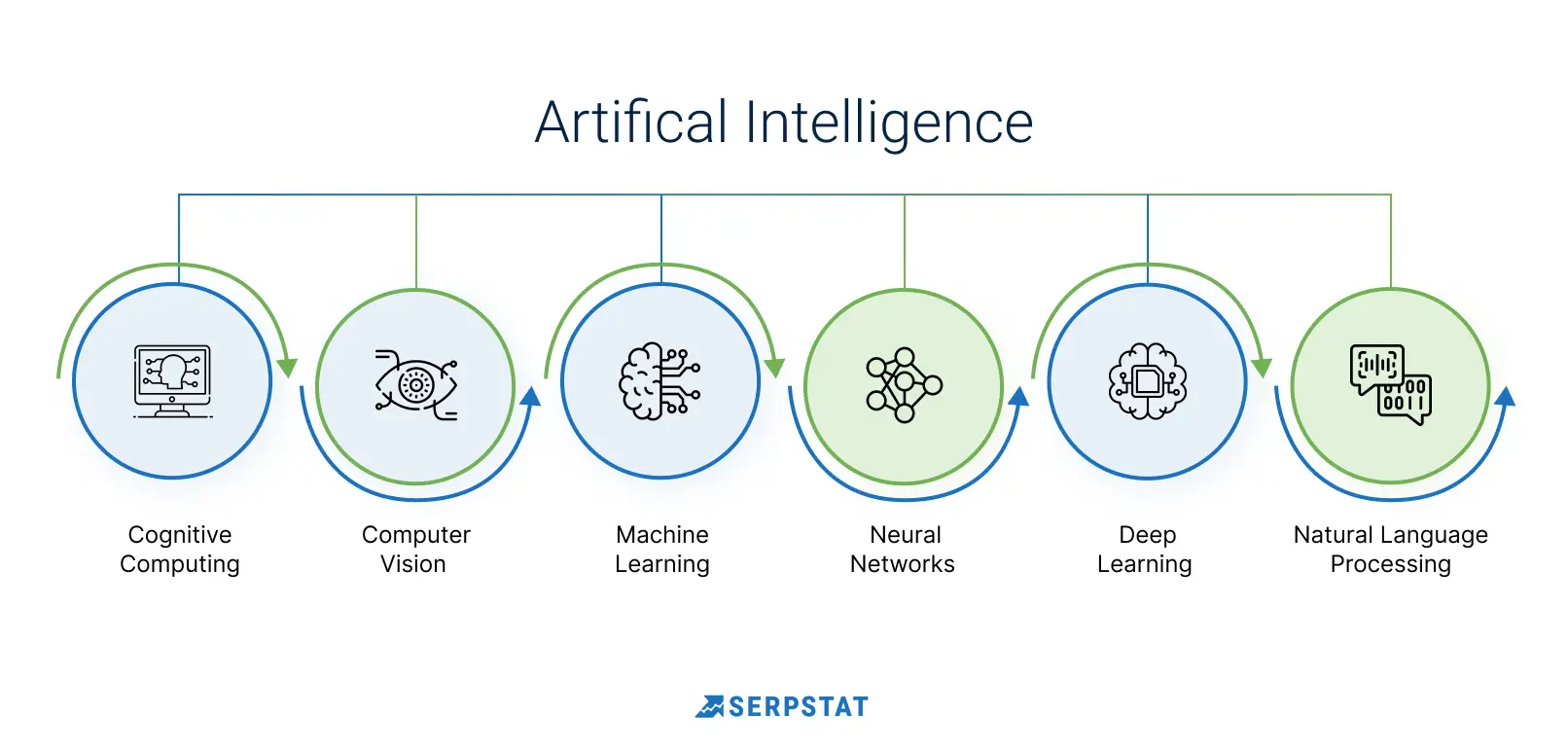

AI Glossary:

Machine learning (ML) is the branch of artificial intelligence that focuses on creating computer systems that can automatically learn and improve from experience.

Neural networks are a type of ML algorithm loosely inspired by the structure of the human brain. They consist of layers of interconnected nodes that receive input signals, process them, and produce an output.

Neural networks can be used for a variety of tasks, from face recognition and image classification to natural language understanding and pattern recognition.

Natural language processing (NLP) is an area of AI, today driven largely by ML, that enables machines to understand and interact with humans in their native language.

Artificial General Intelligence (AGI) refers to hypothetical intelligent machines that exhibit general cognitive abilities and can comprehend the world around them as a human does. AGI would allow machines to understand, reason, and act in the same way that humans do. Achieving it would require advances in technologies such as natural language processing, image recognition, voice control, decision-making, and problem-solving.

MindCraft: machine learning from a scientific perspective

Can machines mimic human learning? Artificial neural networks (ANNs) are sometimes colloquially referred to as AI because they are designed to loosely mimic how our brains function. They can make decisions and perform tasks in a way that resembles human thinking. While machines cannot yet compete with human intelligence in many respects, they can still help find information or offer advice. Neural networks were created to narrow this gap, and they have transformed the field of AI. Artificial neurons are loosely modeled on biological neurons, which lets networks of them keep learning from new data, analyze it, and gradually improve their results.

Deep learning is a type of machine learning, and therefore of artificial intelligence, that mimics how humans acquire knowledge. As with humans, machines are fed observations (data). A learning algorithm analyzes the data and finds the patterns that best match the observations.
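To make "finding the pattern that best matches the observations" concrete, here is a minimal sketch in Python. It is not how any particular deep learning system is implemented: it assumes a tiny, made-up dataset generated by the rule y = 2x + 1 and uses plain gradient descent (the same basic optimization idea used to train neural networks) to recover that rule from the data alone.

```python
# A minimal sketch: learn the pattern behind a few observations with
# gradient descent. The data and learning rate are illustrative assumptions.

def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y approximately equal to w * x + b by minimizing mean squared error."""
    w, b = 0.0, 0.0  # the model starts with no knowledge of the pattern
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move the parameters a small step in the direction that reduces the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Observations generated by the hidden rule y = 2x + 1
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")  # learned w=2.000, b=1.000
```

The algorithm never sees the rule itself, only the observations; repeated small corrections drive the error toward zero until the learned values match the pattern.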

The pattern the algorithm finds is captured in the learned model: a mathematical function that defines the relationship between inputs and outputs. Without patterns, there can be no learning, neither human nor machine. The fundamental building blocks of a neural network are called layers; they work together to represent the training data in increasingly abstract ways, which allows the model to learn patterns.
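The idea that a network is one mathematical function built from layers can be sketched in a few lines of Python. This is an illustrative toy, not a real model: the weights below are hypothetical, hand-picked numbers (in practice they are learned during training), and the network has just 2 inputs, 3 hidden neurons, and 1 output.

```python
import math

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
        for neuron_w, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical, hand-picked weights for a 2-input -> 3-hidden -> 1-output network
hidden_w = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
hidden_b = [0.0, 0.1, 0.2]
output_w = [[1.0, -1.0, 0.5]]
output_b = [0.0]

def model(x):
    """The whole network is a single function made by composing its layers."""
    hidden = dense(x, hidden_w, hidden_b, relu)           # first layer
    return dense(hidden, output_w, output_b, sigmoid)[0]  # second layer

print(model([1.0, 2.0]))
```

Each layer transforms the output of the previous one; stacking more layers lets the overall function express more complex relationships.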

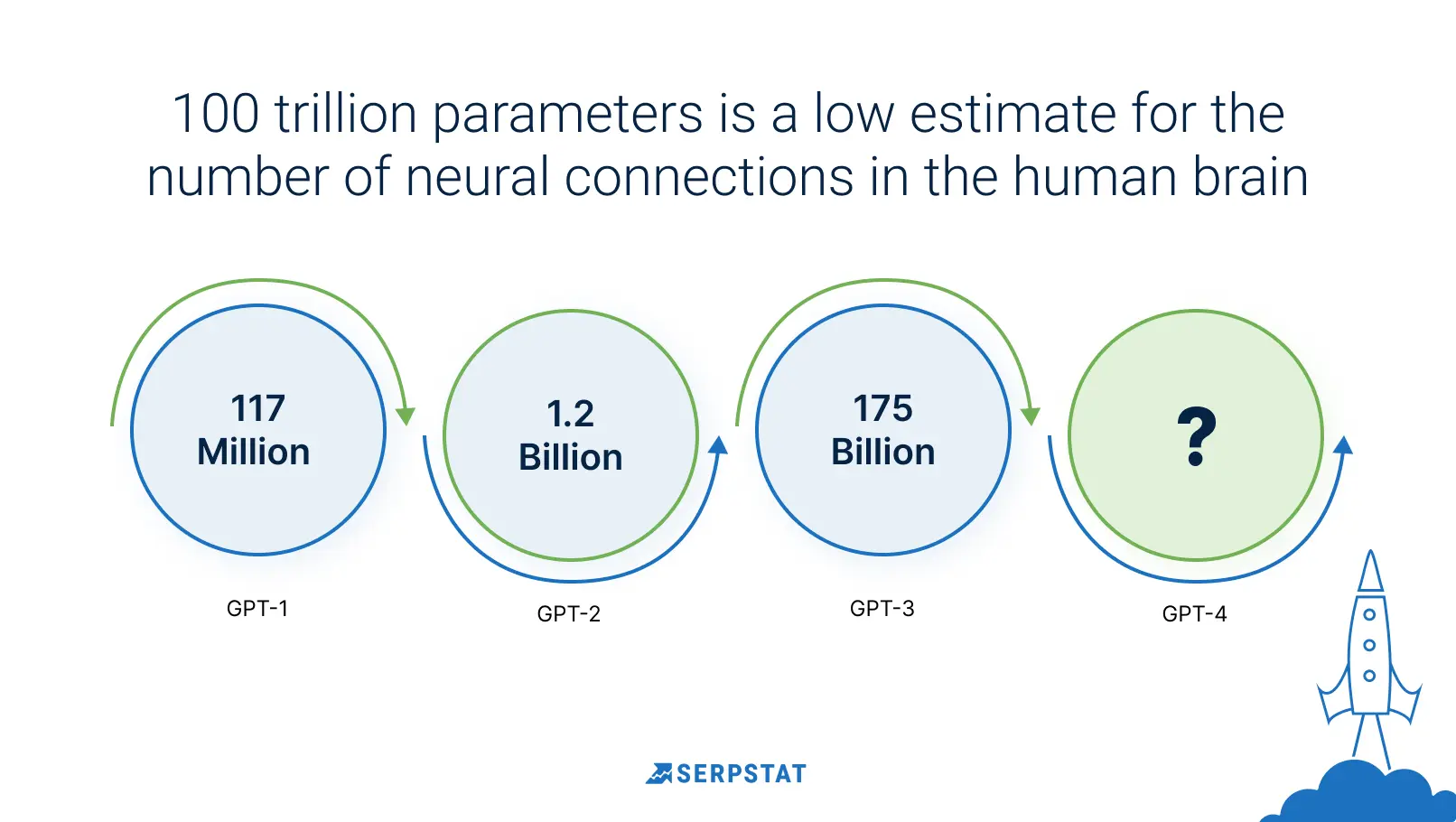

Language models have a very large number of parameters. From the deep learning point of view, the number of parameters is set by the structure and complexity of the neural network itself: for example, adding layers or adding neurons to each layer increases the number of parameters, resulting in a more complex model and potentially better performance. The size of the training dataset matters in a different way: generally, the more data fed into a neural network, the better those parameters can be trained, and larger models usually need more data to reach their potential.
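The link between network structure and parameter count is simple arithmetic. The sketch below counts the weights and biases of a fully connected network; the layer sizes are illustrative assumptions (784 inputs, as for a 28x28 image, and 10 outputs), and real language models such as GPT also contain attention and embedding parameters that this toy count ignores.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    A layer with n_in inputs and n_out neurons has n_in * n_out weights
    plus n_out biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

print(count_parameters([784, 128, 10]))       # 101770: one hidden layer
print(count_parameters([784, 256, 10]))       # 203530: a wider hidden layer
print(count_parameters([784, 128, 128, 10]))  # 118282: one extra hidden layer
```

Doubling the width of the hidden layer roughly doubles the parameter count, while adding another narrow layer adds comparatively few parameters.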

Scaling parameters of GPT models

Data source: https://uxplanet.org/gpt-4-facts-rumors-and-expectations-about-next-gen-ai-model-52a4ddcd662a#:~:text=GPT%2D1%20had%20117%20million,size%2C%20with%20175%20billion%20parameters.

As OpenAI's models have grown more popular, new models such as Stanford's Alpaca 7B have emerged. This language model is fine-tuned from Meta's LLaMA 7B model on 52K instruction-following demonstrations. Initial evaluations suggest that Alpaca behaves similarly to OpenAI's text-davinci-003.

In machine learning, a parameter refers to a configuration variable the model learns during training. In GPT, these parameters are primarily in the form of weights and biases, which are used to calculate the output of each neuron in the network.

Note, however, that OpenAI has not disclosed how much data was used to train GPT-4. Some researchers believe the model was trained on the Common Crawl dataset, which made up 60% of GPT-3's training data: a broad scrape of 60 million internet domains and a large subset of the sites they link to, including more reputable sources like the BBC, MIT, or Harvard and less reputable ones like Reddit. OpenAI's researchers also fed in other curated sources, such as Wikipedia and the full texts of historically relevant books.

The scientific perspective of machine learning emphasizes the importance of understanding the underlying principles and mechanisms of learning algorithms. By developing a deeper understanding of these systems, we can improve their performance and create more efficient and effective solutions to complex problems.

Speech processing AI bots: ChatGPT's uniqueness and popularity

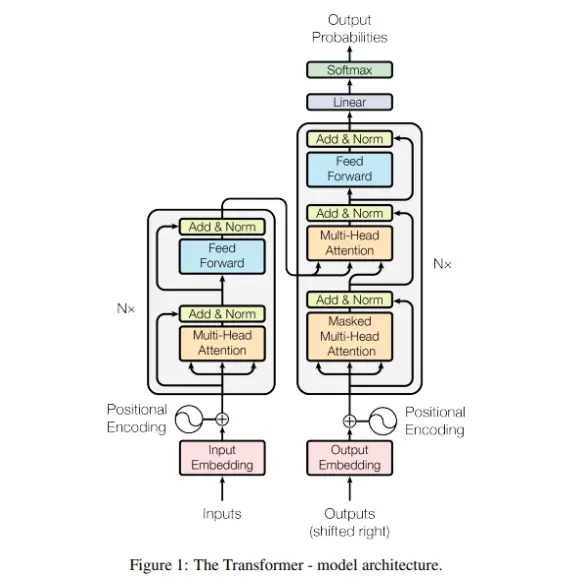

GPT-3 is based on the transformer architecture, which allows it to process sequential data such as text effectively. This architecture enables it to handle more complex tasks, such as understanding context, and generate more coherent and human-like text.

The Transformer is a neural network architecture built from two kinds of layers: attention layers, which let the model focus on the most relevant parts of the input and connect different parts of the data, and feed-forward layers, which further process the information at each position.

The original architecture is split into two parts, an encoder and a decoder (GPT models use only the decoder part). Its layout is shown in the figure from the paper that introduced it:

Attention Is All You Need, Google Research Paper, 2017
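The attention layers of the Transformer compute what the paper calls scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. Below is a simplified pure-Python sketch of that formula. As a simplification, it uses the input vectors directly as queries (Q), keys (K), and values (V); a real Transformer derives them through learned projection matrices and runs several attention heads in parallel. The `causal` flag shows how a GPT-style decoder stops each token from looking at later tokens.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    queries, keys, values: lists of vectors, one per token position.
    causal=True hides future positions, as in GPT-style decoders.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # token i may not look ahead
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)  # how much token i attends to each position
        # Each output is a weighted average of the value vectors
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(len(values[0]))])
    return outputs

# Three toy token vectors (hypothetical embeddings)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(x, x, x, causal=True)
print(out[0])  # [1.0, 0.0]: the first token can only attend to itself
```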

OpenAI has released different versions of GPT-3, including the original models in several sizes, such as "Davinci" (the largest) and "Curie," as well as versions fine-tuned for specific tasks. GPT-3.5 is the name OpenAI uses for a later series of updated GPT-3 models, such as text-davinci-003.

ChatGPT is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022. It has many advantages, but its output still needs to be checked and corrected. The model still lacks a human-like understanding of real-life situations.

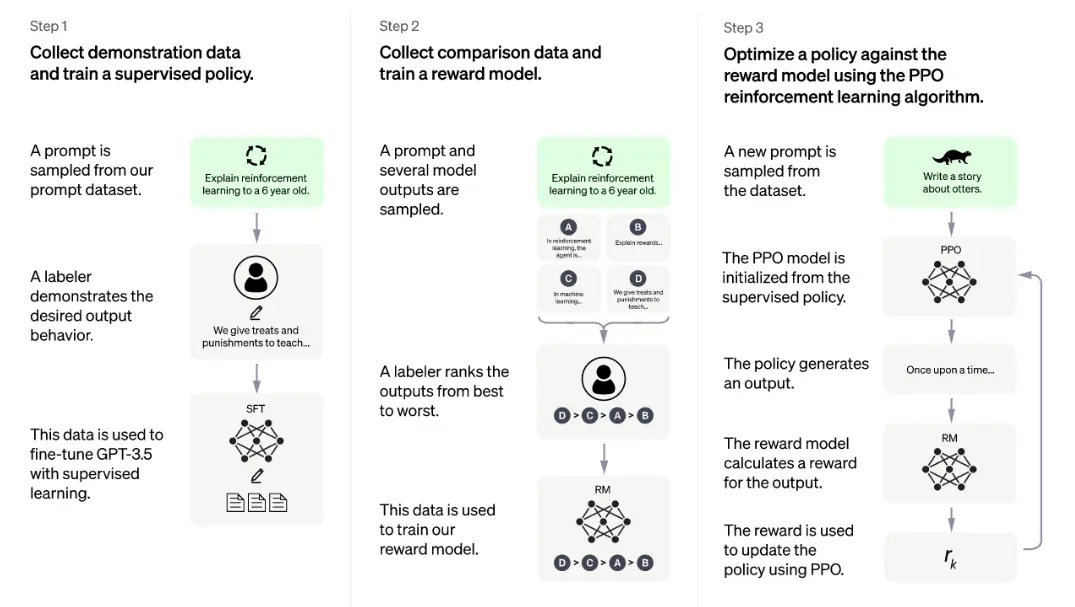

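This model was trained using Reinforcement Learning from Human Feedback (RLHF). First, an initial model was trained with supervised fine-tuning: human AI trainers wrote conversations in which they played both the user and the AI assistant, using model-written suggestions to help compose their responses. This new dialogue dataset was mixed with the InstructGPT dataset, which was converted into a dialogue format. Trainers then ranked alternative model responses, and these rankings were used to train a reward model that guided further fine-tuning with reinforcement learning (Proximal Policy Optimization).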
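A key step in RLHF is training a reward model on human rankings of responses. OpenAI's InstructGPT work describes a pairwise loss for this step, -log(sigmoid(r_preferred - r_rejected)), which is small when the response humans preferred already gets the higher score. The sketch below computes only that loss; the reward scores are hypothetical numbers, and a real reward model is itself a large neural network whose scores are updated to reduce this loss.

```python
import math

def pairwise_reward_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)).

    Small when the human-preferred response scores higher, large when
    the ranking is reversed. Uses log1p(exp(-diff)), which equals
    -log(sigmoid(diff)); very negative diffs would overflow exp here.
    """
    diff = reward_preferred - reward_rejected
    return math.log1p(math.exp(-diff))

# Hypothetical scores for (preferred, rejected) response pairs ranked by humans
ranked_pairs = [(1.2, -0.3), (0.4, 0.9), (2.0, 1.5)]
avg = sum(pairwise_reward_loss(p, r) for p, r in ranked_pairs) / len(ranked_pairs)
print(f"average ranking loss: {avg:.4f}")
```

The second pair contributes the most to the loss because the model currently scores the rejected response higher, which is exactly the kind of mistake training would correct.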

With ongoing research and development in the field of AI, the potential applications for speech-processing bots are virtually limitless, and we can look forward to exciting new developments in this area in the years to come.

What are the most relevant NLP tasks in language modeling? 5 things to think of

Natural language processing can be broken down into two main categories: understanding and generation. Tasks commonly handled by language models include sentiment analysis, question answering, query resolution, text summarization, and more. Together, these tasks are used to evaluate and improve the accuracy and efficiency of language models.

Without getting too technical, here are five functions of language models in particular, and neural networks in general, that you need to know about.

Answering questions

GPT-3 was pre-trained on a large and diverse body of text, which lets it handle tasks such as language translation and question answering and allows it to be fine-tuned for specific tasks with minimal additional training data.

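One characteristic of neural question answering is that the models can be trained end-to-end: a single model learns directly from examples of questions and answers instead of relying on hand-built intermediate steps. For example, a model can learn to rank subject-predicate pairs from a knowledge base to retrieve the facts relevant to a question.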
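The ranking step can be illustrated with a toy Python sketch. In an end-to-end neural system, the scoring function is learned; here, as a clearly simplified stand-in, a fixed bag-of-words cosine similarity scores each candidate. The knowledge-base candidates are hypothetical.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lowercase word counts, ignoring question marks."""
    return Counter(text.lower().replace("?", "").split())

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing words
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def rank_candidates(question, candidates):
    """Sort candidate subject-predicate pairs from most to least relevant.

    A trained model would learn this scoring; cosine similarity is a stand-in.
    """
    q = bag_of_words(question)
    return sorted(candidates, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)

# Hypothetical subject-predicate pairs from a toy knowledge base
candidates = ["france population", "eiffel tower height", "france capital"]
print(rank_candidates("What is the capital of France?", candidates)[0])  # france capital
```

Once the best pair is found, the system looks up its stored value (here, the capital of France) and returns it as the answer.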

Summarization using neural networks

In short, text summarization involves shortening a text while retaining its important information and meaning. Automating this task is becoming more popular because writing summaries by hand takes time and effort. Text summarization supports many other NLP tasks, such as text classification and question answering, and is widely used to summarize legal and news texts. It can also be integrated into systems to reduce document length.
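For contrast with neural approaches, here is a classic extractive baseline in Python. It is not a neural network: it scores each sentence by how frequent its words are across the whole text and keeps the top-scoring sentences. Neural summarizers instead learn which content matters, and abstractive models can write new sentences rather than copying existing ones. The stopword list is a small, illustrative assumption.

```python
import re
from collections import Counter

# A tiny, illustrative stopword list (real systems use much larger ones)
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "it", "for", "on", "that", "this"}

def content_words(text):
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def summarize(text, n_sentences=1):
    """Extractive summary: keep the n sentences with the most frequent words."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        return sum(freq[w] for w in tokens) / (len(tokens) or 1)

    top = sorted(sentences, key=score, reverse=True)[:n_sentences]
    # Keep the selected sentences in their original order
    return " ".join(s for s in sentences if s in top)

text = ("Neural networks learn patterns from data. "
        "Neural networks need a lot of data to learn patterns well. "
        "The weather was nice.")
print(summarize(text))  # Neural networks learn patterns from data.
```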

Clustering/classification

Neural networks can be trained on labeled as well as unlabeled data. One advantage of neural networks and other deep learning models is that they can perform unsupervised learning, in which the model groups similar objects together without knowing what those objects are. In contrast, supervised learning occurs when the model is given data with corresponding labels and learns the correlations between them.

Neural networks are highly effective for supervised learning tasks like classification and unsupervised learning tasks like clustering.
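The difference between the two settings shows up clearly in a small Python sketch. For the unsupervised side it uses k-means, a classic clustering algorithm rather than a neural network, chosen because it is short; for the supervised side it uses a perceptron, which is a single artificial neuron. The six 2-D points and their labels are made-up assumptions; note that only the perceptron ever sees the labels.

```python
def kmeans(points, k, iterations=10):
    """Unsupervised: split 2-D points into k groups using no labels at all."""
    centroids = list(points[:k])  # naive initialization: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each point to its nearest centroid (squared Euclidean distance)
            nearest = min(range(k), key=lambda c: (p[0] - centroids[c][0]) ** 2
                                                  + (p[1] - centroids[c][1]) ** 2)
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty)
        centroids = [
            (sum(x for x, _ in cl) / len(cl), sum(y for _, y in cl) / len(cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
    return clusters

def predict(w, b, point):
    """The neuron fires (outputs 1) if the weighted sum plus bias is positive."""
    return 1 if w[0] * point[0] + w[1] * point[1] + b > 0 else 0

def train_perceptron(points, labels, epochs=20, lr=0.1):
    """Supervised: a single artificial neuron learns a boundary from labeled points."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(points, labels):
            error = y - predict(w, b, (x1, x2))  # 0 if correct, +1 or -1 if wrong
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b

points = [(-2, -2), (2, 2), (-3, -2), (2, 3), (-2, -3), (3, 2)]
labels = [0, 1, 0, 1, 0, 1]  # only the supervised model sees these

groups = kmeans(points, k=2)
w, b = train_perceptron(points, labels)
print(groups)
print(predict(w, b, (1.5, 1.0)), predict(w, b, (-1.5, -1.0)))
```

Both methods end up separating the same two groups, but k-means only discovers that the groups exist, while the perceptron learns which group carries which label and can classify new points.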

Text generation as one of the main functions of ANN

Text generation is a crucial component of natural language processing, in which computer algorithms interpret free-form text in a particular language or generate similar text based on training examples. Shallow learning techniques struggled with text generation for years, but deep learning algorithms have revitalized the field.
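Modern text generators produce text one token at a time, each time predicting a likely next token from what came before. The Python sketch below shows only that autoregressive loop. As a deliberate simplification, a table of word-pair (bigram) counts stands in for the deep neural network that a real model such as GPT would use to make the prediction, and the tiny training corpus is made up.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = corpus.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=8):
    """Greedy autoregressive generation: keep appending the most likely next word."""
    out = [start]
    for _ in range(max_words - 1):
        options = follows.get(out[-1])
        if not options:
            break  # no known continuation for this word
        out.append(options.most_common(1)[0][0])
    return " ".join(out)

corpus = "neural networks learn patterns . neural networks generate text . deep networks learn fast"
model = train_bigrams(corpus)
print(generate(model, "neural", max_words=4))  # neural networks learn patterns
```

Always picking the single most likely word is called greedy decoding; real systems often sample from the predicted probabilities instead, which produces more varied text.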

However, the challenge of comprehending the true intention behind language and its contextual meaning persists. Genuine AGI (Artificial General Intelligence) systems, described as AI comparable to human intelligence, do not currently exist outside science fiction. According to Sam Altman, CEO of OpenAI, the company's systems are getting closer to AGI, and OpenAI has become increasingly cautious about creating and deploying new models.

Serpstat has also launched AI tools based on GPT-3 and GPT-NeoX. With these tools, you can test AI-generated titles, descriptions, and article outlines and take advantage of our flexible article builder.


Translation

Neural machine translation (NMT) is a way to translate text from one language to another using a computer. It uses an artificial neural network to predict the likelihood of a sequence of words, typically modeling entire sentences in a single integrated model.

The advantage of this approach is that it can be trained on large amounts of text in both languages and produce more natural-sounding translations. Neural networks can be trained on parallel datasets, in which the same text is available in two languages. Through this training, the network learns to recognize patterns in the data and develops a mapping between the input and output vocabularies.

The translation function of neural networks has been extensively used in machine translation applications, where it has shown impressive results in accurately translating text from one language to another.
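How does an NMT model "predict the likelihood of a sequence of words"? By the chain rule of probability, the likelihood of a whole translation is the product of the probabilities of each word given the source sentence and the words before it. The Python sketch below assumes the per-word probabilities have already been produced; they are made-up numbers standing in for a trained network's output, and summing log-probabilities avoids numerical underflow on long sentences.

```python
import math

def sequence_log_prob(step_probs):
    """Chain rule: log P(y1..yn | x) = sum over t of log P(y_t | y_<t, x)."""
    return sum(math.log(p) for p in step_probs)

def best_translation(candidates):
    """Pick the candidate whose word sequence has the highest log-probability."""
    return max(candidates, key=lambda c: sequence_log_prob(c["probs"]))

# Hypothetical per-word probabilities a trained NMT model might assign
# to two candidate English translations of the same source sentence
candidates = [
    {"text": "the cat sleeps", "probs": [0.6, 0.5, 0.7]},
    {"text": "the cat is sleeping", "probs": [0.6, 0.5, 0.3, 0.8]},
]
print(best_translation(candidates)["text"])  # the cat sleeps
```

Because every extra word multiplies in another probability below 1, longer candidates are naturally penalized; practical systems often apply length normalization to compensate.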

AI+human: enhancing capabilities

Ever since IBM's Deep Blue chess-playing system, some of the best teams have combined AI and humans.

In 1996, IBM's Deep Blue became the first computer to win a game against a reigning world chess champion, and in 1997 it won a full match. It was an impressive feat for artificial intelligence, a field that had been developing since the 1950s. Nowadays, modern chess engines can easily defeat Deep Blue thanks to advances in computing power and in machine learning, which Deep Blue lacked; this lets them go well beyond hand-programmed rules.

The process is ever-changing: as technology advances, people's views will also shift accordingly. However, one thing remains certain: the effect of ChatGPT and other AI systems will be determined by what they are capable of and how people perceive them.

While AI has made significant progress in some areas, such as image and speech recognition, it still cannot reason and think creatively as humans do. For example, AI may be able to make predictions based on past data, but it cannot generate genuinely new ideas or apply common-sense reasoning in new situations. Therefore, we must ensure proper quality control as we delegate more decision-making autonomy to machines.

This is why AI is often used alongside human intelligence, as in collaborative robots or virtual assistants, where humans provide the creativity and strategic thinking that AI lacks. It has become a cliché, but the phrase still fits best: “AI will not take your job, but people who use AI will.”

Many employees today have to deal with numerous administrative tasks and bureaucratic processes. Future organizations, however, will use AI to manage these tasks and processes, with humans and AI working together. Artificial intelligence can disrupt and improve many existing processes in a company, so humans can focus more on people (your employees and customers), making your organization more humane.

Neural networks face several roadblocks. Their capabilities can be improved in two ways, architecturally (a larger network) or through additional training, and both require a lot of resources, which makes these models very expensive to train.
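To put "very expensive to train" into numbers, a widely used rule of thumb from the scaling-law literature estimates the training compute of a dense transformer as roughly 6 floating-point operations per parameter per training token. The Python sketch below applies it to GPT-3's published scale (175 billion parameters, about 300 billion training tokens). The 100 TFLOP/s sustained accelerator throughput is a hypothetical assumption for illustration, not a measured figure.

```python
SECONDS_PER_DAY = 86_400

def training_flops(n_params, n_tokens):
    """Rule of thumb for dense transformers: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens

def gpu_days(total_flops, sustained_flops_per_second):
    """Days a single accelerator would need at the given sustained throughput."""
    return total_flops / sustained_flops_per_second / SECONDS_PER_DAY

# GPT-3 scale: 175 billion parameters trained on roughly 300 billion tokens
flops = training_flops(175_000_000_000, 300_000_000_000)
print(f"{flops:.2e} FLOPs")  # 3.15e+23 FLOPs

# Hypothetical accelerator sustaining 100 TFLOP/s (an assumption)
print(f"{gpu_days(flops, 100e12):,.0f} GPU-days")
```

Tens of thousands of single-accelerator days is why training runs at this scale are spread across large clusters, and why both bigger networks and more training data drive up the cost.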

Biases based on race, gender, and religion have also been found in these models, likely due to tendencies present in the data used for training. To prevent these issues, it's important to take precautions and closely monitor the deployment of powerful AI models like GPT-3.

Other issues with AI adoption are the ethical and explainability concerns arising from the black-box decision-making of these systems. Both humans and machines make decisions, but humans have the advantage of being able to seek help and guidance to avoid mistakes. Decision-making should therefore involve multiple people who contribute their knowledge to build a decision tree covering both technical and business aspects.

It's important to note that utterly bias-free decision-making is impossible, as humans are prone to biases. However, these biases can be corrected when detected. We need to proactively detect and address such bugs in AI tools to ensure they are used ethically and responsibly.

We are only at the beginning of AI tool adoption. Such co-pilots must first earn people's trust; only then will people start using them in their daily work.

FAQ

How long will it take for artificial intelligence to become an advanced tool that replaces certain professions?

It's a complicated question, so the answer is: it depends :) According to Serpstat's founder, there might be transformational changes within some professions, as well as new professions such as "ChatGPT prompt specialist." The practice of prompt engineering already exists in artificial intelligence.

How can AI work in tandem with human intelligence, and what kinds of jobs are best suited for this collaborative approach?

While artificial neural networks simulate the brain's signaling process, they still fall short when it comes to strategic and innovative thinking, which is essential for many jobs. Therefore, AI is more likely to complement human intelligence than to replace it entirely, through collaborative approaches that leverage both AI and human creativity.

How does GPT-4 differ from ChatGPT regarding its ability to handle different types of media?

In the past, people wondered whether GPT-4 would work only with text, like ChatGPT, or whether it would also handle other types of media, such as images or videos. Models that can work with multiple types of media are called multimodal models. As it turns out, GPT-4 accepts images as input (its output is text), but at the time of writing, only one company was testing this feature in collaboration with OpenAI.

What does Google think of AI-generated content?

AI-powered content creation tools don't just create content by themselves. It's the human element that prompts them. Before the AI tool generates content, marketers can input descriptions, tone of voice, and any key elements they wish to include.

Content quality will keep improving once people realize that artificial intelligence is not a ready-made solution but an assistant that needs a human touch and rechecking.

Google ranks good content based on user behavior and its value to the reader, whether or not AI was used to create it, as long as the content complies with Google's guidelines: https://developers.google.com/search/blog/2023/02/google-search-and-ai-content
