How to define robots.txt?

What is robots.txt

You can use Serpstat Site Audit to find all website pages that are closed in robots.txt.

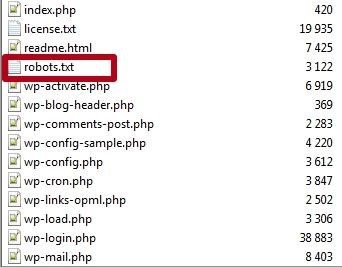

How to create a robots.txt file

The creation and location of the file are the most crucial aspects of it. Write a robots.txt file with any text editor and save it to:

What should be in robots.txt

The user-agent specifies which search engines the directives that follow are intended for.

The * symbol denotes that the instructions are intended for use by all search engines.

Disallow: a directive that tells user agents which content they must not crawl.

/wp-admin/ is the path that the user-agent can't see.

In a nutshell, the robots.txt file instructs all search engines to avoid the /wp-admin/ directory.
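To see that two-line file in action, here is a minimal sketch using Python's standard `urllib.robotparser`. The file text is reconstructed from the description above, and the robot name and test paths are only illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# The file described above: every robot is told to stay out of /wp-admin/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Hypothetical paths, used only to show both outcomes.
print(parser.can_fetch("Googlebot", "/wp-admin/options.php"))  # False
print(parser.can_fetch("Googlebot", "/blog/hello-world/"))     # True
```

Note that the standard-library parser only does plain prefix matching, so it is fine for a simple file like this one but should not be treated as a stand-in for how a particular search engine behaves.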

Why it's important to manage indexing

But if your site is more than a simple business-card website with a couple of pages (although even such websites have long been built on CMSs like WordPress, MODx, and others) and you work with any CMS (which means programming languages, scripts, a database, and so on), then you will come across such "trappings" as:

- page duplicates;

- garbage pages;

- poor quality pages and much more.

The main problem is that the search engine index gets something that shouldn't be there, like pages that bring no benefit to people and simply clutter the search results.

There is also such a thing as a crawl budget, which is the number of pages that the robot can scan in one visit. It is determined for each site individually. If a bunch of garbage pages is left open, useful pages can take longer to get indexed because there is not enough crawl budget left for them.

What should be closed in robots.txt

Even if you cannot close 100% of the issues at once, the rest can be closed at the indexing stage. You cannot predict every drawback in advance, and not all of them are caused by technical issues. You also need to take the human factor into account.

The impact of the robots.txt file on Google

Therefore, it is safer to close pages from indexing through the robots meta tag:

<html>

<head>

<meta name="robots" content="noindex,nofollow">

<meta name="description" content="This page….">

<title>…</title>

</head>

<body>

Online generators

All an online generator does is substitute the words "Disallow" and "User-agent" for you. As a result, you save no time and gain nothing useful.

Structure and proper setup of robots.txt

Robot Indication 1

- Directives for execution by this robot

- Additional options

Robot Indication 2

- Directives for execution by this robot

- Additional options

Etc.

The order of the directives in the file doesn't matter, because the search engine interprets your rules depending on the length of the URL prefix (from short to long).

It looks like this:

- /catalog - short;

- /catalog/tag/product - long.

I also want to note that the spelling is important: Catalog, CataloG and catalog are three different aliases.
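Here is a small sketch of that idea. It assumes the "longest matching prefix wins, and Allow wins a tie" behavior that Google documents for its own robots; other search engines may resolve conflicts differently. The function name `is_allowed` and the sample rules are hypothetical.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Return True if `path` may be crawled under `rules`.

    `rules` is a list of (directive, prefix) pairs such as ("Disallow", "/catalog").
    The longest matching prefix wins regardless of its position in the list;
    on a tie, Allow wins. Matching is a case-sensitive string comparison.
    """
    best_len = -1
    allowed = True  # no matching rule means the path is open
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


rules = [
    ("Allow", "/catalog/tag/product"),  # long prefix, listed first
    ("Disallow", "/catalog"),           # short prefix, listed second
]

print(is_allowed("/catalog/tag/product-1", rules))  # True
print(is_allowed("/catalog/other", rules))          # False
print(is_allowed("/Catalog/other", rules))          # True: different case, no rule matches
```

Reversing the order of the two rules gives the same answers, which is the point: only the prefix length and the letter case decide the outcome.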

Let's consider the directives.

User-agent directive

- User-agent: * (for all robots);

Google:

- APIs-Google - the user agent that the Google API uses to send push notifications;

- Mediapartners-Google - AdSense analyzer robot;

- AdsBot-Google-Mobile - checks the quality of advertising on web pages designed for Android and iOS devices;

- AdsBot-Google - checks the quality of advertising on web pages designed for computers;

- Googlebot-Image - a robot indexing images;

- Googlebot-News - Google News Robot;

- Googlebot-Video - Google Video Robot;

- Googlebot - the main tool for crawling content on the Internet;

- Googlebot - a robot indexing websites for mobile devices.
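To show how a robot picks the group addressed to it, here is a rough sketch with Python's standard `urllib.robotparser`. The rules and paths are made up for illustration, and the standard-library matching is simpler than what real search engines do, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: the image robot gets its own group,
# every other robot falls back to the "*" group.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /photos/

User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot-Image", "/photos/cat.png"))  # False
print(parser.can_fetch("Googlebot", "/photos/cat.png"))        # True
print(parser.can_fetch("Googlebot", "/search?q=shoes"))        # False
```

The image robot obeys its own group, while Googlebot has no group of its own here and therefore follows the rules written for `*`.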

Disallow Directive

Suppose the website has a search feature that generates URLs like these:

- /search?q=search-query

- /search?q=search-query-2

- /search?q=search-query-3

We see that all of these URLs share the /search prefix. We look at the website structure to make sure that nothing important starts with the same prefix, and then close the entire search from indexing:

Disallow: /search

Host Directive

Disallow: /cgi-bin

Host: site.com

Now it's enough to have a 301 redirect from the "Not main" mirror to the "Main" one.

Sitemap Directive

Sitemap: https://site.com/site_structure/my_sitemaps1.xml

Clean-param Directive

Crawl-Delay Directive

It seems that you can make the bot visit the website 10 times per second by specifying a value of 0.1, but in fact, you can't.

- Crawl-delay: 2.5 - Set a timeout of 2.5 seconds
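If you write your own crawler and want it to honor this directive, the value has to be read as a decimal number. Below is a minimal sketch; the helper name `parse_crawl_delay` is hypothetical, and for brevity it ignores which User-agent group the line belongs to.

```python
from typing import Optional


def parse_crawl_delay(robots_txt: str) -> Optional[float]:
    """Return the first Crawl-delay value in the text as seconds, or None."""
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "crawl-delay":
            try:
                return float(value.strip())
            except ValueError:
                return None  # malformed value, treat it as "not set"
    return None


delay = parse_crawl_delay("User-agent: *\nCrawl-delay: 2.5  # seconds\n")
print(delay)  # 2.5
```

A polite crawler would then pause for `delay` seconds between requests, for example with `time.sleep(delay)`.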

Addition

The * character matches any sequence of characters.

Use case:

You have products, and each product has reviews on it:

- Site.com/product-1/reviews/

- Site.com/product-2/reviews/

- Site.com/product-3/reviews/

We have different products, but the reviews share the same alias. We cannot close reviews using Disallow: /reviews, because the path doesn't start with /reviews, but with /product-1, /product-2, etc. Therefore, we need to skip the names of the products:

Disallow: /*/reviews

- Site.com/product-1/reviews/George

- Site.com/product-1/reviews/Huan

- Site.com/product-1/reviews/Pedro

If we use our option with Disallow: /*/reviews, George's review will die, and so will those of all his friends. But George left a good review!

Solution:

Disallow: /*/reviews/$

Yes, we could just get back to George's review using Allow and repeat two more times for two other URLs, but this is not rational, because if you need to open 1,000 reviews tomorrow, you won't write 1,000 lines, right?
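The difference between the two patterns is easy to check with a few lines of code. This is only a sketch of the wildcard logic described above: `*` is treated as "any sequence of characters" and a trailing `$` as "the URL ends here". The helper names are hypothetical, and real search engines may handle edge cases differently.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regular expression.

    `*` becomes "any sequence of characters"; a trailing `$` pins the end
    of the URL. Without `$`, the pattern behaves as a prefix match.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """Return True if the robots.txt pattern covers the given URL path."""
    return pattern_to_regex(pattern).search(path) is not None


print(matches("/*/reviews", "/product-1/reviews/"))          # True
print(matches("/*/reviews", "/product-1/reviews/George"))    # True: George is closed too
print(matches("/*/reviews/$", "/product-1/reviews/"))        # True
print(matches("/*/reviews/$", "/product-1/reviews/George"))  # False: George survives
```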

Six popular screw-ups in robots.txt

Empty Disallow

Disallow:

Name error

The file name is always spelled robots.txt

Folder listing

Disallow: /category-1

Disallow: /category-2

File listing

Ignoring checks

Always use a validator!
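A validator does not have to be complicated. The sketch below is a toy check, not a replacement for a real validator: the function name `lint_robots` is hypothetical, the list of known directives is simply the set named in this article, and the "several paths in one Disallow" check is my own reading of the folder listing mistake.

```python
# Directive names mentioned in this article (an assumption, not a full standard list).
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "host",
                    "crawl-delay", "clean-param"}


def lint_robots(robots_txt: str) -> list[str]:
    """Return human-readable warnings for a few common robots.txt slips."""
    warnings = []
    for number, raw_line in enumerate(robots_txt.splitlines(), start=1):
        line = raw_line.split("#", 1)[0].strip()  # ignore comments
        if not line:
            continue
        name, sep, value = line.partition(":")
        name, value = name.strip(), value.strip()
        if not sep:
            warnings.append(f"line {number}: missing ':' separator")
        elif name.lower() not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive '{name}'")
        elif name.lower() == "disallow" and not value:
            warnings.append(f"line {number}: empty Disallow blocks nothing")
        elif name.lower() == "disallow" and " " in value:
            warnings.append(f"line {number}: several paths in one Disallow")
    return warnings


sample = "User-agent: *\nDisallow:\nDisalow: /tmp\nDisallow: /category-1 /category-2\n"
for warning in lint_robots(sample):
    print(warning)
```

Running it on the sample prints three warnings, one each for the empty Disallow, the misspelled directive, and the line that lists two folders at once.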

Example of robots.txt

User-agent: * # Rules for all robots

Disallow: /wp-content/uploads/ # Close the folder

Allow: /wp-content/uploads/*/*/ # Open folders of pictures of a type /uploads/close/open/

Disallow: /wp-login.php # Close the file. You don’t need to do this

Disallow: /wp-register.php

Disallow: /xmlrpc.php

Disallow: /template.html

Disallow: /cgi-bin # Close folder

Disallow: /wp-admin # Close all service folders in CMS

Disallow: /wp-includes

Disallow: /wp-content/plugins

Disallow: /wp-content/cache

Disallow: /wp-content/themes

Disallow: /wp-trackback

Disallow: /wp-feed

Disallow: /wp-comments

Disallow: */trackback # Close URLs containing /trackback

Disallow: */feed # Close URLs containing /feed

Disallow: */comments # Close URLs containing /comments

Disallow: /archive # Close archives

Disallow: /?feed= # Close feeds

Disallow: /?s= # Close the URL website search

Allow: /wp-content/themes/RomanusNew/js* # Open only js file

Allow: /wp-content/themes/RomanusNew/style.css # Open style.css file

Allow: /wp-content/themes/RomanusNew/css* # Open only css folder

Allow: /wp-content/themes/RomanusNew/fonts* # Open only fonts folder

Host: romanus.com # Indication of the main mirror is no longer relevant

Sitemap: http://romanus.com/sitemap.xml # Absolute link to the site map

Robots.txt file for popular CMS

Therefore, if you use non-standard solutions or additional plugins that change URLs, etc., there may be problems with indexing and with closing the excess.

So I suggest that you get familiar with the robots.txt files for the following CMSs and take them as a basis:

- WordPress

- Joomla

- Joomla 3

- DLE

- Drupal

- MODx EVO

- MODx REVO

- Opencart

- Webasyst

- Bitrix

FAQ. Common questions about robots.txt

Does Google respect robots.txt?

According to the company, Googlebot no longer obeys the noindex directive in robots.txt. Because noindex in robots.txt was never an official directive, it is not supported. Google used to honor it unofficially, but this is no longer the case. Crawling directives such as Disallow are still respected.

How to upload a robots.txt file?

The robots.txt file saved on your computer must be uploaded to the site and made available to search robots. There is no specific tool for this, as the upload method depends on your site and server. Contact your hosting provider or look through their documentation yourself. After uploading the robots.txt file, check that robots can access it and that Google can process it.

Conclusion
