What pages should be closed from indexing

Reasons to close pages from indexing

Let's consider the reasons why you might close a whole website or individual pages from indexing:

Some content is not meant to appear in search results. This includes technical and administrative website pages, as well as pages with personal data. Besides, some pages can look like duplicate content, which search engines may treat as a violation and which can lead to penalties for the entire resource.

A crawl budget is the number of website pages that a search engine will crawl within a given period. It makes sense to spend that budget, and your server resources, only on valuable, high-quality pages. To get important content indexed quickly and efficiently, close unnecessary content from crawling.

What pages should be removed from indexing

Website pages under development

Website copies

Printed pages

In fact, a print version of a page is a copy of its main version. If the print version is open for indexing, the search robot may choose it as the priority page and consider it more relevant than the original. To properly optimize a website with a large number of pages, close print versions from indexing.

To hide the link to the print version, you can output that content using AJAX, close the print pages with the meta tag <meta name="robots" content="noindex, follow" />, or close all print pages from indexing in robots.txt.

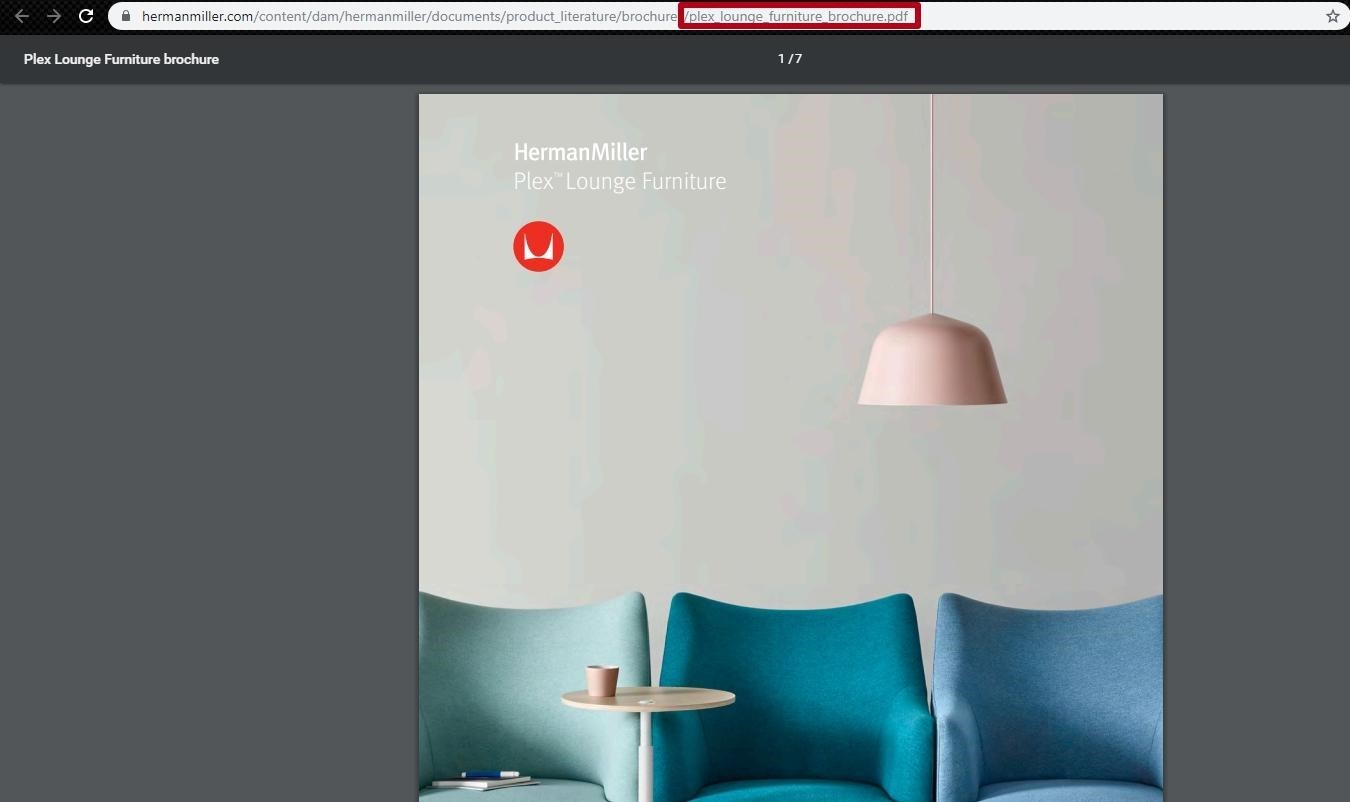

Unnecessary documents

Perhaps the content of these files doesn't meet the needs of the website's target audience, or the documents appear in the search results above the website's HTML pages. In either case, indexing the documents is undesirable, and it's better to close them from crawling in the robots.txt file.

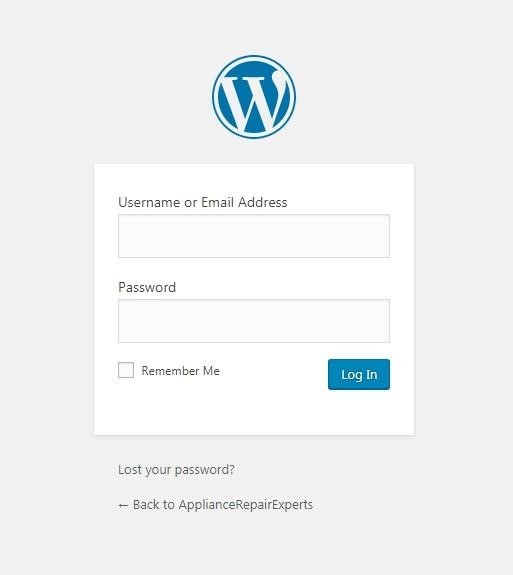
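Before relying on a robots.txt rule, it can help to check which URLs it actually blocks. The sketch below is a minimal illustration using Python's standard urllib.robotparser module. The /docs/ and /print/ paths and the example.com URLs are assumptions made up for this example, not rules from a real website. Note that this parser matches plain path prefixes, so wildcard patterns that some search engines support are not evaluated here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: the /docs/ and /print/ paths are assumptions for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /docs/
Disallow: /print/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file content as a list of lines, so no network call is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/docs/price-list.pdf",  # falls under Disallow: /docs/
        "https://example.com/catalog/",             # not covered by any rule
    ):
        print(url, "crawlable:", is_crawlable(ROBOTS_TXT, url))
```

Running a check like this against a copy of your own robots.txt is a quick way to confirm that documents are closed while regular pages stay open.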

User forms and elements

Website technical data

Personal customer information

Sorting pages

Pagination pages

How to close pages from indexing

Robots meta tag with noindex value in html file

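With this method, the page is closed from indexing even if external links point to it. Note that the search robot must still be able to crawl the page to see the tag, so the page should not be blocked in robots.txt at the same time.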
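To confirm that a page really carries the robots meta tag, you can scan its HTML for the directive. The following is a minimal sketch using only Python's standard html.parser module. The class name RobotsMetaParser, the helper has_noindex, and the sample markup are hypothetical names chosen for this illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from every <meta name="robots"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # An attribute without a value arrives as None, so normalize it to "".
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").strip().lower() != "robots":
            return
        for directive in attr.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


if __name__ == "__main__":
    # Sample markup made up for the example.
    page = '<html><head><meta name="robots" content="noindex, follow" /></head></html>'
    print("noindex present:", has_noindex(page))
```

This only inspects the HTML you pass in. It does not tell you whether a search engine has already processed the tag.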

To close only a piece of text from indexing, rather than the entire page, wrap it in the html tag: <noindex> text </noindex>. This tag is not part of standard HTML: Yandex recognizes it, but Google ignores it.

Robots.txt file

You can limit the indexing of pages through the robots.txt file in the following way:

User-agent: * #search engine name

Disallow: /catalog/ #partial or full page URL to be closed

.htaccess configuration file

AuthType Basic

AuthName "Password Protected Area"

AuthUserFile path to the file with password

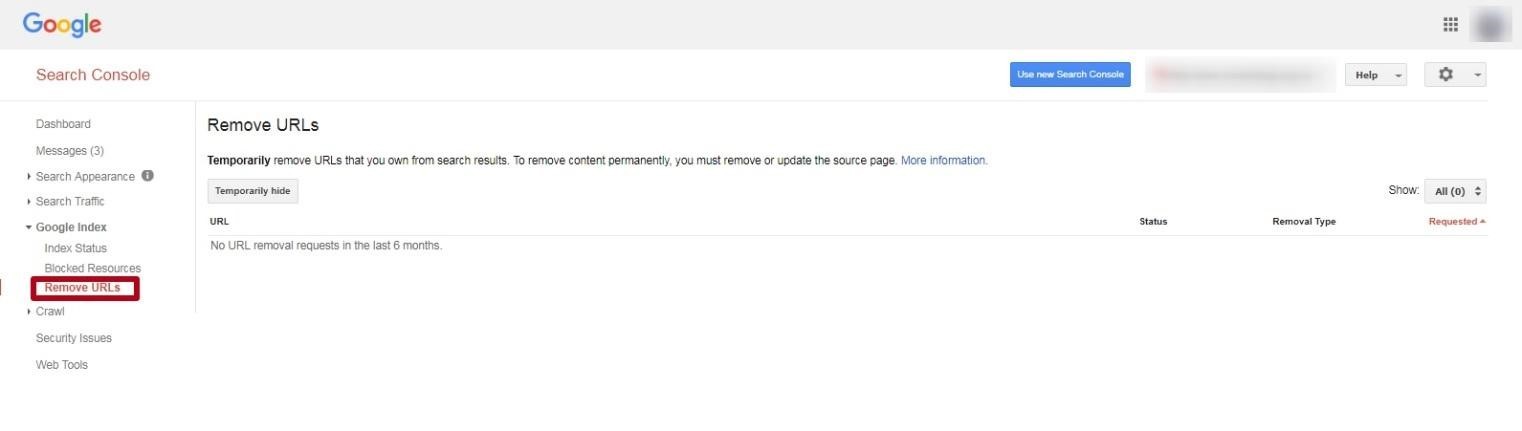

Require valid-user

Removing URLs through Webmaster Services

Conclusion

Restricting access to certain pages and documents will save search engine resources and speed up indexing of the entire website.
