Under The Hood: How We Build Processes In The Development Department

Max Astakhov

Product/Project Manager at Serpstat

This article answers the following frequently asked questions:

Why is this or that functionality a priority?

What stages does an idea go through before it gets into production?

What are the sizes of our teams, and how does each one work?

How is the problem of fixing a bug solved?

I will also describe how our processes are built. Newer teams may be able to learn from our experience and adopt parts of our flow, saving time and avoiding many of the mistakes we made.

Contents

1. Intro

2. Project? → Product? → CEO?

3. Where the ideas come from

3.1 Feedback/requests from users

3.2 Competitor analysis

3.3 Generation of ideas by team members

4. Validation of ideas, planning, thinking over details, design

5. Task preparation and sprint planning

6. Development, testing, release

7. Bugs, problems, user requests

8. Interaction with other departments: (Marketing, Sales and Customer Success, Support)

9. Routine, meetings

10. Sprint control, statistics collection

11. Afterword

Intro

At Serpstat, a sprint lasts 10 business days, which is 80 working hours per person. Other sources give different sprint lengths, from one week to a month.

A sprint starts on Tuesday and ends on Monday. We chose this schedule so that a sprint never ends on Friday with a release right before the weekend; if an issue occurs, nobody has to fix it on Saturday or Sunday.

My team has four developers and two testers. There are also two designers whose time we share with other teams.

The purpose of the sprint is to release at least one visible feature for users, fix bugs and optimize the service.

The team faces difficulties during the sprint due to bugs, requests from users, and team members' illnesses.

The remaining empty slots are used for questions, unscheduled meetings, description of tasks for future releases, studies of competitors, etc.

Sprint time was distributed in the following proportions:

40 hours to develop features;

20 hours for bugs and user requests with Critical or Blocker priority;

10 hours for meetings and current issues, including unplanned meetings;

10 hours for low-priority bugs (Low, Normal, High) collected at the beginning of the sprint.
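
The split above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python; the category keys are shortened labels invented for this example, and the 8-hour working day is inferred from 80 hours over 10 business days.

```python
# Hours per person in one sprint: 10 business days of 8 hours
# (the 8-hour day is implied by 80 hours / 10 days).
SPRINT_HOURS = 10 * 8

# Keys are hypothetical short labels for the four items listed above.
BUDGET_HOURS = {
    "features": 40,
    "critical_and_blocker": 20,
    "meetings": 10,
    "low_priority_bugs": 10,
}


def budget_shares(budget, total_hours):
    """Return each category's share of the sprint as a fraction of total_hours."""
    if sum(budget.values()) != total_hours:
        raise ValueError("the budget does not add up to the sprint length")
    return {name: hours / total_hours for name, hours in budget.items()}


shares = budget_shares(BUDGET_HOURS, SPRINT_HOURS)
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

The four categories add up to exactly 80 hours, so half of each person's sprint is reserved for feature development.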

Project? → Product? → CEO?

A few words about Project/Product Managers at Serpstat.

We are not the classic managers described in articles and books. Being a manager at Serpstat is an excellent opportunity to jump through hoops. We have established processes, but that doesn't mean they never change. It is a flexible, breathing system that responds to many factors. We follow a set of rules and a flow to achieve maximum efficiency, speed, and quality.

Each Project/Product Manager at Serpstat is a universal soldier who manages more than just a development team or a separate module. The manager searches for ideas or generates them, validates them with surveys and analytics, describes them, discusses them with the team, and works with the UX designer to get the simplest and most convenient layout.

Thinking an idea through takes quite a lot of time, as do the many small details that tie it to the rest of the product. We don't have separate technical writers, so writing the ToR (terms of reference) and documentation also falls entirely on the manager. On top of all this, monitoring the sprint, meeting with developers, keeping up team spirit, reporting, and much more are also part of a manager's work.

Microteams

Since October 2018, the entire development department has been divided into microteams. The current structure of the department is as follows: five PMs and a PM team lead, with 5 to 10 people in each team. Each PM is responsible for one Serpstat module.

The main objective of each team is a full development cycle, which also covers data storage, promotion tasks for the Marketing department, documentation for the Customer Support department, and demonstrations and presentations for the Sales and Customer Success departments.

My area of responsibility is the Rank Tracker module, which allows users to track site positions in the search results for specific keywords.

I also support the SERP Crawling feature, which is available for keywords through the API.

Where the ideas come from

Ideas at Serpstat come from several sources. An idea can reach implementation and deployment to production, or it can be dropped at one of the steps along the way:

Feedback/requests from users.

Analysis of competitors and the selection of interesting features.

Generation of ideas by team members.

Feedback/requests from users

Typically, there are two types of requests: direct and indirect.

Direct request: "Add sorting by frequency in the Keywords report for rank tracking."

Indirect request: "It is inconvenient to use the report because of the long name of the region since the column takes up a lot of space."

With a direct request, it is usually clear what needs to be done; with an indirect one, we discuss it with our UX designer and look for an acceptable solution to the user's problem.

Sources of such feedback:

1. Demo results for leads.

2. Feedback from Customer Support (when discussing potential problems with our users).

3. Feedback from the Sales/Customer Success department (when retraining our customers).

4. Direct requests from users in our Twitter chat and Facebook group.

5. Internal requests (requests from other departments that are not directly related to the development of the module).

6. Interviews conducted with clients by UX designers and PMs.

Competitor analysis

The PM of each module regularly monitors competitors' news about the functionality they have released. Each manager analyzes only the features that relate to their own module, which makes it possible to study specific features in more detail.

All interesting features are recorded in a special form and then discussed with the team lead.

For each feature, we add the following parameters:

Date of discovery.

The essence of a feature (briefly).

Screenshot or screencast.

Whether we already have it.

A comment on why it attracted attention.

The decision on whether to implement it (made with the team lead).

This is the first validation of ideas. The team lead's overall view of the product makes it possible to distribute the features we find between modules and teams correctly.

The next step is to add the feature to the backlog, which is stored in Google Spreadsheets with minimal detail and filters.

Generation of ideas by team members

These ideas come from three places:

Ideas that the PM of the team comes up with.

Solutions that team members propose while planning and implementing functionality. Each developer has the full right to suggest a different implementation option, and that proposal will be considered and evaluated.

Convenient additions that surface during functional testing and were not visible at the design stage. They are also analyzed and either implemented immediately or planned for one of the future iterations.

Validation of ideas, planning, thinking over details, design

Ideas are collected in a backlog and go through the initial screening with a teamlead.

Every 2-3 weeks, depending on how quickly requests accumulate, the entire team of PMs (including the team lead) assesses the viability of each idea and sets priorities within the product.

Previously, the only indicator that a particular feature needed to be implemented was the number of requests from different sources. This was risky: we could spend several sprints without a visible result while smaller features that could have simplified our customers' lives right away sat idle.

A few months ago, we adopted the RICE system as the basis for evaluation. Our team lead adapted it to the realities of the product so that we can evaluate our users' requests.

We evaluate each feature by four parameters:

Reach - an estimate of the percentage of active users who will use this feature:

5 - all users;

4 - users of three or more modules;

3 - users of two modules;

2 - all users of one module;

1 - individual users of one module.

The lead product manager sets this score when adding a feature to the backlog.

Impact - an estimate of the number of requests from users:

5 — >10;

4 — 8−9;

3 — 6−7;

2 — 4−5;

1 — 3.

The lead product manager sets this score when analyzing the tab where we store user feedback.

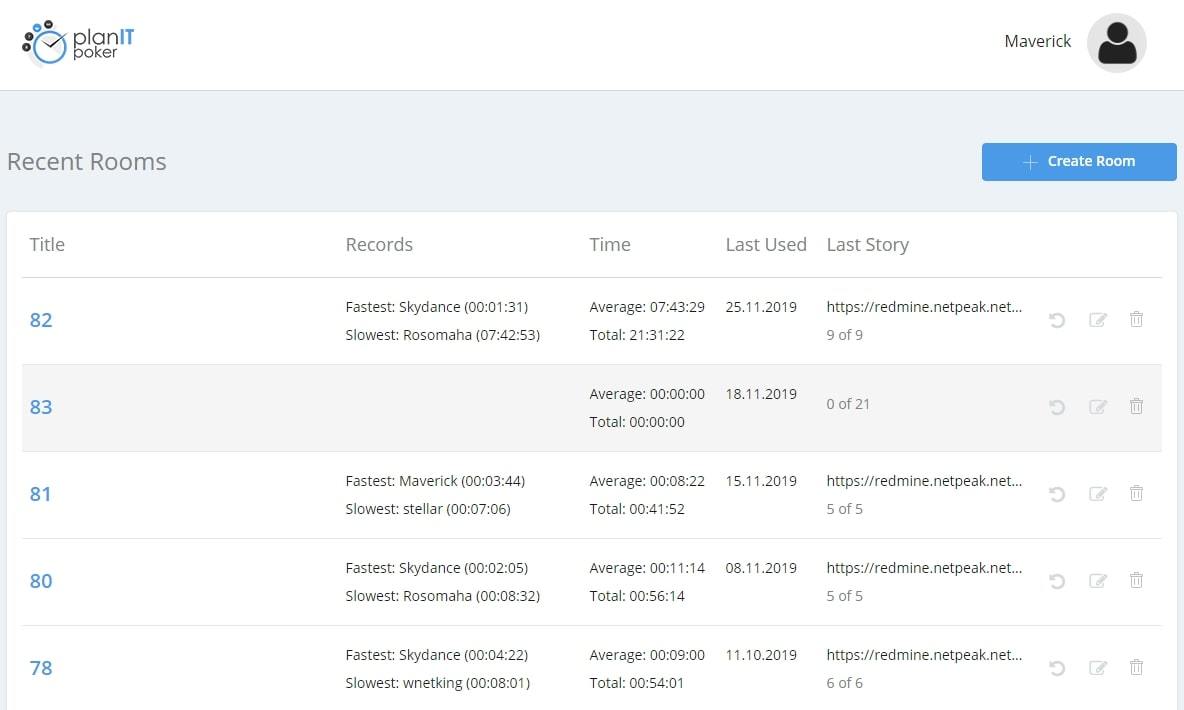

Confidence - a score given after a poker meeting with the PMs:

5 - sure;

4 - rather sure;

3 - 50/50;

2 - rather unsure;

1 - not sure.

The poker lead records it using the planitpoker service.

This parameter shows how useful each PM considers the feature for the product at this stage, given its current level of implementation.

Effort - an estimate of the number of sprints for implementation (1 sprint = 10 working days):

5 — >3;

4 — 3;

3 — 2;

2 — 1;

1 — <1.

Each PM estimates the approximate number of sprints needed to implement the feature. Sometimes preliminary research is required to make the estimate more accurate; for simple features, the PM can often estimate the time alone. The estimate covers all stages, from design to final testing and release to the production server.
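
The four scores above can be combined into a single priority number. The article does not say how the adapted system combines them, so the sketch below assumes the conventional RICE formula (Reach × Impact × Confidence ÷ Effort) applied to the 1-5 scores. The class, the function, and the two backlog entries are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiceScores:
    """The four 1-5 scores described above for one backlog idea."""
    reach: int
    impact: int
    confidence: int
    effort: int

    def __post_init__(self):
        for name in ("reach", "impact", "confidence", "effort"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")


def rice_priority(scores):
    # ASSUMPTION: the conventional RICE formula. The article does not state
    # how the adapted version combines the four scores.
    return scores.reach * scores.impact * scores.confidence / scores.effort


# Hypothetical backlog entries with made-up scores.
backlog = {
    "sorting by frequency": RiceScores(reach=2, impact=4, confidence=4, effort=1),
    "new report": RiceScores(reach=5, impact=3, confidence=3, effort=5),
}
ranked = sorted(backlog, key=lambda name: rice_priority(backlog[name]), reverse=True)
print(ranked)
```

Under this assumption, a small feature with many requests and a low effort score can outrank a larger one that reaches every user, which matches the motivation for moving away from counting requests alone.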

Once an idea has passed validation and received approval, we start collecting the initial requirements for the UX designer's ToR.

Requirements can come from competitor analysis, from direct user requests (which can often be combined into a single piece of functionality), and from interview results. The module manager describes the future vision of the tool and sets the task for the designer.

As work on the task progresses, the initial vision acquires details designed to improve the user experience: tips, validation of fields and input data, alerts with important information, and so on.

To simplify the designers' work, I compiled a checklist of best practices that must be taken into account when designing. Here are some examples:

design is checked for low monitor brightness;

the layout is tested not only on Retina displays but also on very basic ones;

all fields must have a label and a placeholder with a hint;

all buttons should show several states: pressed, inactive, on hover, etc.

When a draft of the layout is ready, it goes to the PM for review, edits, and comments.

Next, the PM tests the layout against behavioral cases. Adding even one field or button often entails many nuances that need to be reflected in the design.

The most frequently checked points:

What will happen if there is no data for the selected date?

What if you apply both filters and sorting?

How will the current functionality affect export?

How does the user know about the changes?

From here, the task can take one of several paths:

Collecting additional information on the use of particular functionality.

Returning to the designer with comments for revision.

Particularly important tasks are discussed with the teamlead or PO of Serpstat.

After that, the UI designer takes the layouts and creates the interface in its final form.

Task preparation and sprint planning

To prepare a task for estimation and inclusion in a sprint, we go through several mandatory steps.

1. The PM describes the task in detail; if both the front end and the back end are involved, the description is split into two parts so that the requirements don't get mixed up.

2. In addition to the detailed description, a link to the layout/design is attached.

3. The PM adds translation keys, which will later be used for texts visible to users.

4. The Marketing department is involved at this stage: they proofread and correct the texts to avoid errors and incorrect wording. This step is obligatory when releasing new reports and modules.

5. After this, the tasks are cross-proofread by other PMs, which helps catch mistakes.

6. After that, the team gets acquainted with the Story and Duty, and adjusts and clarifies the description. Usually, one to two days is enough.

A Story is a container for tasks that together make up a minimum working set of functionality visible to the user.

A Duty is a container for tasks that address optimization, technical debt, and other work invisible to the user.

While reading the tasks before the estimation meeting, each team member writes down questions or suggestions. The prospective assignee describes the proposed implementation approach, which is then evaluated.

After a task has been estimated for complexity and assigned, the next step is to split it into subtasks, which are estimated in hours.

An additional task is created for testing the functionality. One subtask should not exceed 7 hours so that it can be completed within a day, since about an hour goes to meetings and other things.

After all the Stories and Duties are split into subtasks, the PM plans how many of them can be taken into the sprint. The remaining time is used for bug fixes, both planned and unplanned.

If a Story or Duty turns out to be very large, the PM revises the functionality and splits it into several smaller ones.
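
The planning rules above can be sketched as a small capacity check. This is only an illustration under stated assumptions: it uses the 40 feature hours per person from the intro, pools those hours across developers (a simplification, since real subtasks have individual assignees), and takes containers in the order given. `Container`, `plan_sprint`, and the example items are hypothetical names.

```python
from dataclasses import dataclass, field

MAX_SUBTASK_HOURS = 7       # a subtask must fit into one working day
FEATURE_HOURS_PER_DEV = 40  # feature budget per person, taken from the intro


@dataclass
class Container:
    """A Story (visible to users) or a Duty (invisible to users) with its subtasks."""
    name: str
    kind: str  # "story" or "duty"
    subtask_hours: list = field(default_factory=list)

    def total_hours(self):
        return sum(self.subtask_hours)


def oversized_subtasks(container):
    """Return the subtask estimates that break the 7-hour rule."""
    return [h for h in container.subtask_hours if h > MAX_SUBTASK_HOURS]


def plan_sprint(containers, developers):
    """Take containers in the given priority order while they fit.

    ASSUMPTION: hours are pooled across developers.
    Returns the names taken and the hours left over.
    """
    remaining = developers * FEATURE_HOURS_PER_DEV
    taken = []
    for container in containers:
        if oversized_subtasks(container):
            raise ValueError(f"{container.name}: split subtasks over {MAX_SUBTASK_HOURS}h")
        if container.total_hours() <= remaining:
            taken.append(container.name)
            remaining -= container.total_hours()
    return taken, remaining


# Hypothetical example items.
items = [
    Container("keyword sorting", "story", [7, 5, 6]),
    Container("query optimization", "duty", [4, 4]),
]
print(plan_sprint(items, developers=1))
```

A container with a subtask above 7 hours is rejected rather than silently accepted, mirroring the rule that oversized work goes back to the PM to be split.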

Development, testing, release

After the sprint starts, developers work through their tasks in order of priority. When all the tasks for a piece of functionality are completed, the developer rechecks it on the test server and sends it to another developer for code review.

Code review is a process in which developers double-check each other's work for compliance with the general rules. After code review, a task either goes back for fixes or moves on to testing.

After the team lead accepts the task, a tester picks it up. The tester checks the functionality for compliance with the description, runs through all the positive use cases, and tests the most common negative ones. From testing, the task can go back for clarification of a newly discovered case, go back for fixes to the bugs found, or receive the "Ready" status.

On the last Thursday of each sprint, the team lead of the development department collects all the tasks that have the "Ready" status. They are merged into a separate release branch in GitLab and retested together to make sure that the features don't conflict with one another.

Thursday, Friday, and Monday are dedicated to testing these tasks and the bug fixes made during that time.

Tuesday is the day of release. All tasks that were tested together are deployed to the production server, where they become available to our users.
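
The weekly rhythm described above can be turned into concrete dates. The sketch below assumes that the release Tuesday is the day right after the sprint's final Monday (the text does not say so explicitly), and it ignores public holidays. The function name and the example date are chosen only for illustration.

```python
from datetime import date, timedelta


def sprint_calendar(start):
    """Return the key dates of a sprint that starts on the given Tuesday."""
    if start.weekday() != 1:  # Monday is 0, Tuesday is 1
        raise ValueError("a sprint starts on a Tuesday")
    return {
        "sprint start": start,
        # The sprint contains two Thursdays; the last one is 9 days after the start.
        "release branch built": start + timedelta(days=9),
        # The 10th business day is the second Monday.
        "sprint end": start + timedelta(days=13),
        # ASSUMPTION: the release happens on the Tuesday right after the sprint ends.
        "release": start + timedelta(days=14),
    }


# 2 January 2024 is an arbitrary Tuesday used as an example.
for event, day in sprint_calendar(date(2024, 1, 2)).items():
    print(f"{event}: {day:%a %d %b %Y}")
```

With this layout the release branch gets three working days of joint testing (Thursday, Friday, Monday) before it reaches production.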

Bugs, problems, user requests

Bug prioritization system:

1. Blocker: a feature doesn't work, and because of this other parts of the service cannot work either (for example, email sending, the report generator, registration, or authorization).

2. Critical: the bug prevents potential paid users from using the functionality (for example, the API, export, payment, or the referral program). This level also covers security holes and cases where a user spends more money than they should.

3. High: the bug prevents several paid or potential paid users from using the functionality (for example, subscribing to a newsletter or reading a blog article), and we cannot solve the problem through another entry point.

4. Normal: the bug prevents our paid or potential paid users from using the functionality, but we can solve the problem through another entry point (for example, the service doesn't load on one page).

5. Low: minor visual problems (broken pixels, picture not loading, button of the wrong color, etc.) that don't interfere with the main functionality of the pages.

In addition to the planned features and tasks of technical debt, bugs are also taken into the sprint.

All Blocker and Critical bugs go into the current sprint. Lower-priority bugs are planned as part of future sprints. They are fixed after the release branch is built (the release branch is a set of functionality that is tested separately and becomes available to users after release).

If the sprint already contains bugs of lower priority than those discovered during the iteration, the lower-priority bugs are replaced with the new ones.

If critical bugs need more time than is available, they displace features from the sprint for which development has not yet begun.

If bugs need more time than is available in the sprint, or there are several Critical and Blocker bugs, or the module doesn't work at all, developers from other teams are brought in. Only the Daily meeting remains obligatory. We declare a "military mode" in the department, which is controlled by the CTO. A PM keeps the CEO and the heads of other departments up to date and posts status updates in a dedicated Telegram channel.
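
The routing rules above can be expressed compactly in code. The following sketch models the five priorities and two of the rules: Blocker and Critical bugs enter the running sprint, and a newly found bug replaces a less urgent one that was already planned. The one-for-one swap that ignores hour estimates is an assumption made for brevity, and all names are hypothetical.

```python
from enum import IntEnum


class Priority(IntEnum):
    """Bug priorities from the list above; a smaller number is more urgent."""
    BLOCKER = 1
    CRITICAL = 2
    HIGH = 3
    NORMAL = 4
    LOW = 5


def goes_into_current_sprint(priority):
    """Blocker and Critical bugs are taken into the running sprint right away."""
    return priority <= Priority.CRITICAL


def replace_lower_priority(planned, new_bug):
    """Swap the least urgent planned bug for new_bug if new_bug is more urgent.

    ASSUMPTION: a one-for-one swap that ignores hour estimates.
    Returns a new list; the input list is left unchanged.
    """
    updated = list(planned)
    if updated and new_bug < max(updated):
        updated[updated.index(max(updated))] = new_bug
    return updated


planned = [Priority.HIGH, Priority.LOW]
print(replace_lower_priority(planned, Priority.NORMAL))
```

Using `IntEnum` keeps the comparison readable: "more urgent" is simply "smaller number", in the same order as the numbered list.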

Interaction with other departments: (Marketing, Sales and Customer Success, Support)

After the release, we send the departments a newsletter with a short list of the sprint's new features. The departments then use this information for promotion on social networks, sales, and training of existing users.

The Marketing department checks translations before features are deployed to the production server (currently English, Ukrainian, and Russian), receives tasks for promoting certain essential elements of the module, and writes articles and posts about module updates.

The Sales department receives information about newly released features and about individual users' requests that have been closed. This often becomes the starting point for a follow-up call with a lead to close the sale of a plan, since the functionality they needed is now available.

Information for the Customer Success department allows them to record a webinar or train our users on cases that can be solved with the new functionality.

The Support department gets a clear idea of what functionality is currently available and how it should work. Any deviation can then be regarded as a bug, and Support passes it to the QA department, which opens a bug report.

Routine, meetings

Daily (10-15 minutes)

Purpose:

To synchronize all team members regarding the current status of tasks and their daily plan.

The daily is held twice. First, the manager takes part in the team's own daily. After that, the manager takes part in the cross-team daily, which team members and all PMs attend.

The daily format implies answers to three main questions:

What did I do yesterday? (only something that can concern other members of the team)

What difficulties did I encounter? (even if they have already been resolved, we still discuss them)

What do I plan to do today?

Sprint approval with Lead PM, CTO, VP of Product (15-30 minutes)

Purpose:

To approve the sprint with heads, get comments, edits, or recommendations on the sprint plan.

All tasks planned for the sprint are subject to additional verification and approval by the VP of Product (at the moment this applies to the Search Analytics, Rank Tracker, and Site Audit modules).

This meeting is mandatory and is scheduled no later than three days before the sprint. There should be time left for making changes and for familiarizing the team with the tasks and their estimates.

Technically complex and essential tasks, meaning those that may affect the operation of other modules, or that involve data transfer and temporarily stop a module, are discussed with and approved by the CTO. The lead product manager approves the tasks of the other teams and less critical tasks.

Poker planning (60-90 minutes)

Purpose:

To evaluate all tasks according to the complexity index in SP (Story points) and discuss what is planned to be done in the next sprint.

Tasks are collected in a separate list and imported into the planitpoker service.

Difficulty is measured in Story Points on the scale 1, 2, 3, 5, or ?, where:

- 1 SP - small style changes.

- 2 SP - changes in the data format, alteration of components.

- 3 SP - writing a procedure from scratch or creating a new component.

- 5 SP - creating separate pages on the front end, writing several procedures, or altering them substantially.

- ? - I have questions regarding the description and can't evaluate it.

Assessment of the task consists of several processes:

1. A quick refresher on the essence of the task, followed by the primary vote. If the primary vote is unanimous, we move on to the next task. If the ratings differ or someone voted "?", a discussion begins.

2. First, those who voted "?" are asked for their questions; next, those who voted above or below the average rating explain their scores.

3. The task goes through a second round of voting.

4. Finally, the checklist template is reviewed; it shows what needs to be done for the task.
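
The voting round described above can be sketched in a few lines of Python. This is only an illustration of the rules, not how the planitpoker service works internally; the function name `evaluate_round` and the shape of the `votes` dictionary are assumptions made for the example.

```python
from statistics import mean

# Scale from the article: 1, 2, 3, 5 story points, or "?" for "can't evaluate".
SCALE = {"1", "2", "3", "5", "?"}


def evaluate_round(votes):
    """Process one voting round (hypothetical helper, not a real service API).

    votes maps a team member's name to their vote as a string.
    Returns (accepted_score, speaking_order):
      - a unanimous numeric vote is accepted and nobody needs to speak;
      - otherwise the score is None and the list names who explains first.
    """
    invalid = {v for v in votes.values() if v not in SCALE}
    if invalid:
        raise ValueError(f"votes outside the scale: {sorted(invalid)}")

    distinct = set(votes.values())
    if len(distinct) == 1 and "?" not in distinct:
        return int(distinct.pop()), []

    # Those who voted "?" are questioned first.
    questioners = [name for name, v in votes.items() if v == "?"]

    # Then those whose vote is above or below the average of numeric votes.
    numeric = {name: int(v) for name, v in votes.items() if v != "?"}
    outliers = []
    if numeric:
        average = mean(numeric.values())
        outliers = [name for name, v in numeric.items() if v != average]

    return None, questioners + outliers
```

For example, `evaluate_round({"Ann": "3", "Bob": "3"})` accepts the score of 3 straight away, while a round with mixed votes returns `None` together with the order in which people speak before the second round.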

Sprint retrospective (45-60 minutes)

Purpose:

To evaluate what has been fixed since the last sprint, check the progress of the team, give recommendations, and address the reasons for any sprint failures.

The sprint retrospective takes place the day after the sprint is released.

The meeting is divided into parts:

Sprint failures

The manager prepares a list of tasks that were not completed as part of the sprint. Each team member has the opportunity to express their point of view on the reasons for the failure. Based on these comments, the manager is required to take the necessary actions to prevent the situation from recurring.

Team KPI

At Serpstat, each developer is evaluated through a KPI system. Based on its results, the best developer of the month and the best team of the quarter are selected. During the sprint, each team member has access to the results and can track their standing.

Sprint control, statistics collection

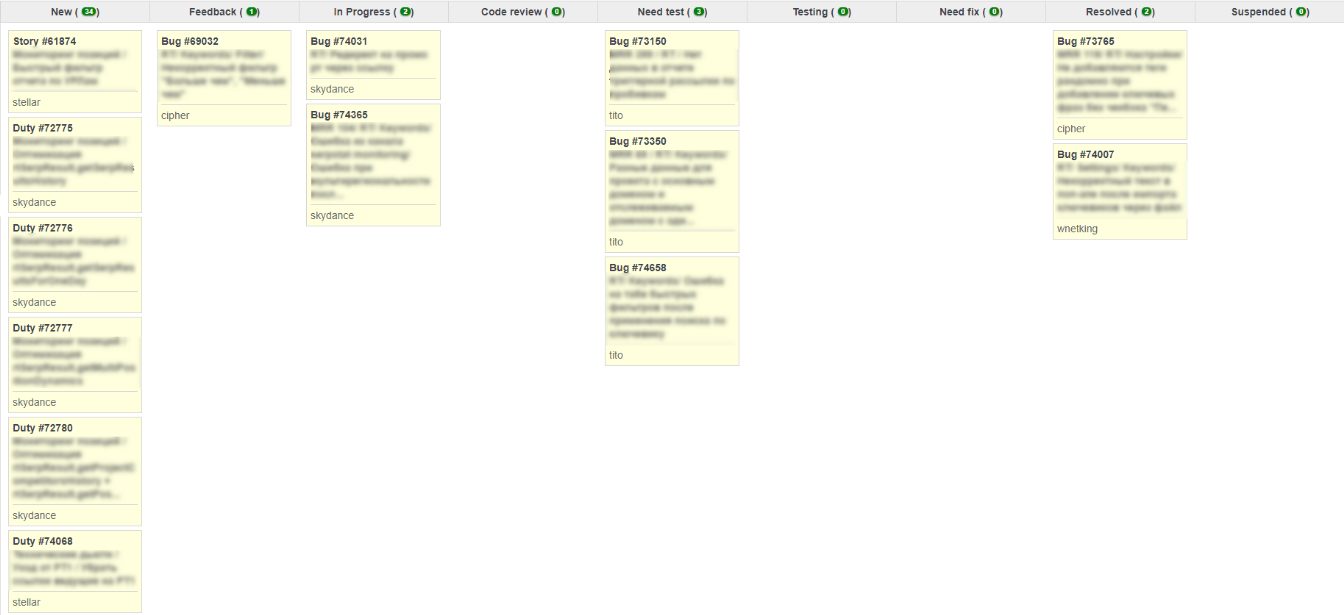

Our main tool is the open-source Redmine.

This is a fairly flexible platform that can be further customized with the help of a large number of plug-ins, both paid and free.

In Redmine, we store bugs and scheduled tasks for several sprints.

A special filter shows what is happening with the sprint and what state each task is in.

Only the most useful information is displayed: whose task it is, its status, the planned time, and the time spent.

We track a sprint success rate: the percentage of tasks planned at the beginning of the sprint that were completed and closed within it.

The metric was introduced to train teams to complete tasks on time and, as a result, to extend the planning horizon for releasing important features.
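
As a rough illustration of the sprint success rate, here is a minimal Python sketch. It assumes tasks are identified by numeric IDs and that tasks added after the sprint started do not count toward the metric; the function name `sprint_success_rate` and its inputs are hypothetical and not part of Redmine.

```python
def sprint_success_rate(planned_ids, closed_ids):
    """Return the share of initially planned tasks that were closed, in percent.

    planned_ids: IDs of the tasks planned at the beginning of the sprint.
    closed_ids:  IDs of the tasks completed and closed during the sprint.
    Tasks closed during the sprint but not in the original plan are ignored
    (an assumption based on the definition of the metric).
    """
    planned = set(planned_ids)
    if not planned:
        raise ValueError("the sprint has no planned tasks")
    completed = planned & set(closed_ids)
    return 100.0 * len(completed) / len(planned)


# Four tasks were planned; two of them were closed, plus one unplanned task.
rate = sprint_success_rate([101, 102, 103, 104], [101, 103, 999])
print(f"Sprint success rate: {rate:.0f}%")
```

Counting only the tasks from the original plan keeps the metric focused on planning accuracy rather than on the total amount of work done.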

Afterword

We have only just started working with the established flow. There are still imperfections in the processes, and every team member is working to eliminate them.

The important points we have learned during this time:

1. Experiments. To correct errors in the flow, it is necessary to experiment constantly.

2. Scaling. It is advisable to conduct experiments on a small group of people or one team. This limits the consequences of incorrect decisions, and changes are easier to make this way.

3. Documentation. It is important to constantly maintain documentation not only of the code or user behavior, but also of the processes and rules themselves. This information must be kept in writing and up to date, so that a new person or team can be brought up to speed at any time without wasting extra time on explanations.

4. Flexibility. If a rule does not work for some reason, that is not a reason to ignore it: the rule needs to be changed. The flow and rules should be as flexible, understandable, and automated as possible. If someone does not fully understand why this or that item is being implemented, it needs to be discussed.

I hope this article helped you understand what is happening in our company: how we prioritize, what we do with processes that do not work, and what difficulties we face. If you still have questions, I will be happy to answer them in the comments below.
