
How To Specify Robots.txt Directives For Google Robots

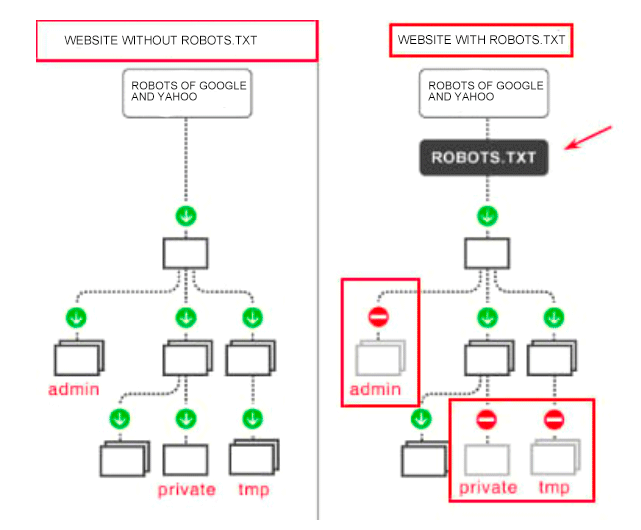

Why a website should have robots.txt

Normally, technical pages are closed for indexing. Cart pages, personal data, and customer profiles should also be closed to search robots, and the robots.txt file is the place to do that.

The document is created in a text editor such as Wordpad or Notepad++. It should be named robots.txt, with the ".txt" extension. Add the necessary directives and save the document. Next, upload it to the root of your website. Now let's talk more about the contents of the file.

The file can contain the following types of commands:

- allow scanning (Allow);

- close scanning access (Disallow);

- the crawl-delay;

- the host;

- map of the website pages (sitemap.xml).
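To make the list above more concrete, here is a minimal Python sketch that reads a robots.txt text and groups its values by directive name. The sample file and the crawl-delay value of 5 are assumptions made up for illustration, and the `group_directives` helper is hypothetical, not part of any library.

```python
from collections import defaultdict

# Hypothetical sample file used only for illustration.
SAMPLE = """\
User-agent: *
Allow: /
Disallow: /wp-admin
Crawl-delay: 5
Host: https://example.com
Sitemap: https://example.com/sitemap.xml
"""


def group_directives(text):
    """Group robots.txt values by lowercase directive name."""
    groups = defaultdict(list)
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or ":" not in line:
            continue
        # Split only once so that URLs keep their own colons.
        name, value = line.split(":", 1)
        groups[name.strip().lower()].append(value.strip())
    return dict(groups)


directives = group_directives(SAMPLE)
for name, values in directives.items():
    print(name, values)
```

The sketch only sorts lines by type. It does not decide which rule applies to which bot.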

You can use Serpstat Site Audit tool to find all website pages that are closed in robots.txt.

Characters in robots.txt

The symbol "*" means any character sequence. Thus, you can apply a rule to every path that contains a certain folder or file:

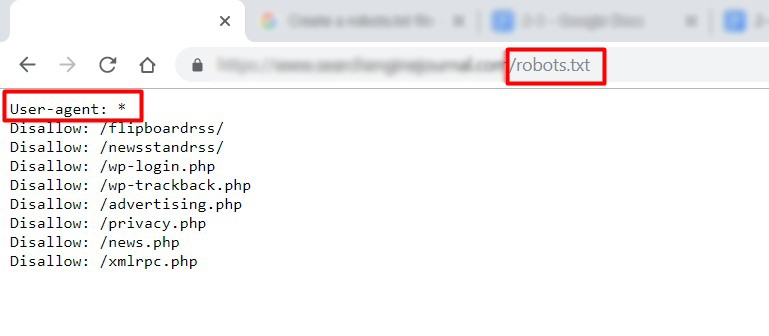

Disallow: */trackback

You can address a search engine bot via the User-agent directive followed by the name of the bot to which you apply the rule, for example:

User-agent: Googlebot

Checking robots.txt in Google

- Googlebot is a crawler indexing pages of a website;

- Googlebot-Image scans images;

- Googlebot-Video scans all video content;

- AdsBot-Google analyzes the quality of all advertising published on desktop pages;

- AdsBot-Google-Mobile analyzes the quality of all advertising published on mobile website pages;

- Googlebot-News assesses pages before they go to Google News;

- AdsBot-Google-Mobile-Apps assesses the quality of advertising of Android applications, similarly to AdsBot-Google.

For example, to close the whole website to the main Google crawler:

User-agent: Googlebot
Disallow: /
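A rule addressed to one bot can be checked locally with Python's standard `urllib.robotparser` module. This is a rough sketch, assuming the `Googlebot` user-agent token. The `SomeOtherBot` name and the page URL are made up for illustration. Python's parser is not Google's own matcher, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Rules that close the whole site to one bot only.
RULES = """\
User-agent: Googlebot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())  # parse from memory, no network request

# The page URL and the second bot name are hypothetical.
page = "https://example.com/page"
googlebot_allowed = parser.can_fetch("Googlebot", page)
other_allowed = parser.can_fetch("SomeOtherBot", page)

print("Googlebot:", googlebot_allowed)
print("SomeOtherBot:", other_allowed)
```

Bots that are not named in the file, and for which there is no `User-agent: *` group, are not restricted by it.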

Now, let's allow all bots to index the website to provide an example:

User-agent: *
Allow: /

Let's add the host of your website and the link to the sitemap. As a result, we'll get the following robots.txt for an HTTPS website:

User-agent: *
Allow: /

Host: https://example.com

Sitemap: https://example.com/sitemap.xml

Thus, we reported that our site could be scanned without any restrictions, and we also indicated the host and sitemap. If you need to limit scanning, use the Disallow command. For example, block access to the technical components of the website:

User-agent: *
Disallow: /wp-login.php
Disallow: /wp-register.php
Disallow: /feed/
Disallow: /cgi-bin
Disallow: /wp-admin

Host: https://example.com

Sitemap: https://example.com/sitemap.xml

If your website uses the HTTP protocol instead of HTTPS, don't forget to change the contents of the lines.
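To see how such Disallow lines behave, here is a simplified Python sketch that matches URL paths against the patterns from the example above, plus the `*/trackback` pattern from the section on characters. The `pattern_to_regex` and `is_blocked` helpers are hypothetical. The sketch handles only the "*" wildcard and ignores Allow rules, so it is an approximation rather than the way any search engine actually evaluates the file.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    Only the "*" wildcard is handled; every other character is literal.
    """
    parts = [re.escape(piece) for piece in pattern.split("*")]
    return re.compile(".*".join(parts))


def is_blocked(path, disallow_patterns):
    """Return True if the path matches any Disallow pattern from its start."""
    # Empty patterns are skipped: an empty Disallow closes nothing.
    return any(p and pattern_to_regex(p).match(path) for p in disallow_patterns)


DISALLOW = [
    "/wp-login.php",
    "/wp-register.php",
    "/feed/",
    "/cgi-bin",
    "/wp-admin",
    "*/trackback",
]

# Hypothetical paths used only to try the rules out.
for path in ["/wp-admin/options.php", "/blog/post/trackback", "/blog/post"]:
    print(path, "blocked" if is_blocked(path, DISALLOW) else "open")
```

Each pattern is matched from the beginning of the path, so `/wp-admin` also closes everything inside that folder.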

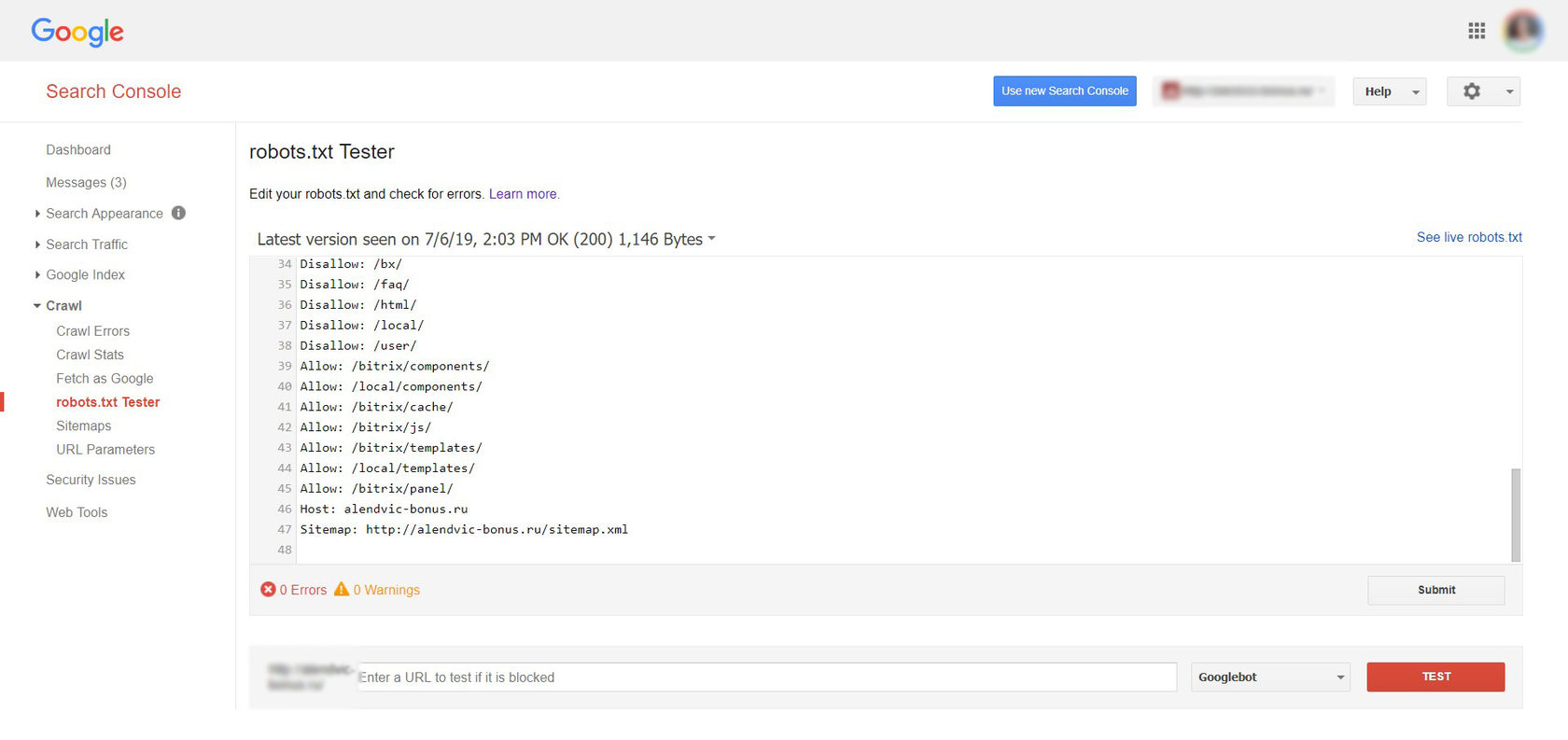

Here's an example of the real file for a web resource:

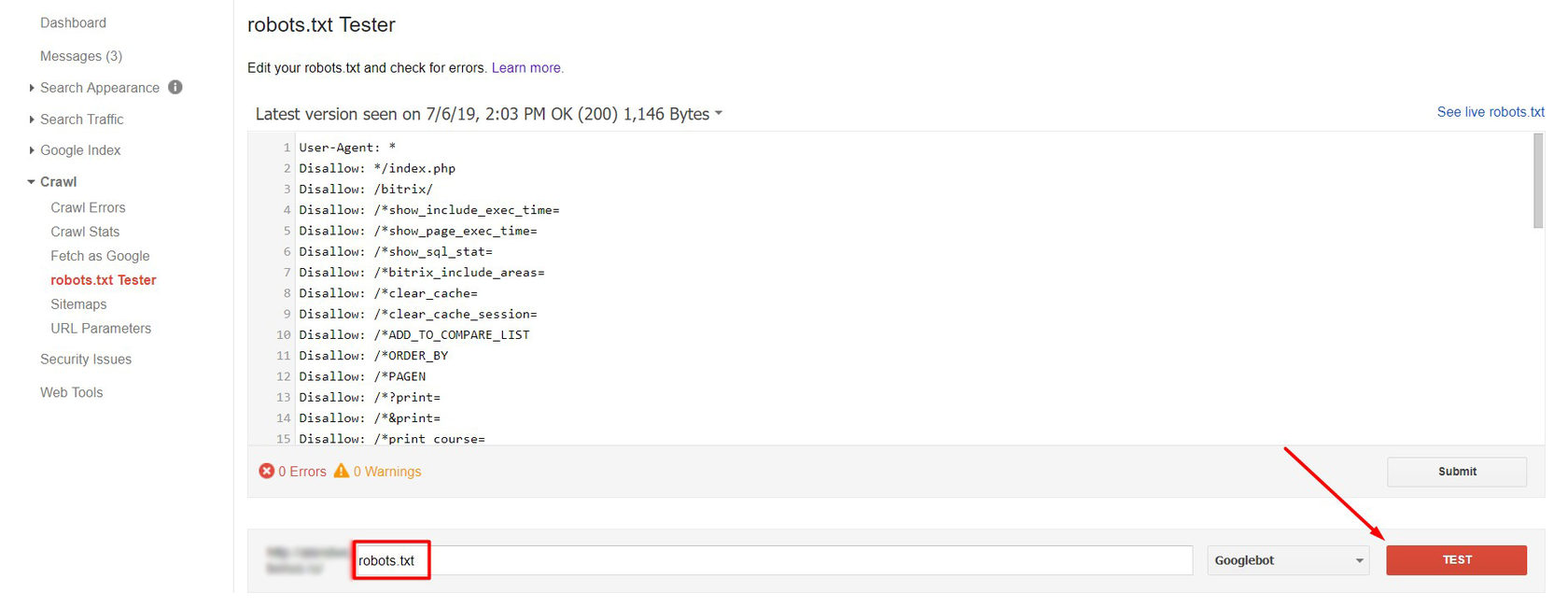

Checking the accuracy of robots.txt

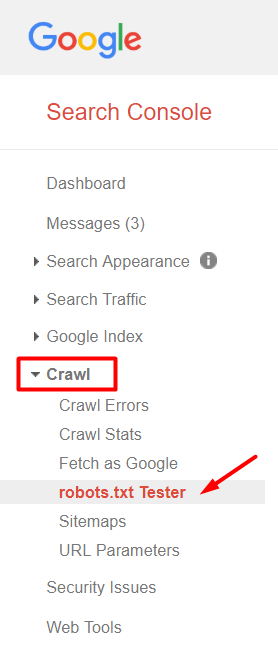

Go to the panel and select the robots.txt Tester tool in the left-hand menu:

Conclusion

Specify which search robots you are addressing and give them commands as described above.

Next, verify the file accuracy through built-in Google tools. If no errors occur, save the file to the root folder and once again check its availability by clicking on the link yoursiteadress.com/robots.txt. If the link is active, everything is done correctly.
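The availability check can also be scripted. Below is a minimal Python sketch, assuming the file must sit at the root of the site. The `robots_url` and `robots_is_available` helpers are hypothetical names, and the second one performs a real network request, so it is defined here but not called.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url):
    """Build the root-level robots.txt URL for any page of a site."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, drop the path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def robots_is_available(site_url, timeout=10):
    """Return True if robots.txt answers with HTTP 200 (network request)."""
    try:
        with urlopen(robots_url(site_url), timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False


print(robots_url("https://example.com/blog/post?x=1"))
```

Calling `robots_is_available("https://example.com")` would then report whether the uploaded file can be reached.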

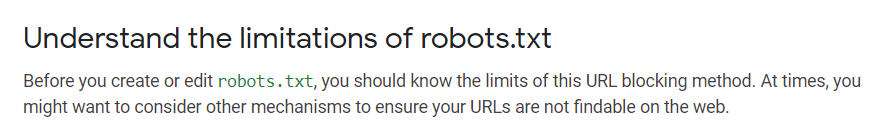

Remember that robots.txt directives are advisory, and you need to use other methods to completely ban page indexing.

