Start Exploring Keyword Ideas

Use Serpstat to find the best keywords for your website

Decoding the Process: A Deep Dive into How Search Engines Crawl and Index the Web

SEO standards and requirements are constantly changing, so professionals have to pay attention to their strategies and adapt them on time for prominent results in the long run. It isn’t enough to establish an SEO-friendly website. By engaging the site’s crawlers used by Google to look for pertinent content, it is possible to enhance your page’s credibility, visibility, and traffic.

Finding and organizing tons of information on an estimated 1.7 billion websites lies behind these complicated terms, but getting a closer look at their peculiarities will be handy for SEO practitioners.

Our Twitter Chat experts will share their experience:

Join #serpstat_chat to discuss trends, and updates with SEO experts.

We conduct them every Thursday at 2 pm ET | 11 am PT on our Twitter channel by hashtag #serpstat_chat.

What Does It Mean to “Index a Page”?

Beginners in the SEO field commonly interchange indexing and ranking. However, this is a mistake of an epic-failure level. Indexing should happen throughout the entire website development process. If some content is indexed at a poor speed or non-indexed at all, it is impossible to gain organic traffic and achieve your marketing and other goals.

To “Index a Page” means the page is included in the storage system (database etc.) and has associations applied (such as keywords, intent type, etc.).

What is Google indexing in SEO? For specialists, this term refers to the search engine’s database. It is vital to ensure your site is indexed. Otherwise, users will never be able to find any content it shares. Aside from understanding that indexing helps your page appear in SERPs, SEO specialists can prepare more custom web development strategies once they discover different types of indexes:

- The Google index is considered a primary source, which determines the role of various search phrases and keywords. In turn, it influences the informational weight of your domain and how likely your site will appear in SERPs when a user types in matching queries.

- There are various kinds of indexes, distinguishing unique features which will boost performance advantages.

If your pages are not indexed, they won't appear in search engine results. It is therefore essential to check if your pages are indexed. You can use a tool like IndexCheckr that will check if they are indexed by Google. If they are not, it's time to figure out why and fix the issue, usually by improving the content.

“Indexing” means Google has crawled and created an “understanding” of the page and saved that understanding for use as a possible result. If the page ranks relative to other competing pages for the query in question, the page will display in a SERP.

If your site isn’t crawling-friendly, it means it can’t be indexed and has no value on the internet. SEO efforts can technically advance your page and ensure that bots and spiders of search engines can view and analyze all of its content without difficulty.

Are Crawling and Indexing a Page the Same Thing?

These processes can’t be considered synonymous since they signify different stages of Google Search operation:

- First of all, Google retrieves photos and other visual digital files from web sources using automated tools. What is a web crawler, and what function does it serve in a search engine? Uncovering new URL links by visiting familiar pages to extract hidden hyperlinks to other domains is how crawlers work in search engines. These instruments are usually bots and spiders. If you check the user agent string, it is possible to locate them.

- Indexing mechanisms come next after crawlers in search engines. It is a localized search of the page's video, image, and text files. All the data is then stored in the Google database, known as its index.

- The final stage occurs when users’ queries come into the system and are analyzed by it to prepare matching information from the Google database.

Crawling finds the content and sends it back to be processed by Google. This is where the decision is made on whether to index the page. Indexing is breaking the page into elements, transforming the words into tokens, and storing them in data tables.

The search engine index can be compared with a list of contents, where entries are shown in detail. According to Google, this system is steadily developing and increasing its volume — the number of pages has exceeded hundreds of billions already.

Crawling is reading the code for each page on a site utilizing the directives (server and client-based), reading the nav & folder structure and taxonomy & following the links across the site to facilitate understanding the IA & ultimately relevance of a site.

The search engines' sophisticated mechanisms are built to consider numerous indicators contributing to determining the significance and relevancy of every page. This is necessary to deliver matching results to the end users' queries. Since Google does keep these signals confidential pieces of data, understanding the principles of crawling and indexing assists in preparing lasting and custom SEO strategies.

What Is the Difference between Indexing and Caching a Page?

Crawling and indexing in search engines aren’t the only processes that lead to SEO specialists’ confusion. Let’s determine what distinguishes indexing and cashing.

A page does not have to be cached to appear in search results. A cached version is a copy retained by the search engine. Search engines make decisions to retain copies of pages for reasons not shared.

Both processes happen in Google and other systems of this kind. Still, their purposes aren’t the same:

- When Google visits your domain and adds its content to its database, it means your site is now indexed.

- When Google visited your site last time and captured a screenshot to save the details if something might go wrong, it means your page is now cached.

Caching is literally “storing a copy” of what was crawled (served to the bot). It is separate from Indexing and has no impact/influence on Indexing (you can be indexed, but there be no Cache due to an error or the usage of “no archive

or “meta robots).”

Another big difference is that caching is based on more localized storage of the pages — it can also happen on your computer.

Does a Page Have to Be Indexed to Appear in Search Results?

As has already been highlighted, populating your content on the internet is impossible without indexing. There are several ways to get indexed in Google, but the simplest one is accessible in Google Search Console. Your task is to create an account, share the details about your newly established page, verify your ownership, and request indexing from the system.

It can take a few days for a crawled page to be indexed, but sometimes QDF (query deserves Freshness) or lack of competition and good on-page SEO will see a page in results before it is indexed.

Another method of checking whether your website has a ready index status is using this formula in the search bar — site:yourdomainname.com. The number of results will represent how many pages are already indexed.

How Can You Influence Google Indexing Your Content?

To succeed in technical SEO, specialists must increase their awareness of the indexing and crawling process. Google, Bing, and other systems constantly level up their techniques from this perspective. This knowledge helps create functional and efficient domain search visibility tools and tactics.

• Permitting crawling (robots.txt)

• Aiding crawling (sitemap(s), internal links)

• Permitting indexing (robots meta/header)

• Reducing duplicates (better organization, 301s, CLE/CLR/CLS)

• Obtaining Inbound Links

• Higher quality content

• Greater originality

When it comes to indexing website in Google, several SEO strategies will help you get the desired result:

- Utilize Google Search Console features to keep track on your sites’ indexes. This way, you can update your search engine optimization campaign and solve different crawl errors — from classic 404 notifications to robots.txt and server issues.

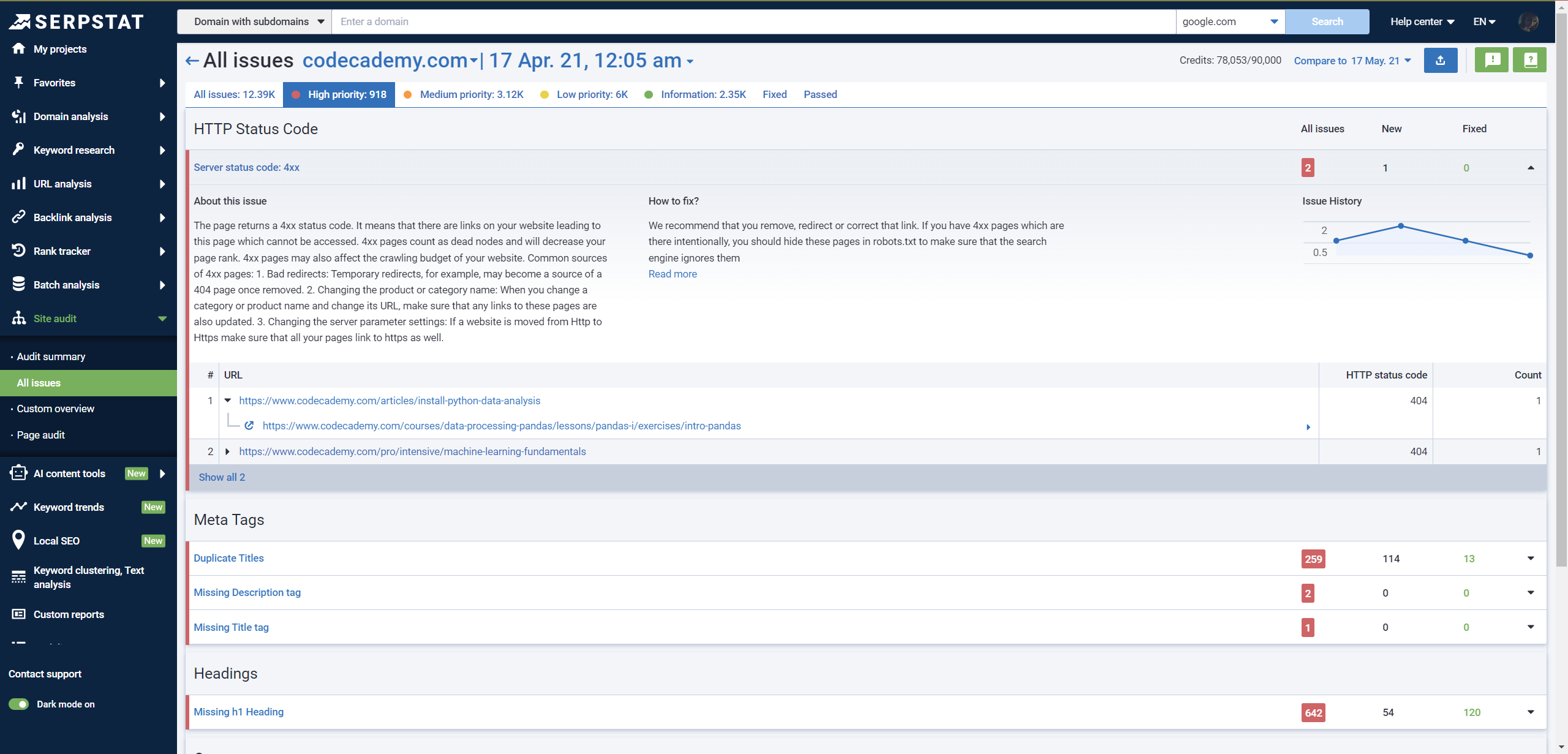

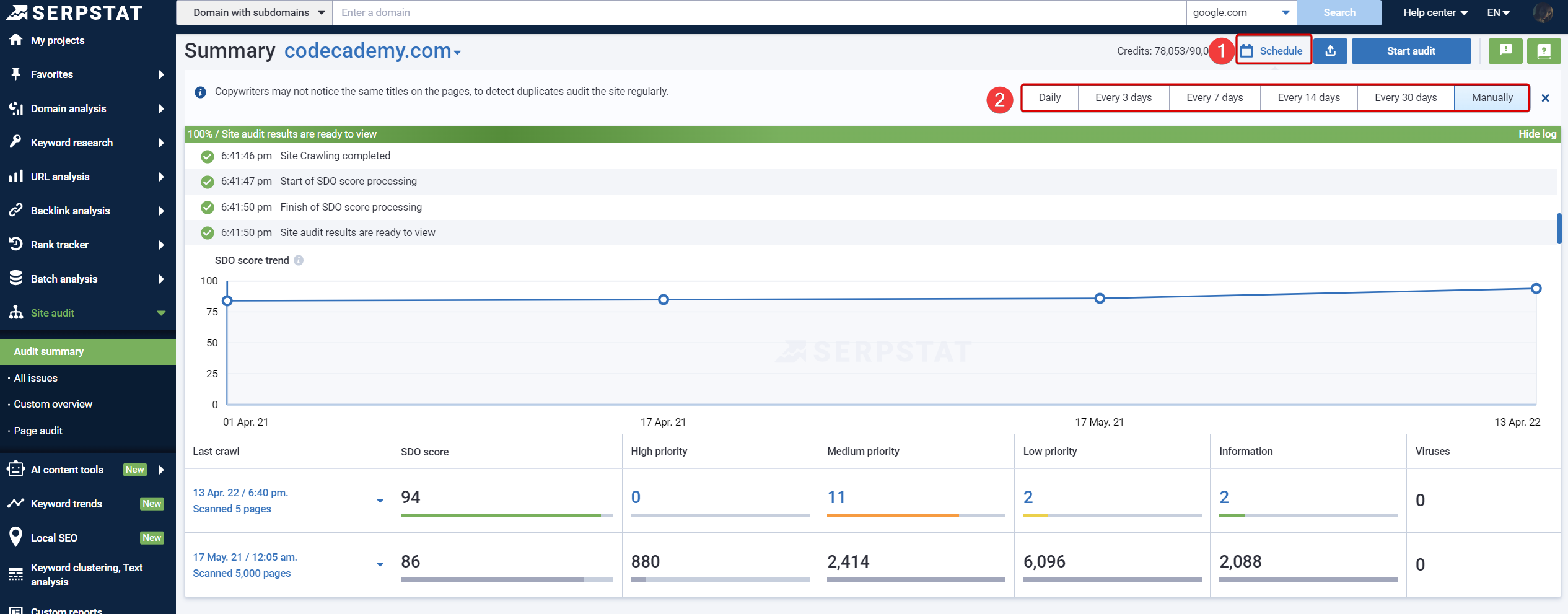

To improve your website's search engine optimization and boost your visibility on Google, it's essential to promptly identify and address critical issues. One of the best ways to achieve this is by conducting a thorough technical SEO audit using automated tools. This process will enable you to evaluate individual pages for technical issues by scanning them separately and keep track of your site's optimization level progress by monitoring growth dynamics.

To make the most of your technical SEO audit, consider using Serpstat's detailed recommendations:

Additionally, setting up automatic checks to detect issues and track progress in resolving them can help ensure you stay on top of any problems.

Discover the benefits of Serpstat with a 7-Day free trial

By conducting an SEO audit, you'll gain valuable insights into your website's strengths and weaknesses, allowing you to make data-driven decisions to enhance your online presence. At the end of the trial, you'll have the opportunity to either keep using our platform or discontinue.

Get Started- Taking your time to invest in an intuitive and fully responsive design might not seem the main task of SEO practitioners, but it will influence their operation. Desktop copies are duplicated in the index system for the missing mobile interface, but it usually reduces your page rankings. Using meta tags and working on the size of UI elements are efficient steps to succeed.

Don't underestimate internal linking —it helps search engines discover other pages.

- Update your interlinking scheme. Its consistency is a valuable long-term SEO strategy that will keep your content properly organized and indexed. If there are not very popular pages on the site, it is essential to increase their index value to prevent significant issues with the domain overall.

Explanation of the Importance of Server Logs for Website Indexing and Optimization

To start with, let’s define the meaning of server logs. Simply put, this term refers to text files with records of every activity connected to a specific server for a predetermined time. Insights into crawl priority from these records and their analysis are unmatched, allowing SEO experts to adjust their budget, strategy development, and management plan to obtain higher ranks in SERPs.

When I can, I check server logs. Unfortunately, there's a strong trend for shared hosts to disable SALs (Server Access Logs) to save resources, and CDNs stuff up Localised SALs, and often don't have CDNALs unless you pay extra for them!

Next best thing, Web Tracking.

Server logs indicate the data about search entries and provide an accurate representation of the periodic changes in requests and responses provided within the system. With HTTP response codes, URL links, IP addresses of the site visitors, and more, SEO experts can collect valuable pieces of information:

- One of the methods to utilize the potential of server logs is to analyze its data and separate good bot traffic coming from systems like Googlebot from poor sources with little SEO relevance.

- Thanks to the analysis of server logs, SEO specialists can understand how soon Google or Bing recrawls the site and its pages. If you see this period is particularly lengthy and takes months, it is a red flag to take action and locate indexable pages.

The SEO world would be a better place if we did ensure Googlebot is able to index content through server logs.

The Impact of DeepRank on Indexing Content

Established as BERT in 2019, the DeepRank system is a suite of tools and algorithms responsible for deep learning instruments for up-market ranking aspects. This Google Search incentive goes for rendering in-depth relationships in the languages as humans do.

DeepRank is googles AI for SERPs. While SEOs can and should be looking at a good amount of academic research GOOG has published on DeepRank, they have also published that provides a good overview.

There are several reasons DeepRank is worth it for SEO experts:

- This metric is a highway to determine indexable pages linked to their website.

- Analyze primary URLs and their values along with duplicates.

- These calculations are also efficient in defining what SEO trends are distinguished in your site’s SEO system. Once you are aware of them, it is simpler to make any desired alterations.

Deep ranking can give indexing a number of advantages. Help identify content more accurately and reduce the need for manual tagging. It can enable more efficient indexing and improve search accuracy by better understanding the content. Deep ranking can fine-tune.

Key Takeaway

All things considered, determining the influence of crawling and indexing processes in Google and other search engines is crucial for SEO specialists. Being an SEO practitioner doesn’t mean being able to locate a suitable keyword scheme with a few functional tools. Keeping your knowledge up-to-date ensures your tactics and strategies will hit higher rankings and more visibility of your SEO-optimized pages from a long-term perspective.

Discover More SEO Tools

Backlink Cheсker

Backlinks checking for any site. Increase the power of your backlink profile

API for SEO

Search big data and get results using SEO API

Competitor Website Analytics

Complete analysis of competitors' websites for SEO and PPC

Keyword Rank Checker

Google Keyword Rankings Checker - gain valuable insights into your website's search engine rankings

Recommended posts

Cases, life hacks, researches, and useful articles

Don’t you have time to follow the news? No worries! Our editor will choose articles that will definitely help you with your work. Join our cozy community :)

By clicking the button, you agree to our privacy policy.