
Search Quality Metrics: What They Mean And How They Work

This article covers what we know about the metrics search engines use, the fundamental problems involved, and existing learning approaches.

By relevance, we mean the degree to which an object is related to a specific query. Suppose we have a request and several objects that correspond to it in one way or another. The better an object matches the request, the higher its relevance. The task of ranking is to return the most relevant objects first in response to a request. The higher the relevance, the higher the likelihood that the user will take the targeted action (go to the page, buy a product, watch a video, etc.).

As information retrieval systems develop, ranking becomes more and more important. The problem arises everywhere: when building search results pages and when recommending videos, news, music, goods, and more. Learning to Rank exists to solve it.

Learning to Rank, or machine-learned ranking (MLR), is a branch of machine learning that studies and develops self-learning ranking algorithms. Its main task is to determine the most effective algorithms and approaches based on their qualitative and quantitative assessment. Why did the problem of learning to rank arise?

For example, take a page of an information resource, such as an article. The user enters a query into a search engine that already holds a collection of documents. Based on the query, the system retrieves the matching documents from the collection, ranks them, and returns those with the highest relevance first.

Ranking is performed with a ranking model f(q, d), where q is the user's query and d is the document. Classical f(q, d) models work without self-learning and don't consider the relationships between words (for example, Okapi BM25, the vector space model, and the binary independence retrieval (BIR) model). They calculate a document's relevance to the query based on how often the query words occur in each document. With the current volume of documents on the Internet, search results based on such simple models may not be accurate enough.
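Okapi BM25, one of the classical models named above, fits in a few lines. This is a minimal sketch assuming whitespace-tokenized text, parameter values k1 = 1.5 and b = 0.75 (within the commonly used range), and a non-negative IDF variant; real engines differ in tokenization, parameters, and IDF details. Note that the score depends only on how often query words occur in each document, which is exactly the limitation described above.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each tokenized document against a tokenized query with Okapi BM25."""
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if term not in tf:
                continue  # a missing query word contributes nothing
            # IDF with +1 inside the log so rare and common terms stay non-negative.
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = freq + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "cheap running shoes for men".split(),
    "running tips for beginners".split(),
    "chocolate cake recipe".split(),
]
query = "running shoes".split()
scores = bm25_scores(query, docs)
ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
print(ranking)  # [0, 1, 2]
```

The document containing both query words ranks first, and the one containing neither scores zero; no notion of meaning or word relationships enters the calculation.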

Such algorithms also have one significant drawback: the input data must strictly conform to the rule, and the rule must strictly follow the author's intent.

If we set out to manipulate the algorithm's output, we could do so easily, because such an algorithm is designed on the assumption that nobody will try to manipulate it.
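To see why a rule-based score is easy to game, consider a deliberately naive scorer that just counts query-word occurrences. The function `tf_score` and both sample texts are hypothetical, chosen only for illustration; no real search engine is assumed to score pages this simply.

```python
from collections import Counter


def tf_score(query, doc):
    """Hypothetical naive relevance: total occurrences of query words in the document."""
    counts = Counter(doc.lower().split())
    return sum(counts[term] for term in query.lower().split())


query = "running shoes"
honest = "A practical guide to choosing running shoes for trail and road"
stuffed = "running shoes running shoes best running shoes cheap running shoes"

print(tf_score(query, honest))   # 2
print(tf_score(query, stuffed))  # 8
```

The keyword-stuffed text, which tells the reader nothing, outscores the genuinely useful one simply by repeating the query.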

The problem arose when search began to be monetized. From then on, site owners had an incentive not only to submit documents for analysis but also to present them in a way that gave them an advantage over competitors. As a result, search results based on simple models can no longer be accurate enough.

Training data consists of queries, documents, and the degree of relevance of each document to its query.

The degree to which a document matches a query can be determined in several ways. The most common approach assumes that a document's relevance is based on several indicators. The better the document does on an indicator, the higher its score for that indicator. Relevance scores come from a set of search engine labels on a scale from 0 (irrelevant) to 5 (completely relevant), and the scores for all indicators are summed.

As a result, the most relevant document is the one with the highest total score across all indicators. This training data is then used to build ranking algorithms that calculate the relevance of documents to real queries.
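The summing scheme described above can be sketched as follows. The indicator names (`topic_match`, `freshness`, `completeness`) and their grades are hypothetical placeholders, since the text does not say which indicators are used; only the 0 to 5 scale and the summation come from the description.

```python
def total_relevance(grades):
    """Sum per-indicator grades, each on a 0 (irrelevant) to 5 (completely relevant) scale."""
    for name, grade in grades.items():
        if not 0 <= grade <= 5:
            raise ValueError(f"grade for {name!r} must be in [0, 5], got {grade}")
    return sum(grades.values())


# Hypothetical judgments for three documents on one query.
judgments = {
    "doc_a": {"topic_match": 5, "freshness": 2, "completeness": 4},  # total 11
    "doc_b": {"topic_match": 3, "freshness": 4, "completeness": 3},  # total 10
    "doc_c": {"topic_match": 1, "freshness": 1, "completeness": 2},  # total 4
}

ranked = sorted(judgments, key=lambda doc: total_relevance(judgments[doc]), reverse=True)
print(ranked)  # ['doc_a', 'doc_b', 'doc_c']
```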

However, there is an important nuance: user queries must be processed at high speed, so it is impossible to apply complex scoring schemes to every document for every query. Therefore, the check is carried out in two stages:

Features are divided into three groups:

To learn more about content quality, I suggest reading Google's search quality evaluator guidelines.

It's also important that the result has few or no relevance issues. One example of such an issue is a page that stops being helpful for the query as time passes. This is very common for queries with news intent, whose results can quickly become stale if they don't include the latest developments in the target story.

I believe that understanding the qualitative aspect of how search relevance works is much more important for marketers than trying to understand the actual metrics and science behind information retrieval systems. To achieve that objective, here is what I suggest:

Get into the habit of putting yourself in the search engine's shoes (and, yes, I know that everybody talks about the user's shoes, but I'm trying to offer another perspective). If you were in charge of Search at Google, how would you assess the Expertise, Authoritativeness, and Trustworthiness of a given website? How would you determine the characteristics of the most relevant results for a given query? Does your content have these characteristics for your target queries? Reading Google's guidelines and keeping up with what's happening in SEO and Search can definitely help.

In information retrieval theory, there are many metrics for assessing how well an algorithm performs on training data and for comparing different learning-to-rank algorithms. Open sources state that the relevance labels behind them are created in relevance scoring sessions, where judges evaluate the quality of search results. However, common sense tells us that this is hardly possible, and here's why:

That is why we have systems that can:

Recognize cats, because over the Internet's existence people have produced billions of ready-made examples of cats.

Determine how natural a text in a language is, because we have digitized many books in that language and know for sure that their language is natural.

But we cannot assess the relevance of a site to a query, because we do not have such data. Even if you simply had assessors click through results, they would not cope with the task, since relevance is not just a match between the text and the query; it depends on hundreds of other factors that a person cannot yet assess in a reasonable time.

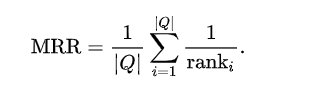

Mean reciprocal rank (MRR) is the simplest of the three metrics: it measures where the first relevant item appears. It is closely linked to the binary relevance family of metrics.

This method is simple to compute and easy to interpret; it focuses on the first relevant element of the list. It is best suited for targeted searches, such as users asking for the "best item for me," and for known-item searches such as navigational queries or looking up a fact.

The MRR metric doesn't evaluate the rest of the list of recommended items. It focuses on a single item from the list.

It gives a list with a single relevant item just as much weight as a list with many relevant items. That is acceptable if this is what the evaluation is meant to measure.

This might not be a fair evaluation metric for users who want a list of related items to browse. Their goal might be to compare multiple associated items.

Thus, for each query we take the reciprocal of the rank of the first relevant result (1/rank), sum those values, and divide by the total number of queries to get the mean reciprocal rank.

Therefore, an MRR of 1 is ideal: it means that your search engine put the right answer at the top of the result list every time.
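A minimal sketch of MRR, assuming binary relevance judgments (each result is either relevant or not) and the common convention that a query with no relevant result contributes a reciprocal rank of 0:

```python
def reciprocal_rank(relevances):
    """1 / position of the first relevant result (positions start at 1), or 0 if none."""
    for position, is_relevant in enumerate(relevances, start=1):
        if is_relevant:
            return 1.0 / position
    return 0.0


def mean_reciprocal_rank(results_per_query):
    """Average the reciprocal ranks over all queries."""
    return sum(reciprocal_rank(r) for r in results_per_query) / len(results_per_query)


queries = [
    [False, False, True],  # first relevant result at position 3 -> 1/3
    [False, True, False],  # position 2 -> 1/2
    [True, False, False],  # position 1 -> 1
]
print(round(mean_reciprocal_rank(queries), 3))  # 0.611
```

Only the first relevant hit per query matters: adding more relevant results further down a list would not change the score.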

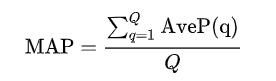

Mean average precision (MAP) is well suited to evaluating rankings when you are looking at five or more results. That makes it a good fit for evaluating related recommendations, such as on an e-commerce platform.
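Mean average precision (MAP) averages, over queries, the average precision (AP) of each ranked list, where AP is the mean of precision@k taken at every position k that holds a relevant result. The sketch below assumes binary relevance and that every relevant document for a query appears in its list; some definitions instead divide by the total number of relevant documents in the collection, which penalizes missed documents.

```python
def average_precision(relevances):
    """Mean of precision@k over the positions k that hold a relevant result.

    Assumes all relevant documents for the query appear in `relevances`;
    returns 0.0 when the list contains no relevant result.
    """
    hits = 0
    precision_sum = 0.0
    for k, is_relevant in enumerate(relevances, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / k  # precision@k at this relevant position
    return precision_sum / hits if hits else 0.0


def mean_average_precision(results_per_query):
    return sum(average_precision(r) for r in results_per_query) / len(results_per_query)


queries = [
    [True, False, True, False, False],  # AP = (1/1 + 2/3) / 2 = 5/6
    [False, True, False, False, True],  # AP = (1/2 + 2/5) / 2 = 9/20
]
print(round(mean_average_precision(queries), 3))  # 0.642
```

Unlike MRR, every relevant result contributes, so MAP rewards lists that place many relevant items near the top.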

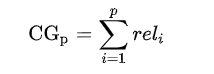

The problem with cumulative gain (CG) is that it doesn't consider the position of each result when determining the usefulness of the result set. In other words, if we change the order of the relevance scores, CG stays the same, so it cannot tell us which ordering is more useful.

For example:

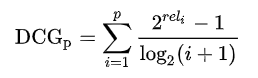
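The order-insensitivity of cumulative gain (CG) is easy to demonstrate. This sketch uses hypothetical graded relevance scores for six results and defines CG@p as the plain sum of the top-p scores:

```python
def cumulative_gain(relevances, p):
    """CG@p: plain sum of the graded relevance scores of the top-p results."""
    return sum(relevances[:p])


ranking_a = [3, 2, 3, 0, 1, 2]        # hypothetical grades, best results near the top
ranking_b = list(reversed(ranking_a))  # same results, worst-first order

print(cumulative_gain(ranking_a, 6))  # 11
print(cumulative_gain(ranking_b, 6))  # 11
```

Both orderings get the same CG, even though the first one clearly serves the user better.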

To overcome this, we introduce discounted cumulative gain (DCG). DCG penalizes highly relevant documents that appear lower in the search results by dividing each graded relevance value by a logarithm of the result's position:

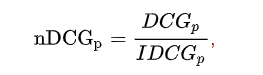
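A sketch of discounted cumulative gain (DCG), assuming the widely used form in which the relevance score at position i is divided by log2(i + 1); another common variant uses 2^rel - 1 as the gain. The grades are hypothetical. Applied to a ranking and its reverse, DCG gives different scores, which plain CG cannot do.

```python
import math


def dcg(relevances, p):
    """DCG@p = sum of rel_i / log2(i + 1) over positions i = 1..p."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(relevances[:p], start=1))


ranking_a = [3, 2, 3, 0, 1, 2]        # hypothetical grades, best results near the top
ranking_b = list(reversed(ranking_a))  # same results, worst-first order

print(round(dcg(ranking_a, 6), 3))  # 6.861
print(round(dcg(ranking_b, 6), 3))  # 5.765
```

The first position is not discounted at all (log2(2) = 1), and each later position is discounted a little more, so moving relevant documents down lowers the score.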

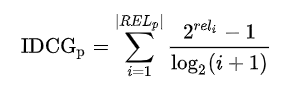

To normalize DCG, we compare it with the best possible case: we sort all the relevant documents in the corpus by their relative relevance, which gives the largest possible DCG through position p, also known as the ideal DCG (IDCG).
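The largest possible DCG through position p is usually called the ideal DCG (IDCG). Dividing DCG by IDCG gives normalized DCG (NDCG), which lies between 0 and 1 and can be compared across queries. A minimal sketch, assuming the same log2(i + 1) discount and that the grades of all relevant documents for the query are known; the extra corpus grades in the example are hypothetical.

```python
import math


def dcg(relevances, p):
    """DCG@p with the log2(i + 1) discount, positions i starting at 1."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(relevances[:p], start=1))


def ndcg(relevances, p, corpus_relevances=None):
    """NDCG@p = DCG@p / IDCG@p.

    IDCG@p is the DCG of the ideal ordering: the grades of all relevant
    documents for the query (corpus_relevances if given, otherwise just
    the retrieved ones) sorted from most to least relevant.
    """
    pool = relevances if corpus_relevances is None else corpus_relevances
    idcg = dcg(sorted(pool, reverse=True), p)
    return dcg(relevances, p) / idcg if idcg > 0 else 0.0


retrieved = [3, 2, 3, 0, 1, 2]
# Hypothetical: the corpus also holds two unretrieved documents graded 3 and 2.
corpus = retrieved + [3, 2]
print(round(ndcg(retrieved, 6, corpus), 3))  # 0.785
```

Passing the corpus grades matters: with only the retrieved grades, the ideal ordering would ignore the two relevant documents the engine missed, and the score would look better than it should.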

This means that choosing a methodology for determining which instrument search engines actually use is closer to fortune-telling, since we cannot verify anything here.

Yes, you can try to rely on patents or on statements published by this or that official. But 90% of all patents are rubbish that has nothing to do with programming. And such statements are only part of the puzzle, made worse by the fact that these people are bound by NDAs so strict that even the remarks that supposedly slip out by accident are spelled out in their contracts.

All we can do is analyze and combine data from various sources, which results in a document describing the theoretical foundations of how search quality can be assessed.

Good luck and high positions to everyone!
