Market Research With Python Scripts: Comprehensive Workflow Description

Today, we’ll guide you through performing market research in specific industries, focusing on gathering keywords, filtering them by intent, and obtaining search volume data.

You’ll also learn how to update search engine results page (SERP) and search volume data, merge the results, and apply click-through rates (CTR) to identify top-performing websites.

The following stages will include setting up crawling tasks, extracting data, and merging results for analysis.

Goal

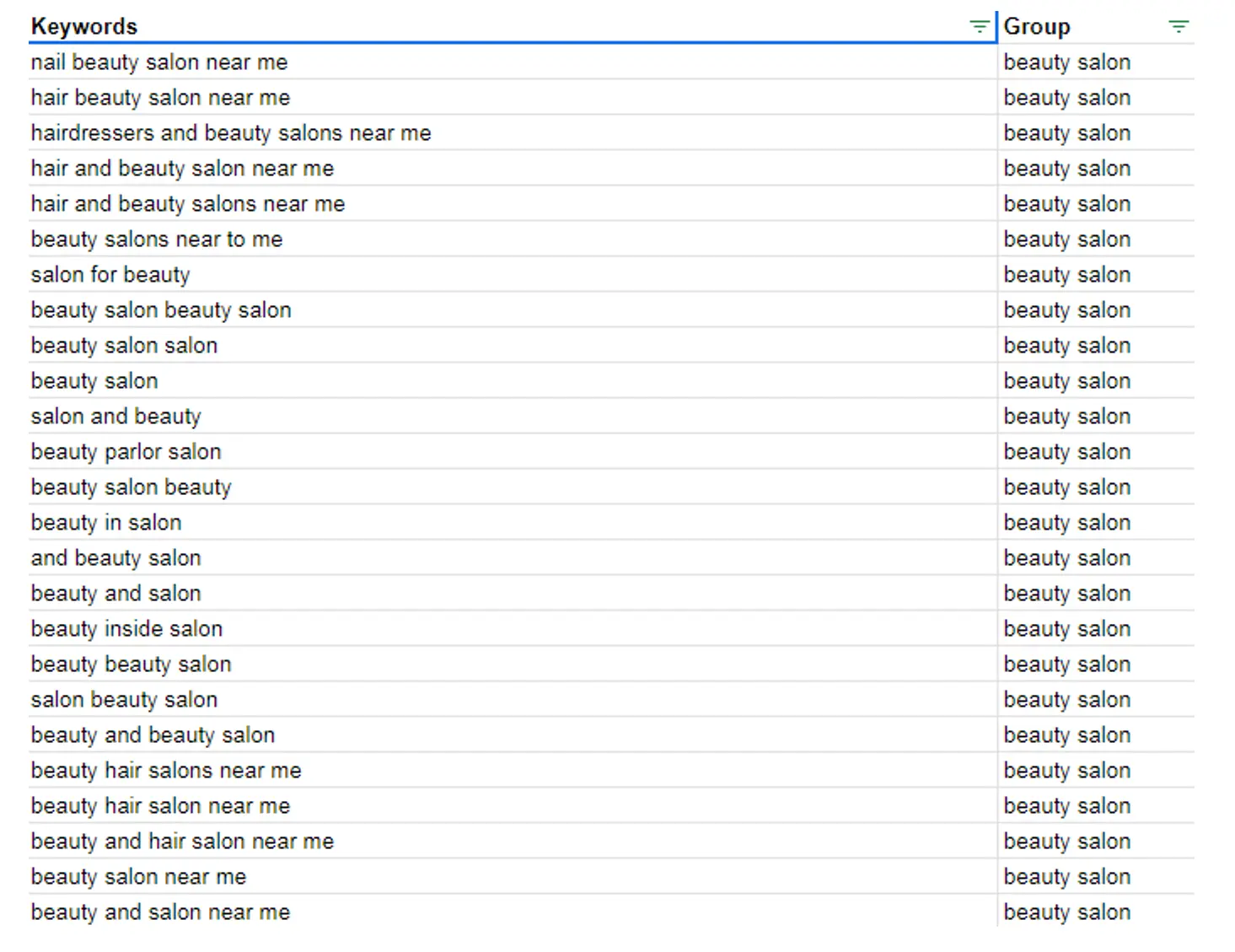

Our goal is to research a specific industry and identify the market players that operate in it within any given area. The starting point is to gather all the keywords related to the industry we're looking at. We can also break them down by specific types of services, mark them up by intent, and so on.

You can view the entire sheet at the following link in the “Keyword data (initial input)” section.

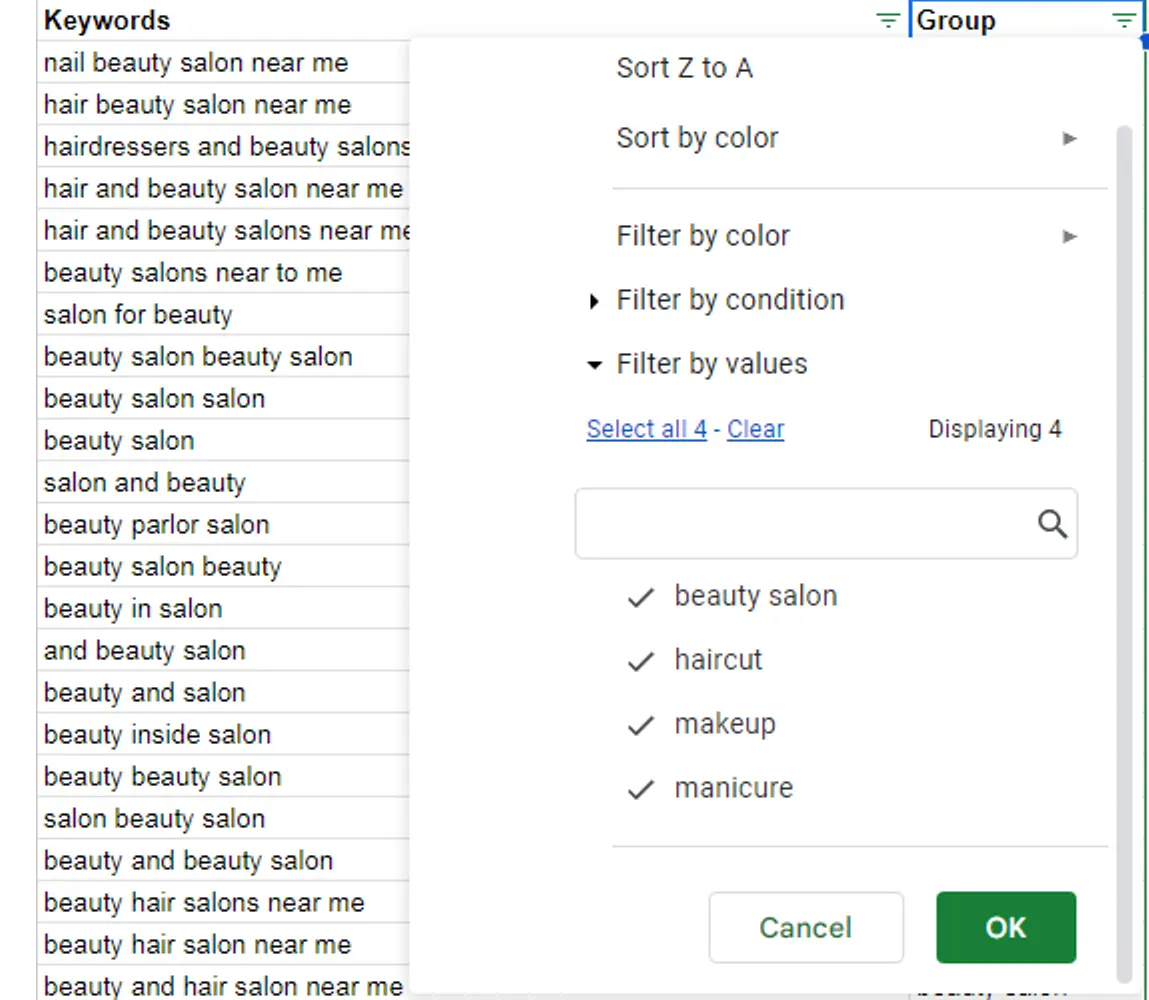

This markup lets us filter the keywords later on. In my case, I have four groups of keywords related to:

- beauty industry in general;

- haircuts;

- makeup;

- manicures.
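To make the markup idea concrete, here is a minimal sketch of how tagged keywords could be stored and filtered. The sample keywords and the `service` and `intent` field names are my own assumptions for illustration; they are not taken from the sheet.

```python
from collections import defaultdict

# Hypothetical sample rows: each keyword carries a service tag and an intent tag.
KEYWORDS = [
    {"keyword": "beauty salon near me", "service": "general", "intent": "local"},
    {"keyword": "mens haircut", "service": "haircuts", "intent": "commercial"},
    {"keyword": "bridal makeup artist", "service": "makeup", "intent": "commercial"},
    {"keyword": "gel manicure", "service": "manicures", "intent": "commercial"},
    {"keyword": "how to cut your own hair", "service": "haircuts", "intent": "informational"},
]


def group_by_tag(rows, tag):
    """Group keyword strings by the value of the given tag column."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[tag]].append(row["keyword"])
    return dict(groups)


def filter_by_tags(rows, **tags):
    """Keep only rows whose tag columns match every given value."""
    return [row for row in rows if all(row.get(k) == v for k, v in tags.items())]


by_service = group_by_tag(KEYWORDS, "service")
commercial_haircuts = filter_by_tags(KEYWORDS, service="haircuts", intent="commercial")
```

Keeping the tags on every row means the same filters can be reused on the merged results in the later steps.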

The keywords can come from any source. You can perform regular keyword research or generate them through AI. It doesn't make much difference. In this case, we want to start with the most general, common set of keywords.

Implementation

To make the process easier to follow, I divided it into six key steps. Each one builds on the previous one. Let’s see how it works.

Step 1: Setting Up SERP Crawling Task and Obtaining JSON File With SERPs

Our first step is to update the SERP results for the region we are interested in. We use a simple script that sets up the SERP crawling task based on our region ID.

You can find the sample code used in this step in the document at this link in the “1. Obtaining JSON File with SERPs” section.

Then, it splits the results into batches and extracts all SERP results into separate JSON files. After that, it merges all the batches into a single JSON file with all the SERP results.
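The batching and merging part can be sketched roughly as follows. This is not the script from the linked document: it leaves out the crawling task itself and only shows the file handling, and the function names, the `serp_batch` file prefix, and the assumption that each batch file holds a JSON list are all mine.

```python
import json
from pathlib import Path


def split_into_batches(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def save_batches(results, out_dir, batch_size, prefix="serp_batch"):
    """Write each batch of results to its own JSON file and return the paths."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, batch in enumerate(split_into_batches(results, batch_size), start=1):
        path = out_dir / f"{prefix}_{index:03d}.json"
        path.write_text(json.dumps(batch, ensure_ascii=False), encoding="utf-8")
        paths.append(path)
    return paths


def merge_batches(paths, merged_path):
    """Concatenate the lists stored in the batch files into one JSON file."""
    merged = []
    for path in sorted(paths):
        merged.extend(json.loads(Path(path).read_text(encoding="utf-8")))
    Path(merged_path).write_text(json.dumps(merged, ensure_ascii=False), encoding="utf-8")
    return merged
```

Zero-padded batch numbers keep the files in order when sorted by name, so the merged file preserves the original order of the results.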

Step 2: Obtaining JSON With the Search Volume Data

The next step is similar, but this time we obtain the search volume data for the same location. We use the same region ID and pass the same set of keywords to set up the search volume crawling task.

You can find the sample code used in this step in the document at this link in the “2. Obtaining JSON with the Search Volume data” section.

Then, we obtain batches with all of the keyword data and merge them into a single JSON file with the keyword data.
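Once the keyword batches are loaded, it helps to flatten them into a lookup keyed by keyword. The sketch below assumes each row is a dict with `keyword` and `search_volume` fields; those names are hypothetical, and the real response format may differ.

```python
def build_volume_lookup(batches):
    """Flatten batches of keyword rows into {keyword: search_volume}."""
    lookup = {}
    for batch in batches:
        for row in batch:
            # Normalize so the same keyword matches across batches.
            keyword = row["keyword"].strip().lower()
            # Treat a missing or empty volume as 0.
            volume = row.get("search_volume") or 0
            # If a keyword appears more than once, keep the larger value.
            lookup[keyword] = max(lookup.get(keyword, 0), volume)
    return lookup
```

Keeping the larger value for duplicates is just one possible choice; you could equally keep the most recent one.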

Step 3: Merging SERP and Search Volume Results

Our next step is to merge the SERP and search volume results and apply CTRs to the organic top 10 positions.

You can find the sample code used in this step in the document at this link in the “3. Merging the SERP and Search Volume data for traffic estimation through CTR” section.
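In simplified form, the traffic estimation could look like the sketch below: each keyword's search volume is multiplied by the CTR of the position a domain holds, and the results are summed per domain. The CTR values are illustrative placeholders rather than measured figures, and the `keyword`, `domain`, and `position` field names are assumptions.

```python
from collections import defaultdict

# Illustrative placeholder CTRs for organic positions 1-10 (not measured values).
CTR_BY_POSITION = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05,
                   6: 0.04, 7: 0.03, 8: 0.02, 9: 0.02, 10: 0.01}


def estimate_market_share(serp_rows, volumes, ctr=CTR_BY_POSITION):
    """Return per-domain estimated traffic and traffic share, highest first."""
    traffic = defaultdict(float)
    for row in serp_rows:
        rate = ctr.get(row["position"])
        if rate is None:  # skip positions outside the top 10
            continue
        traffic[row["domain"]] += volumes.get(row["keyword"], 0) * rate
    total = sum(traffic.values())
    report = [
        {"domain": domain, "traffic": value, "share": value / total if total else 0.0}
        for domain, value in traffic.items()
    ]
    return sorted(report, key=lambda item: item["traffic"], reverse=True)
```

The share is computed against the total estimated traffic of the collected keyword set only, so it describes the market as defined by those keywords.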

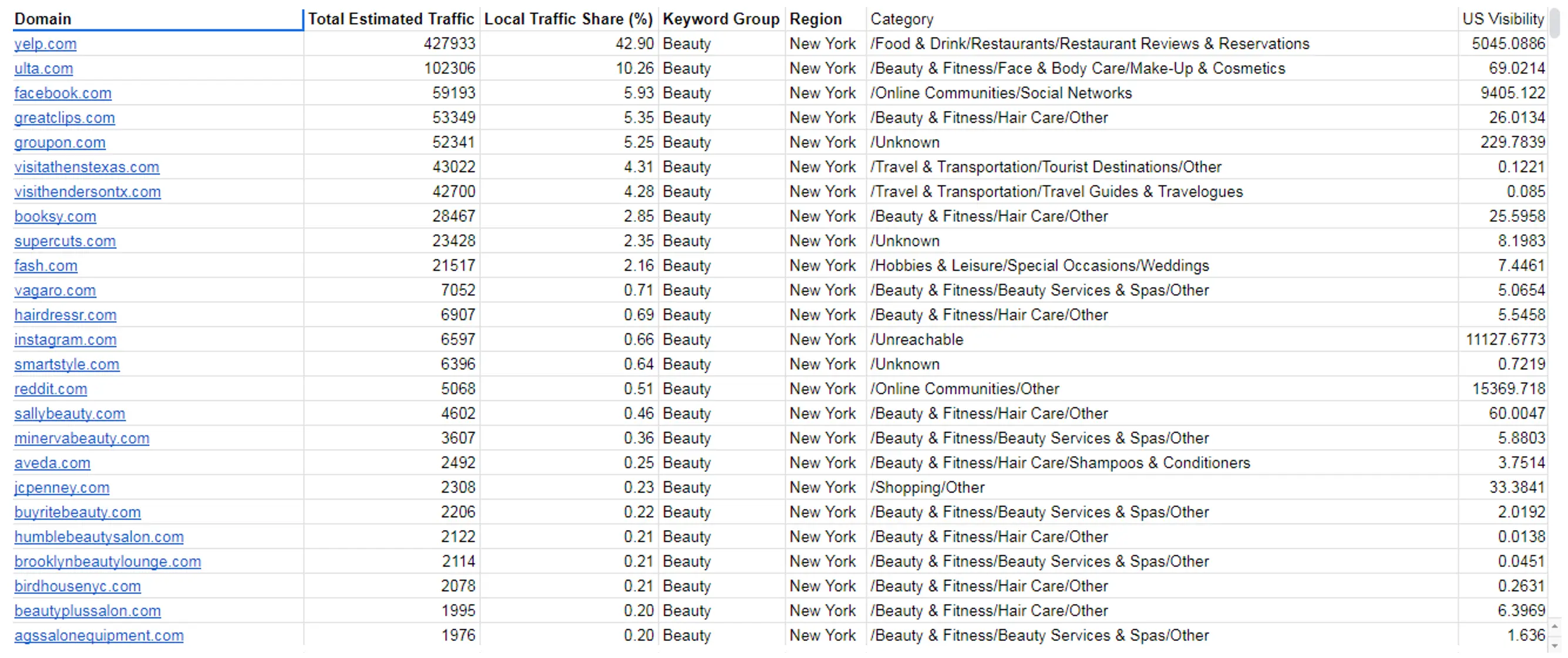

As a result, we get the websites that perform best and hold the highest traffic share within the industry, because they rank for the keywords we have picked. For each website, we have the estimated organic traffic and the traffic share. That's the way it looks:

You can view the entire sheet at the following link in the “Market share” section.

We have tons of websites in here. I can filter by keyword tags or regions that we have set up crawling for. In my case, I use New York and Los Angeles to show the difference.

Step 4: Filtering and Analyzing Websites

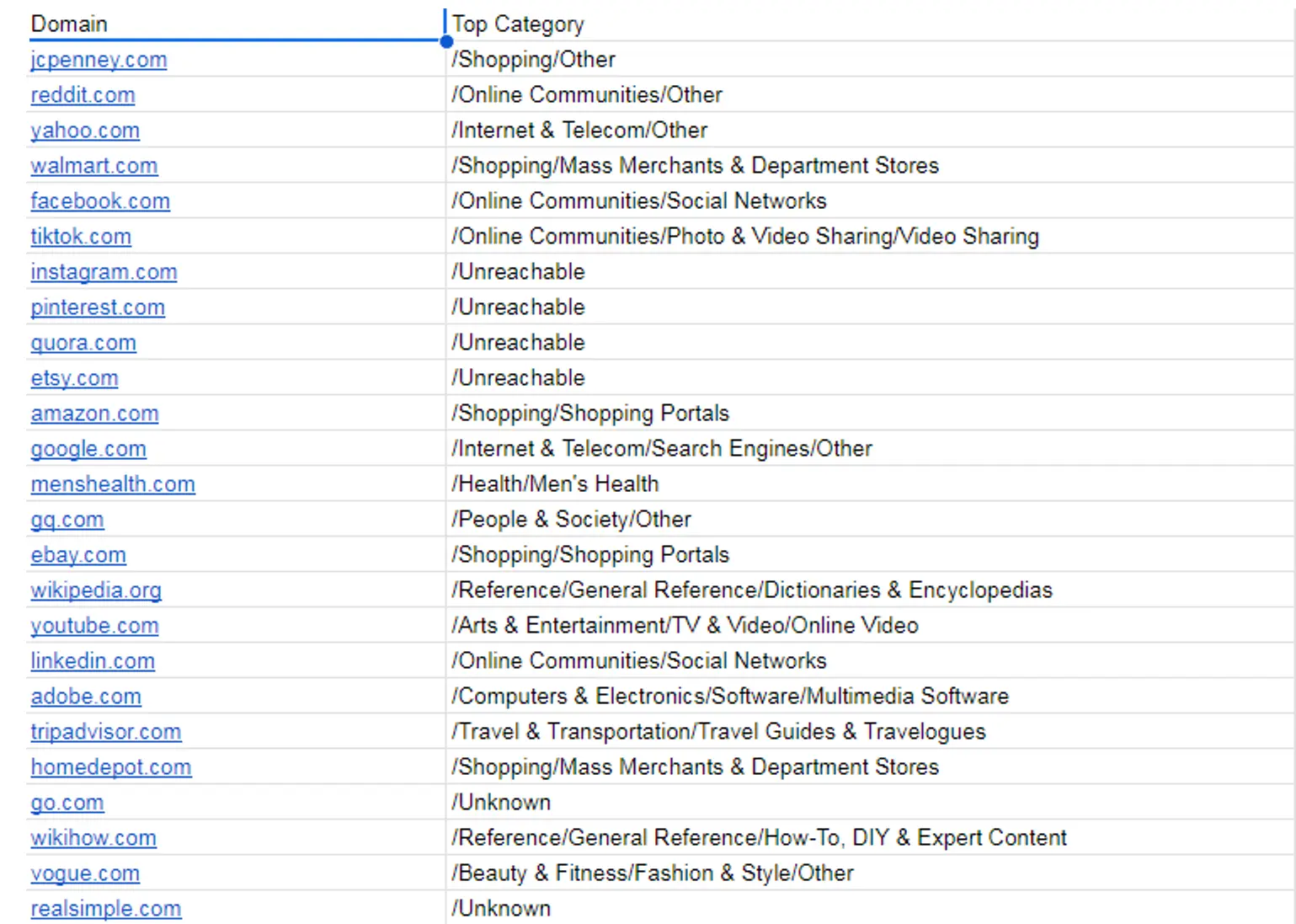

The next step is to identify the industry to which each website belongs. We set up a task similar to the search volume crawling, but this time we pass all of the obtained domains to crawl the websites and identify the industry they belong to.

You can find the sample code used in this step in the document at this link in the “4. Classifying obtained websites” section.

As a result, we get the categories to which each website belongs. By category, I mean the industry in which it operates.

You can view the entire sheet at the following link in the “Domain Category” section.

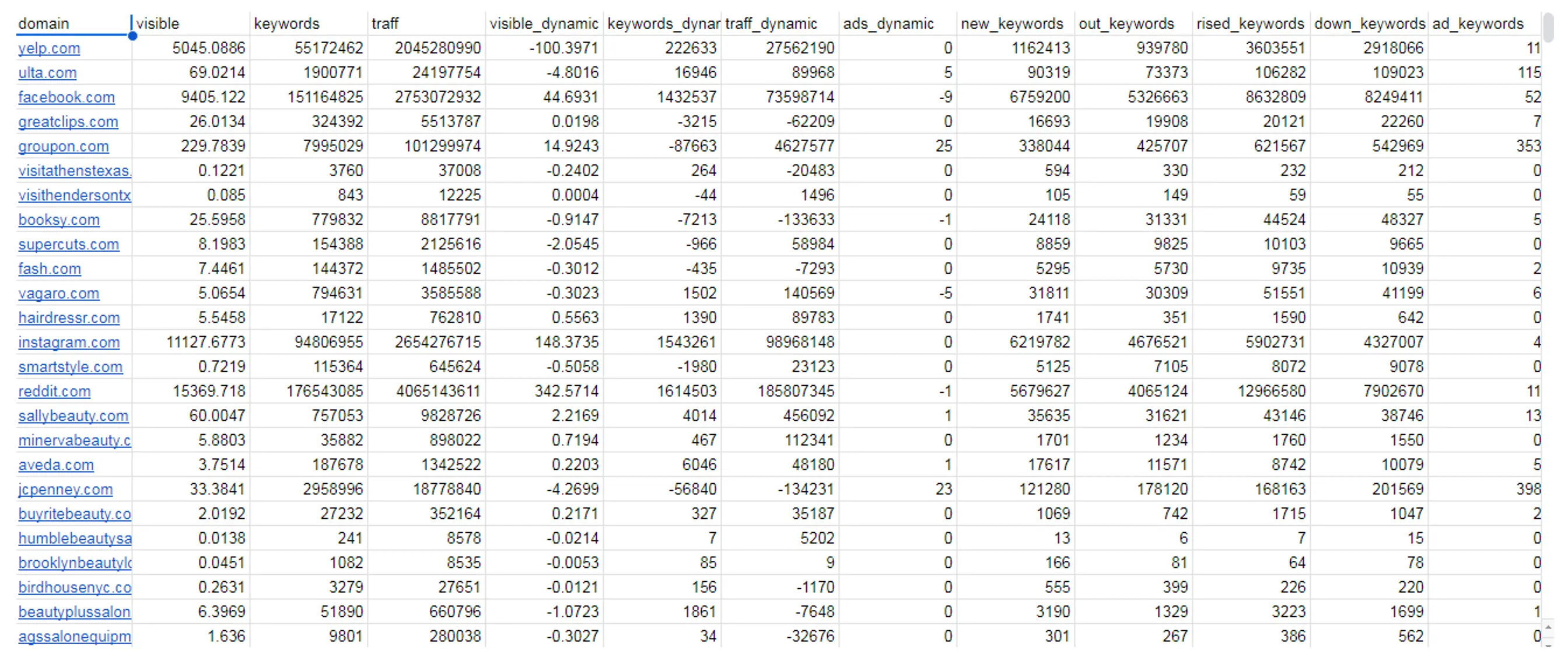

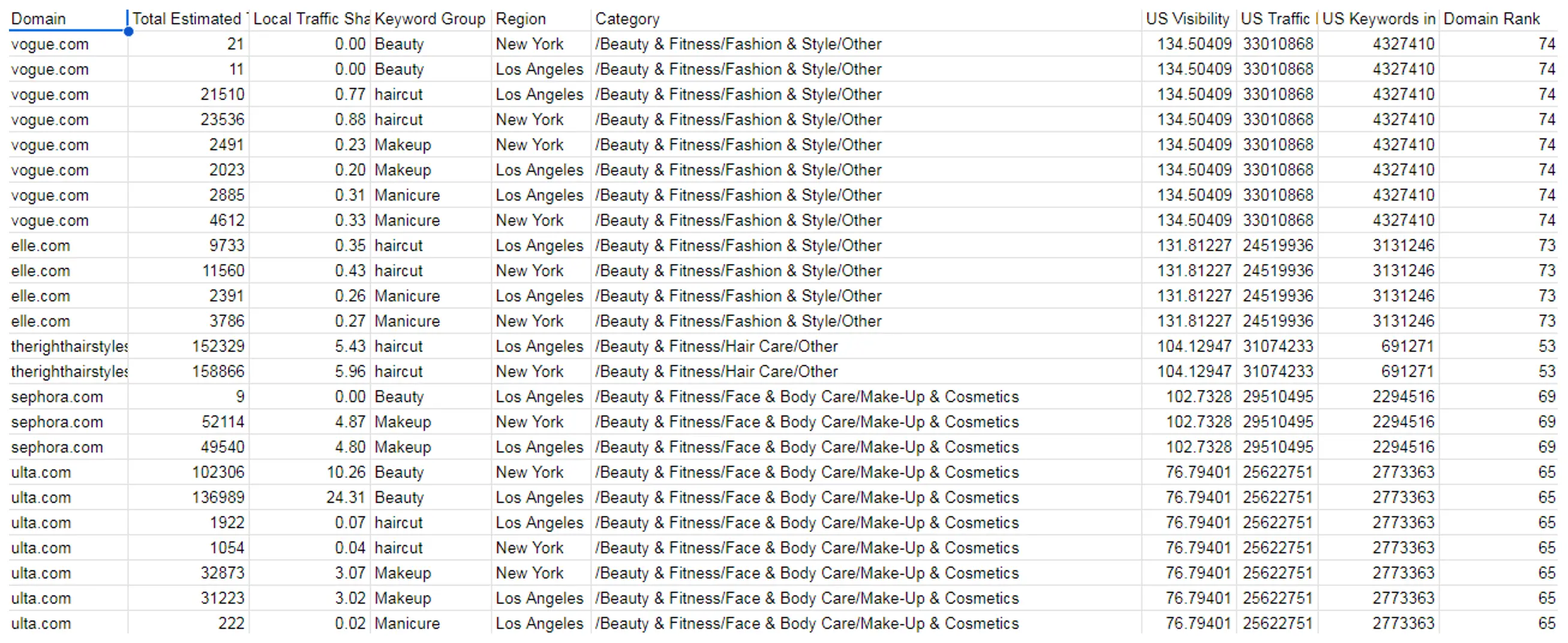

Step 5: Obtaining Ranking Metrics

Our next step is to get basic keyword metrics for these domains. We pass all of the websites related to the industry to obtain ranking metrics such as visibility, estimation of organic traffic, and number of keywords.

You can find the sample code used in this step in the document at this link in the “5. Obtaining Ranking metrics” section.

The results are shown below:

You can view the entire sheet at the following link in the “Domain Keyword Metrics” section.

Step 6: Obtaining Backlinks Metrics

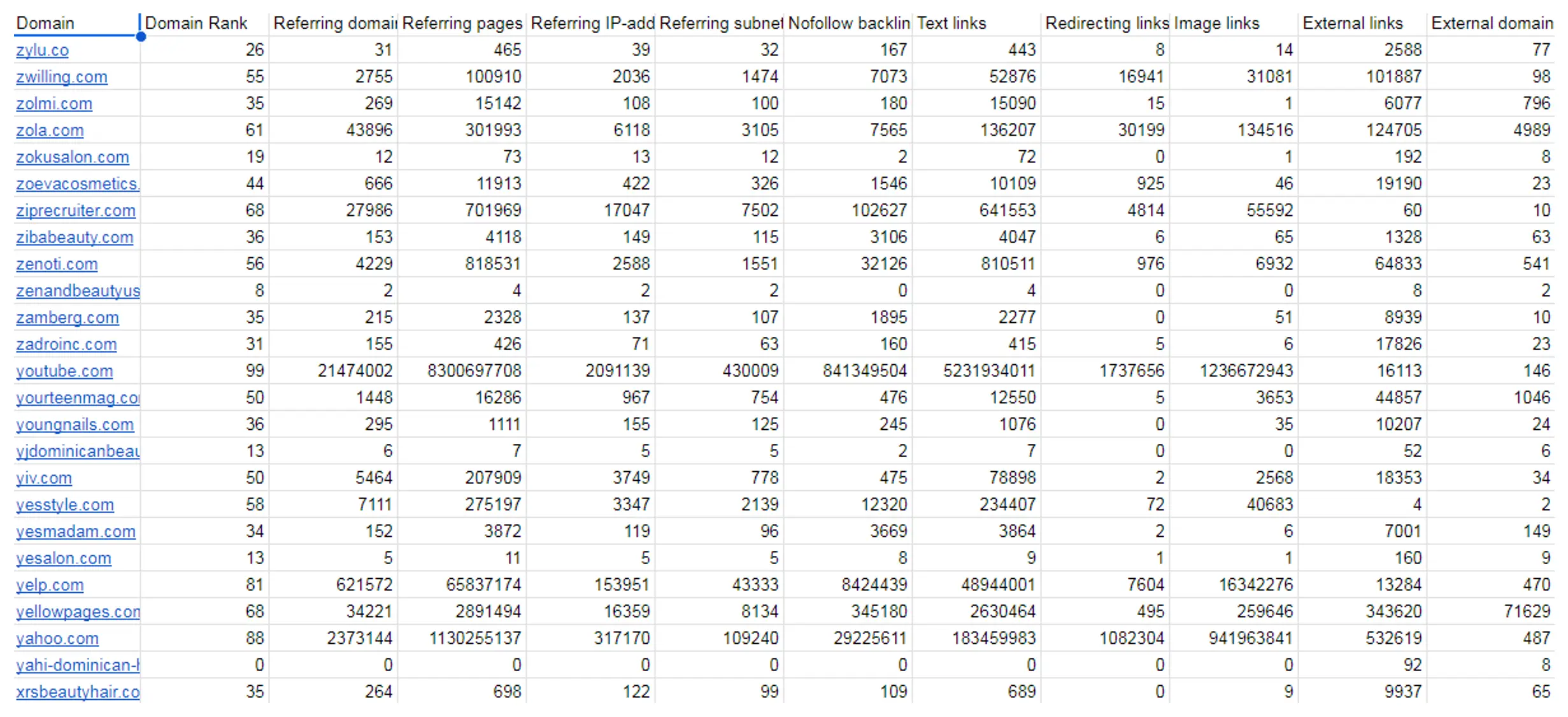

Now, we do the same, passing the same set of domains to obtain the backlink metrics, which include domain rank, number of referring domains, and number of referring pages.

You can find the sample code used in this step in the document at this link in the “6. Obtaining Backlinks metrics” section.

The results are shown below:

You can view the entire sheet at the following link in the “Domain Backlinks Metrics” section.

Results

After we merge these results, we can see all of the websites within a specific area, along with the type of service each one relates to and the industry to which it belongs.

You can view the entire sheet at the following link in the “Final” section.
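The merge itself is a join on the domain name. Here is a minimal sketch, assuming every intermediate result is a list of dicts with a `domain` key; the other field names in the usage example are hypothetical.

```python
def merge_by_domain(*tables):
    """Left-join tables (lists of dicts with a 'domain' key) onto the first one."""
    base, *others = tables
    merged = {row["domain"]: dict(row) for row in base}
    for table in others:
        for row in table:
            # Only enrich domains present in the base table; ignore the rest.
            if row["domain"] in merged:
                merged[row["domain"]].update(row)
    return list(merged.values())


market = [{"domain": "a.com", "traffic": 300.0}, {"domain": "b.com", "traffic": 750.0}]
categories = [{"domain": "a.com", "category": "Beauty & Fitness"},
              {"domain": "x.com", "category": "News"}]
backlinks = [{"domain": "b.com", "referring_domains": 42}]
final = merge_by_domain(market, categories, backlinks)
```

Using the market share table as the base keeps only the domains that actually rank for the collected keywords.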

We filter out all irrelevant categories, leaving only beauty and fitness. We can also filter by type of service and location, and we have all the metrics about keyword performance and backlink performance.

You can view the entire sheet at the following link in the “Final with Categories filtered” section.
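The same filtering can also be done in code rather than in the sheet. The sketch below assumes the merged rows carry `category`, `service`, and `region` fields; these names and the exact category label are assumptions, so adjust them to whatever your classification step returns.

```python
def filter_final(rows, categories, service=None, region=None):
    """Keep rows in the allowed categories, optionally narrowed by service and region."""
    allowed = {name.lower() for name in categories}
    kept = []
    for row in rows:
        if str(row.get("category", "")).lower() not in allowed:
            continue
        if service is not None and row.get("service") != service:
            continue
        if region is not None and row.get("region") != region:
            continue
        kept.append(row)
    return kept


rows = [
    {"domain": "a.com", "category": "Beauty & Fitness", "service": "makeup", "region": "New York"},
    {"domain": "b.com", "category": "News", "service": "makeup", "region": "New York"},
    {"domain": "c.com", "category": "Beauty & Fitness", "service": "haircuts", "region": "Los Angeles"},
]
relevant = filter_final(rows, ["Beauty & Fitness"])
ny_makeup = filter_final(rows, ["Beauty & Fitness"], service="makeup", region="New York")
```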

Conclusion

This walkthrough demonstrates a comprehensive approach to market research within specific industries.

We build a detailed understanding of market players and their performance by:

- keyword gathering and filtering;

- SERP and search volume analysis;

- domain classification, ranking, and backlink metrics.

The final result enables precise filtering by industry, service type, and location, providing actionable insights to drive informed decisions and strategic planning.

FAQ

The methodology is flexible, and you can adapt it to any industry by changing the initial keyword set and tailoring the crawling and filtering processes to suit specific needs.

The process supports regional customization by setting up region-specific SERP crawling and keyword analysis.

Traffic estimation is done by applying click-through rates (CTR) to the top 10 organic positions in the merged SERP and search volume results, highlighting the websites with the highest traffic share.
