What You Need to Know About the Google BERT Update

What does BERT mean?

For example, consider the following two sentences:

Sentence 1: What is your date of birth?

Sentence 2: I went out for a date with John.

The word 'date' means something different in each of these two sentences.

Contextual models generate a representation of each word based on the other words in the sentence. A unidirectional contextual model would represent 'date' in Sentence 1 based on 'What is your' but not 'of birth'. BERT, however, represents 'date' using both its previous and next context: 'What is your ... of birth?'
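To make the difference concrete, here is a minimal Python sketch of which words each kind of model can "see" around 'date' in Sentence 1. It is only an illustration of the context window, not how BERT actually computes its representations; the function names `left_context` and `full_context` are made up for this example.

```python
def left_context(tokens, index):
    """Words a left-to-right (unidirectional) model can use for tokens[index]."""
    return tokens[:index]


def full_context(tokens, index):
    """Words a bidirectional model can use: everything before and after."""
    return tokens[:index], tokens[index + 1:]


# Sentence 1 from the example above, split into words by hand.
tokens = ["What", "is", "your", "date", "of", "birth", "?"]
target = tokens.index("date")

print(left_context(tokens, target))
# ['What', 'is', 'your']

print(full_context(tokens, target))
# (['What', 'is', 'your'], ['of', 'birth', '?'])
```

The left-to-right view never reaches 'of birth', which is exactly the part of the sentence that tells a reader 'date' refers to a calendar date rather than a meeting.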

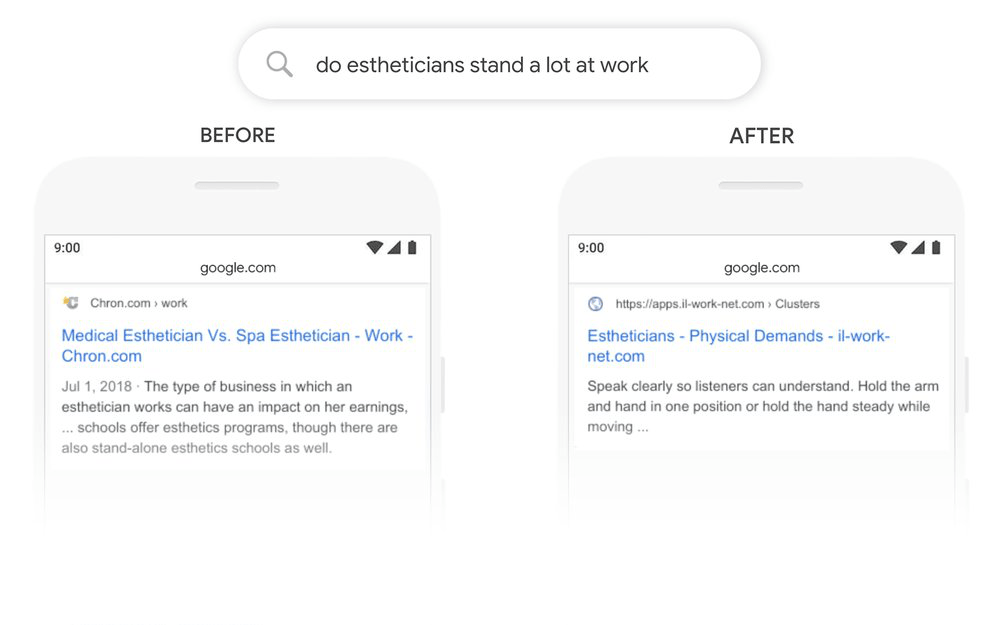

Google has shared several examples of how this update changes search results. One such example is the query "do estheticians stand a lot at work."

How will BERT affect SEO?

BERT has the following effects:

How to adapt to the BERT update?

Here are some ways to handle the BERT update:

For example, a user who searches for "home remedies for dandruff" expects a web page that shares tips and home remedies for dandruff, not a website that sells shampoo or medicines for dandruff.

A product can still be advertised, but not at the expense of the accuracy of the search result. Format and arrange the content so that the main focus is on home remedies.
