Using Scripts To Scrape SERPs For Different Results

You're in the middle of a project for yourself or a client. To successfully hit your deadline and meet your targets, you need access to detailed information that is housed deep in the underbelly of the search engine results page. But there's a problem…

For whatever reason (likely a draconian line of code in Google's latest update) you are unable to access or export the information you need.

Uh oh.

If you're a seasoned SEO or webmaster, I can all but guarantee that this scenario has happened to you at least once.

And today, I'm going to show you how you can use Python to scrape the SERPs (or your website) for different results so that you can get the data you need… in 15 minutes or less.

Sound like a plan? Then let's get to it.

Why Scrape Using Python?

With the proliferation of black hat SEOs attempting to game the algorithm and pull one over on the system, big search engines like Google don't really have any other choice but to restrict access to their data. But that doesn't make the state of search engine metrics in 2018 any easier to live with.

If you want to uncover key metrics behind your website's SEO performance (that aren't available through analytics), scan for security weaknesses, or gain competitive intelligence... the data is there for the taking. But you have to know how to get it.

Sure, you could drop $297/month on an expensive piece of software (that will become entirely obsolete within the next 12 months) or you could just use the strategy I'm about to share with you to find all of the data you need in a matter of minutes.

To do this, we'll be using the Python programming language to scrape Google's SERPs so that you can quickly and easily gain access to the information you need without the hassle.

Let me show you how.

Basic Requirements

Specifically, you'll need:

- Python, with the following libraries:
  - Splinter
  - Pandas

- Google Chrome (duh!)

- Chromedriver

For those of you who are lazy, er… prefer to work smart, I recommend that you simply uninstall your existing Python distribution and download the one from Anaconda. It comes prepackaged with the Pandas library (among many others), making it an incredibly versatile and robust distribution package.

If you're happy with your existing Python distribution, then simply plug the following lines of code into your terminal.

To get Pandas:

pip install pandas

To get Splinter:

pip install splinter

To get Splinter with Anaconda:

conda install splinter
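Before moving on, it can be worth confirming that both libraries actually landed in the Python environment you plan to use. Here's a minimal sketch that relies only on the standard library. The helper name `missing_modules` is made up for this example and is not part of Pandas or Splinter.

```python
from importlib.util import find_spec

# Import names for the libraries this walkthrough relies on.
REQUIRED_MODULES = ("pandas", "splinter")


def missing_modules(names=REQUIRED_MODULES):
    """Return the names from `names` that cannot be found for import."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Still need to install:", ", ".join(missing))
    else:
        print("All set: pandas and splinter are importable.")
```

If anything shows up in the "still need to install" list, rerun the matching install command above in the same environment.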

Step #1: Set Up Your Libraries and Browser

First, import the libraries you'll need and open a Chrome window. For example:

from splinter import Browser

import pandas as pd

# open a browser

browser = Browser('chrome')

# Width, Height

browser.driver.set_window_size(640, 480)

# load the Google homepage so there is a page to explore

browser.visit('https://www.google.com')

Step #2: Exploring the Website

Luckily, this process is pretty straightforward.

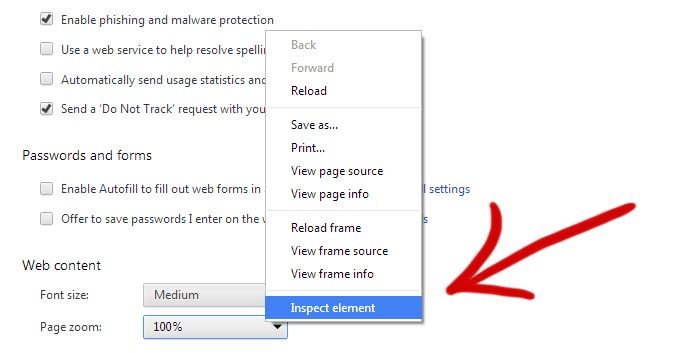

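All you're going to do is:

Open the page in Chrome, right click on the element you want to control (in this case, the search bar), and select "Inspect" to highlight its HTML in the developer tools.

Next, you're going to right click on the HTML element and select "Copy" > "Copy XPath".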

And boom! You're ready to go.
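If XPath is new to you, it may help to see what a copied expression actually selects. The sketch below runs the search bar's XPath against a tiny stand-in page using Python's built-in `xml.etree.ElementTree`, which supports a limited subset of XPath. The sample markup and the `find_all` helper are assumptions made for illustration, not Google's real page or part of Splinter.

```python
import xml.etree.ElementTree as ET

# A tiny, well-formed stand-in for a search page (not Google's real markup).
SAMPLE_PAGE = """
<html>
  <body>
    <form id="tsf">
      <input id="lst-ib" name="q" type="text" />
      <input name="btnK" type="submit" value="Search" />
    </form>
  </body>
</html>
"""


def find_all(root, xpath):
    """Run a copied XPath against an ElementTree root.

    ElementTree only accepts relative paths, so a leading "//" is
    rewritten to ".//" before searching.
    """
    if xpath.startswith("//"):
        xpath = "." + xpath
    return root.findall(xpath)


root = ET.fromstring(SAMPLE_PAGE)
matches = find_all(root, '//*[@id="lst-ib"]')

# Show which element(s) the expression picked out.
for element in matches:
    print(element.tag, element.attrib)
```

The expression reads as "any element, anywhere in the page, whose id is lst-ib", which is why it lands on the single search input and nothing else.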

Step #3: Control the Website

With the XPath copied, you can hand it to Splinter's find_by_xpath() method, which returns a list of matching elements. Next, we'll want to gain control of the individual HTML element by using the following lines of code:

search_bar_xpath = '//*[@id="lst-ib"]'

# index 0 to select from the list

search_bar = browser.find_by_xpath(search_bar_xpath)[0]

search_bar.fill("serpstat.com")

# Now let's set up code to click the search button!

search_button_xpath = '//*[@id="tsf"]/div[2]/div[3]/center/input[1]'

search_button = browser.find_by_xpath(search_button_xpath)[0]

search_button.click()

Step #4: Sit Back and Watch the Magic Happen (Scrape Time!)

Notice how each search result is contained within an h3 title tag with the class "r". It's also important to remember that the title and the link we want are both stored within an a tag inside that h3.

Ok, so the XPath of the highlighted tag is //*[@id="rso"]/div/div/div[1]/div/div/h3/a.

But it's only the first link. And much like the iconic rock band Queen, we want it all.

To get all of the links from the SERPs, we're going to shift things around a bit to ensure that our find_by_xpath code returns all of the results from the page.

Here's how:

search_results_xpath = '//h3[@class="r"]/a'

search_results = browser.find_by_xpath(search_results_xpath)

Now, it's time to sit back and let the magic happen.
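To see why the shorter XPath returns every result while the copied one returns only the first, here's a rough sketch that runs both styles against a simplified stand-in for a results page. It uses the standard library's `xml.etree.ElementTree` rather than Splinter, and the sample markup and example.com URLs are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Simplified stand-in for a results page; real SERP markup is messier
# and changes over time.
SAMPLE_RESULTS = """
<div id="rso">
  <div><h3 class="r"><a href="https://example.com/one">First result</a></h3></div>
  <div><h3 class="r"><a href="https://example.com/two">Second result</a></h3></div>
  <div><h3 class="other"><a href="https://example.com/misc">Not a result</a></h3></div>
</div>
"""

root = ET.fromstring(SAMPLE_RESULTS)

# A positional path, like the one Chrome copies, pins down one element.
first_only = root.findall("./div[1]/h3/a")

# Same shape as '//h3[@class="r"]/a', written as a relative path
# because ElementTree does not accept absolute XPaths.
anchors = root.findall('.//h3[@class="r"]/a')

scraped = [(a.text, a.get("href")) for a in anchors]
for title, link in scraped:
    print(title, "->", link)
```

Dropping the position index and matching on the class is what turns "the first link" into "every link that looks like a result".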

To extract the title and link for each search result, all you need to do is run the following lines of code in your Python terminal.

scraped_data = []

for search_result in search_results:
    title = search_result.text  # already a str in Python 3; no .encode('utf8') needed
    link = search_result["href"]
    scraped_data.append((title, link))

To export all of the data to CSV, you can simply use a Pandas DataFrame with the following 2 lines of code:

df = pd.DataFrame(data=scraped_data, columns=["Title", "Link"])

df.to_csv("links.csv")

Pretty cool, huh?

And that, ladies and gentlemen, is how you scrape the Google SERPs using Python.
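If you'd rather not lean on Pandas for the export step, roughly the same file can be produced with Python's built-in `csv` module. This is an alternative sketch, not the method used above: the `write_links_csv` helper and the sample rows are hypothetical, and unlike the DataFrame export it does not write an index column.

```python
import csv
import io

# Hypothetical rows in the same (title, link) shape as scraped_data.
sample_rows = [
    ("First result", "https://example.com/one"),
    ("Second, with a comma", "https://example.com/two"),
]


def write_links_csv(rows, handle):
    """Write a Title/Link header followed by one line per row."""
    writer = csv.writer(handle)
    writer.writerow(["Title", "Link"])
    writer.writerows(rows)


# Write to an in-memory buffer so the output can be inspected directly.
buffer = io.StringIO()
write_links_csv(sample_rows, buffer)
print(buffer.getvalue())
```

To write a real file instead, open one with `open("links.csv", "w", newline="", encoding="utf-8")` and pass that handle along with your scraped_data list. The csv module takes care of quoting titles that contain commas.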

Final Thoughts

By following the simple framework I outlined today, you can quickly and easily gain access to just about any information you need with a few lines of code and the click of a button (or mouse as it were).

So go out and scrape away!

Did you find this article helpful? Do you have any tips or tricks for improving your website scraping abilities? Comments, questions, concerns? Feel free to drop us a line below and let us know!
