How To Get 34% Increase In Traffic By Optimizing Your Existing Content

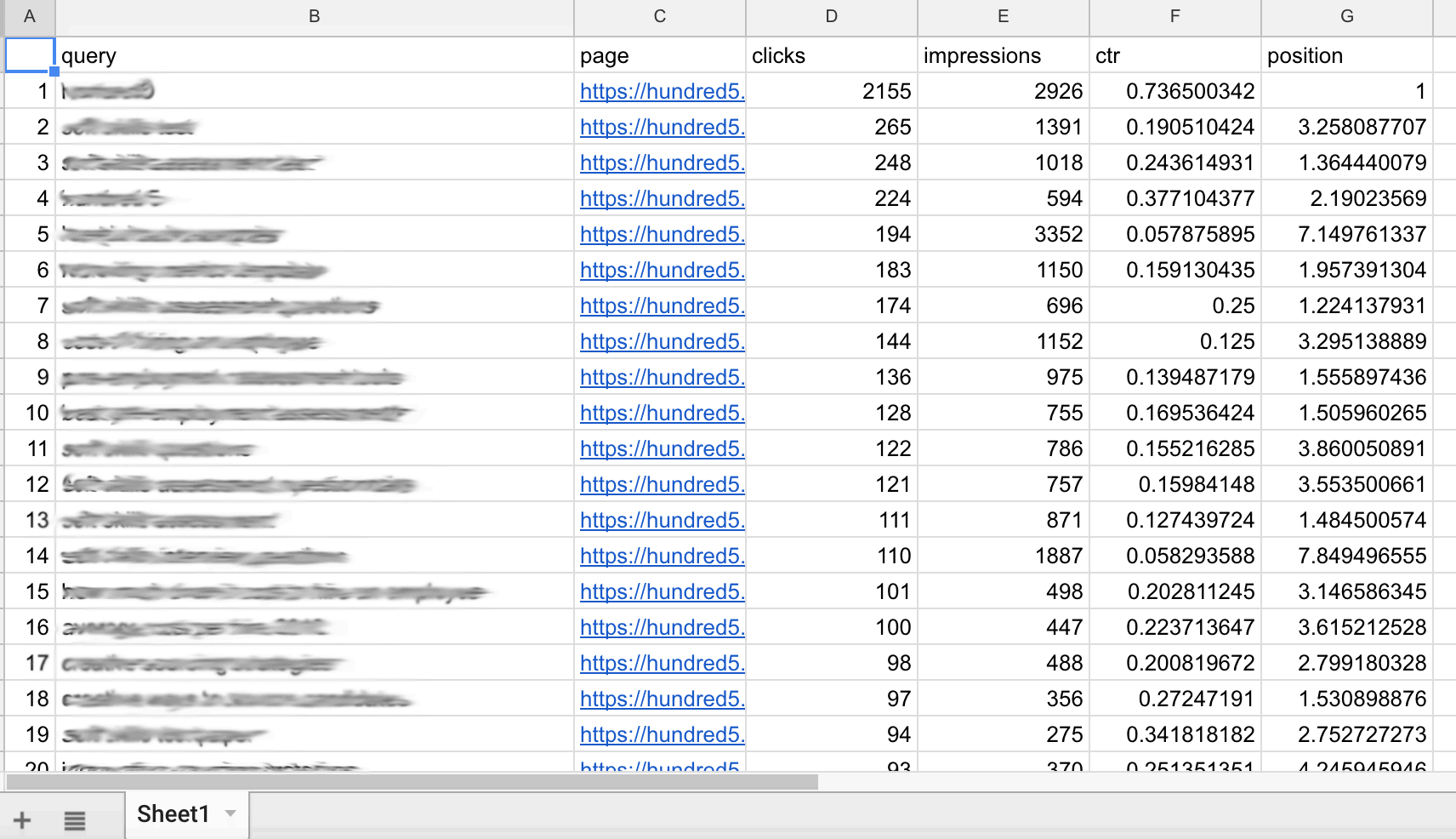

Its API comes in handy in these situations because you can pull all the data and use it elsewhere. In addition, there is no limit to what you can pull, and you can export queries together with the page that ranks for each one and the related metrics. Not only is this crucial for success in the SERPs, it is also a good solution to common problems such as "(not provided)" keywords in Google Analytics.

Why should you go back to the old content

Not all tactics are intuitive, either. Sometimes, when you look back, you can see that the majority of your existing content doesn't drive any traffic. To reduce the number of pages that link juice gets spread across, you might choose to delete that content and, counterintuitively, increase organic traffic.

However, we won't go that far here. This approach is all about getting on top of your existing content: which keywords you are ranking for, and which keywords you could rank for with a minimum of targeted effort.

Getting the data from Google Search Console

I started doing this process with R because the add-on didn't work for quite a long time due to changes in the GSC API. Before writing this, I checked again and, surprisingly enough, it worked. However, it often has authorization issues, for which I haven't found a solution other than using something else to pull the data. Therefore, this guide covers both ways.

Pulling the data from GSC using R

To use the searchConsoleR package, you'll need a Client ID and Secret, which you can obtain in the Google API Console.

```r
install.packages('googleAuthR')
install.packages('searchConsoleR')

library(googleAuthR)
library(searchConsoleR)

options("searchConsoleR.client_id" = "ADD_YOUR_CLIENT_ID")
options("searchConsoleR.client_secret" = "ADD_YOUR_CLIENT_SECRET")

scr_auth()

# Check the list of websites in GSC (best to copy URL for the query)
sc_websites <- list_websites()
sc_websites

# Pull the data - you can edit the date range
gsc_data <- search_analytics("https://hundred5.com/",
                             "2018-06-14", "2018-09-14",
                             c("query", "page"))

# Create a CSV file based on the query
write.csv(gsc_data, file = "gsc_data_export.csv")

# Find the location of the file
getwd()
```

After you have done this, import the CSV file or paste the data into Google Sheets, then continue reading from the next section.

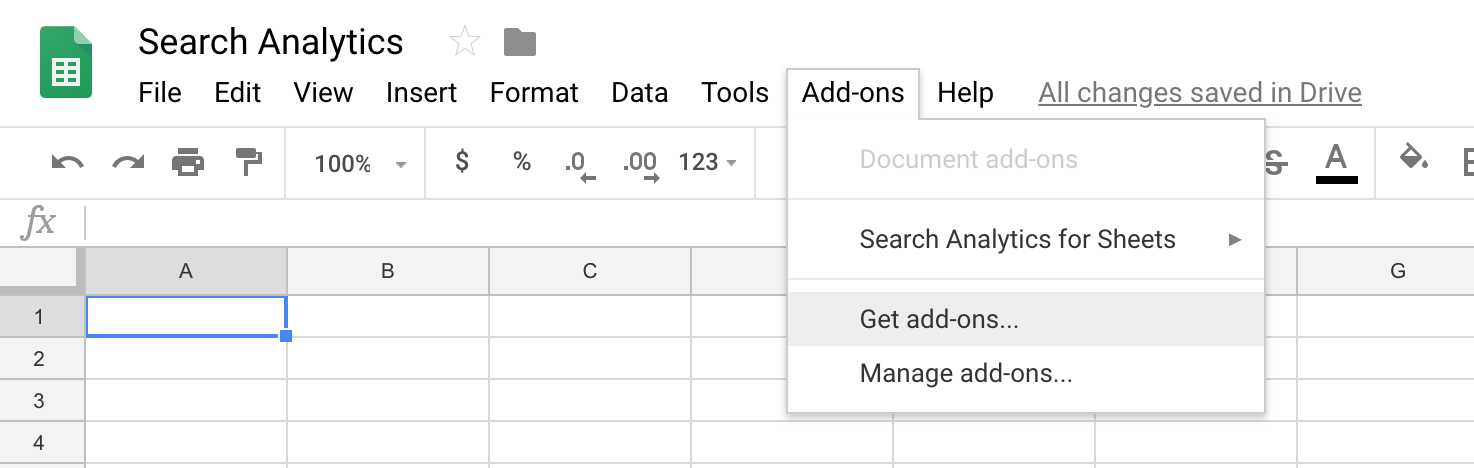

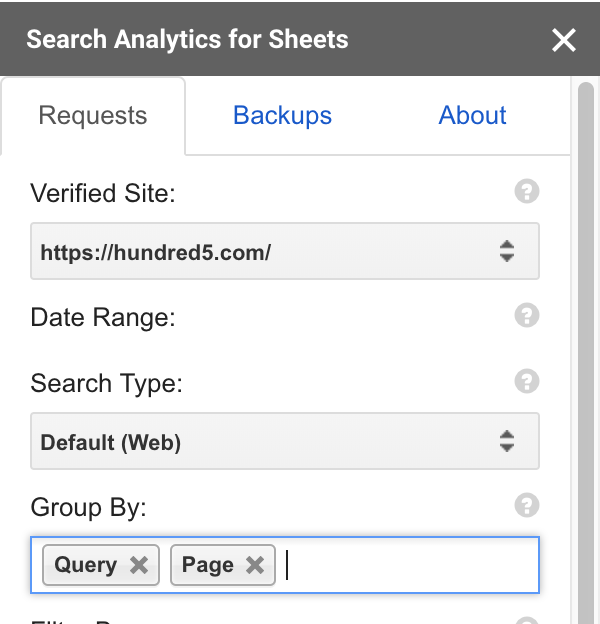

Pulling the data with Search Analytics for Sheets Add-on

Once you click "Get add-ons", you should search for "Search Analytics for Sheets" and install it.

Go to Add-ons again. Now that the option is available, choose Search Analytics for Sheets and then "Open Sidebar".

Working with GSC Data to find low-hanging fruit

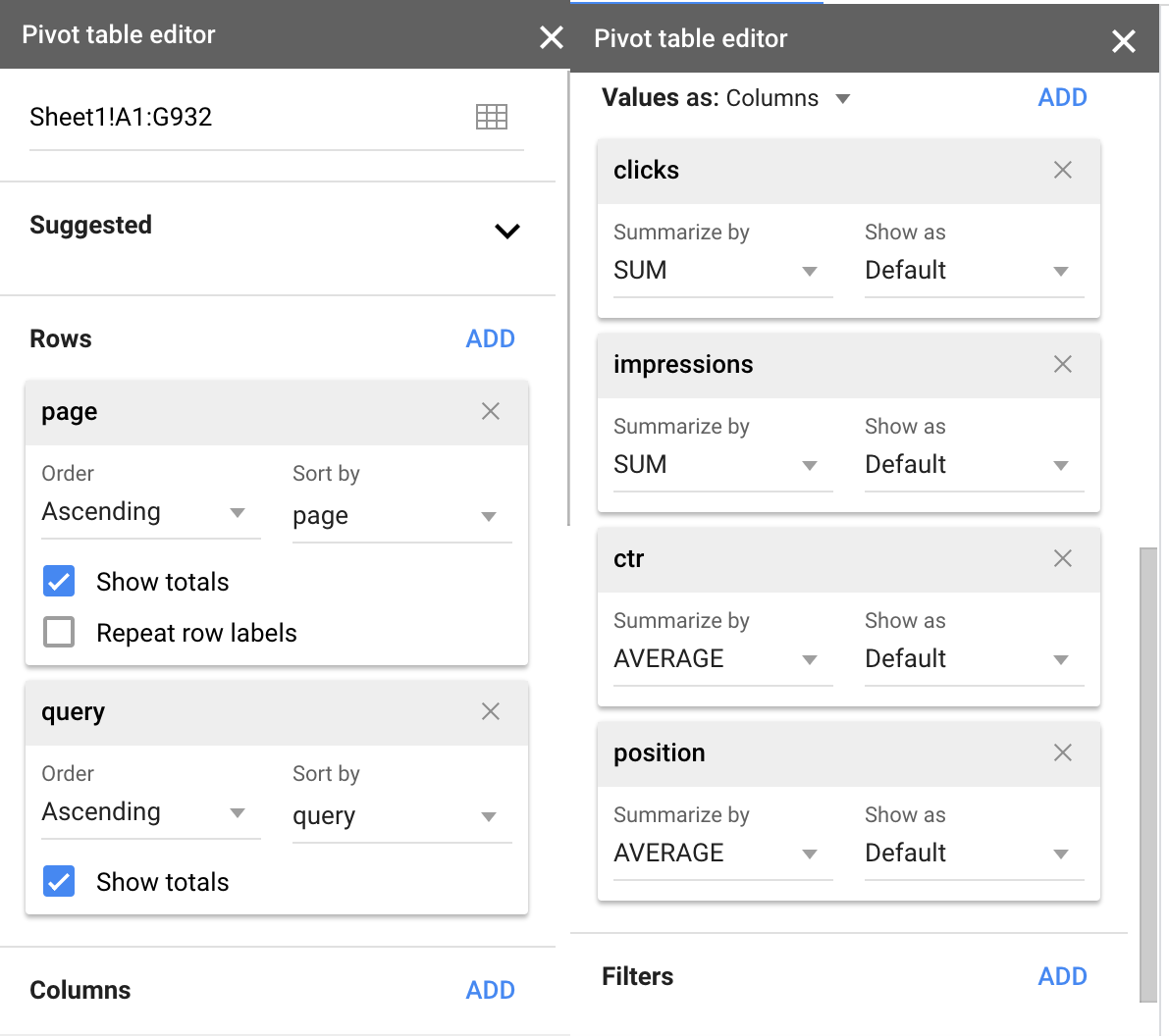

Rows:

- Page, Order: Ascending

- Query, Order: Descending, Sort By: SUM of Impressions (can be added after adding impressions as values)

Columns: None

Values:

- Clicks, Summarize By: SUM

- Impressions, Summarize By: SUM

- CTR, Summarize By: AVERAGE

- Position, Summarize By: AVERAGE

Filters: None

To make the table easier to read and work with, select the entire CTR column and format it as a percentage, then select the entire Position column and reduce the number of decimals to one or two.
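If you prefer to stay in R rather than build the pivot table in Google Sheets, the same summary can be approximated with base R. The sketch below is only an illustration under a few assumptions: the column names (`page`, `query`, `clicks`, `impressions`, `ctr`, `position`) are assumed to match your export, and the small data frame is made-up stand-in data, not real GSC output.

```r
# Made-up stand-in for the exported GSC data (assumed column names)
gsc_data <- data.frame(
  page        = c("/a", "/a", "/a", "/b"),
  query       = c("q1", "q1", "q2", "q3"),
  clicks      = c(5, 3, 1, 10),
  impressions = c(100, 50, 400, 200),
  ctr         = c(0.05, 0.06, 0.0025, 0.05),
  position    = c(9, 11, 10, 3),
  stringsAsFactors = FALSE
)

# Values: SUM of clicks and impressions per page/query pair
sums <- aggregate(cbind(clicks, impressions) ~ page + query,
                  data = gsc_data, FUN = sum)

# Values: AVERAGE of CTR and position per page/query pair
means <- aggregate(cbind(ctr, position) ~ page + query,
                   data = gsc_data, FUN = mean)

# Combine both summaries into one table
pivot <- merge(sums, means, by = c("page", "query"))

# Rows: page ascending, then query by SUM of impressions descending
pivot <- pivot[order(pivot$page, -pivot$impressions), ]
rownames(pivot) <- NULL

pivot
```

If your export already has exactly one row per page and query pair, the aggregation changes nothing and only the sorting step matters.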

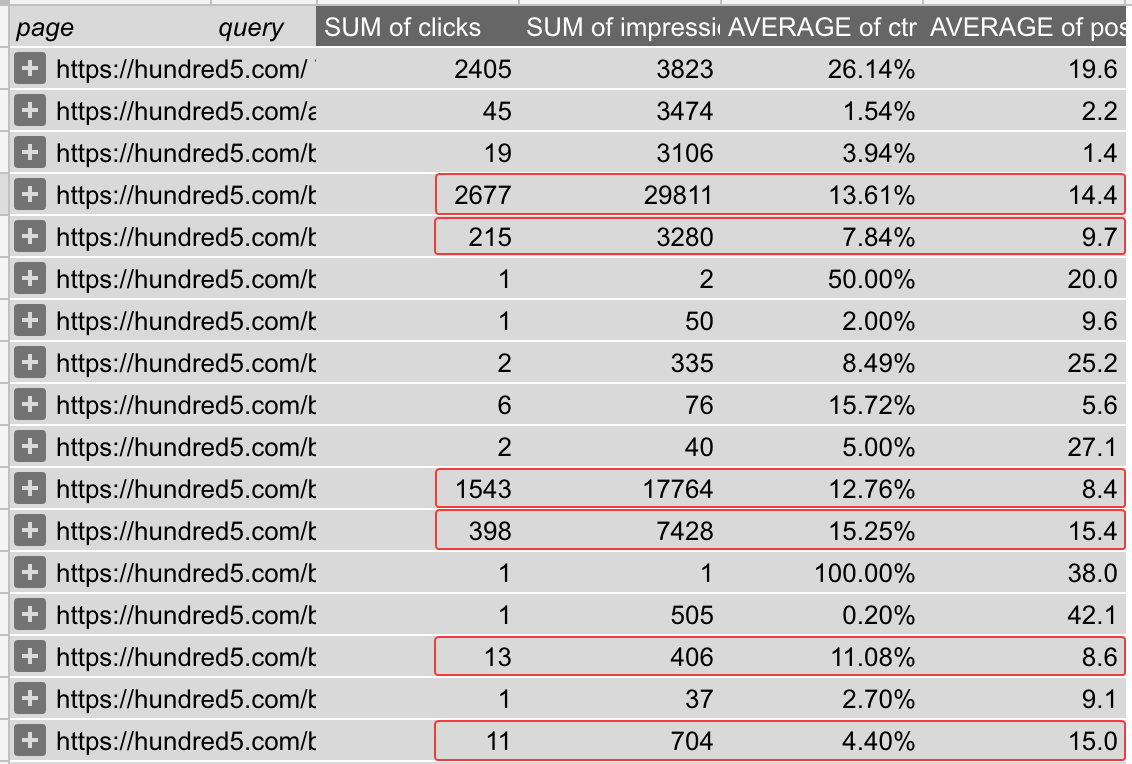

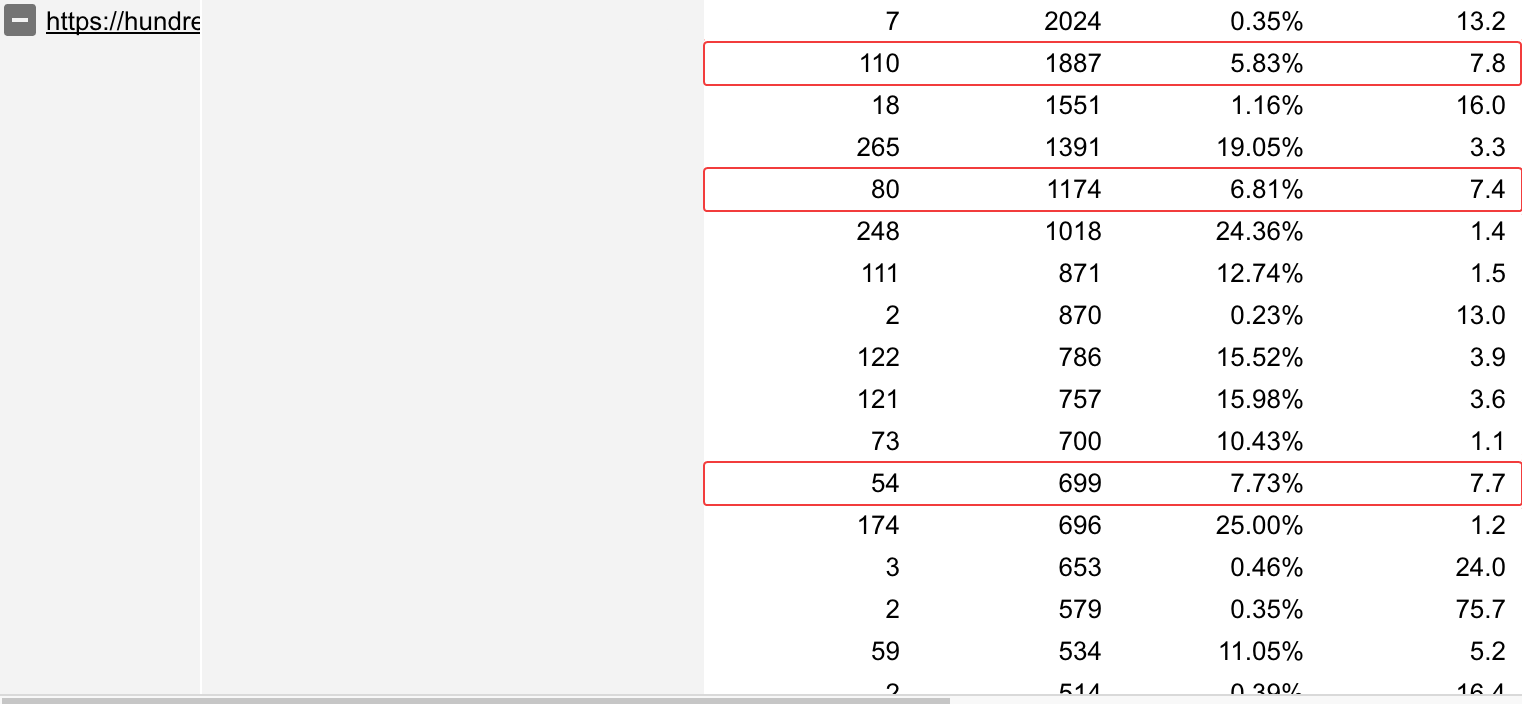

Now it's time to select the pages we're going to work with. We're looking for pages that have keywords with a significant search volume (read: impressions) while ranking roughly between 8th and 12th position. To do that, first we'll identify the pages with enough impressions:

- Adjust the title to better address the keyword (of course, you don't want to kill your rankings for keywords with higher search volume if you have that already)

- Address the target keyword in the first paragraph

- Add another paragraph that addresses the keyword

- Build links towards this page.
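The selection step described earlier (queries with enough impressions that rank roughly between 8th and 12th position) can also be sketched in R. This is a minimal illustration, not the exact method used in the article: the `min_impressions` threshold is a hypothetical value you would tune for your own site, and the data frame is made-up stand-in data with assumed column names.

```r
# Made-up stand-in for the summarized page/query table (assumed column names)
pivot <- data.frame(
  page        = c("/a", "/a", "/b", "/c"),
  query       = c("q1", "q2", "q3", "q4"),
  impressions = c(150, 400, 200, 30),
  position    = c(10, 9.5, 3, 11),
  stringsAsFactors = FALSE
)

# Hypothetical threshold for "enough impressions"; adjust for your site
min_impressions <- 100

# Keep queries with enough impressions that rank between 8th and 12th position
candidates <- subset(
  pivot,
  impressions >= min_impressions & position >= 8 & position <= 12
)

# Show the biggest opportunities first
candidates <- candidates[order(-candidates$impressions), ]
rownames(candidates) <- NULL

candidates
```

Each remaining row is a page and query pair that could be worth the optimization steps listed above.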

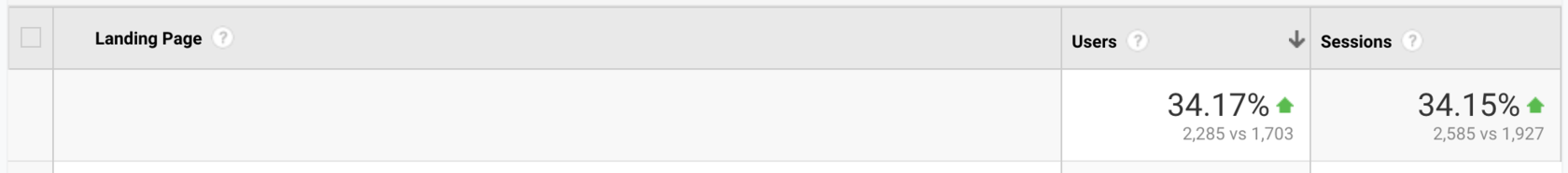

Results you can expect with this approach
